So you’ve played around with RunSFM and produced some cool-looking point clouds. But let’s go even further and create a textured mesh model for extra coolness. TextureMesh is a program that does exactly that: it texture maps a 3D mesh. It’s designed to be used in conjunction with RunSFM and MeshLab. The workflow is as follows:

RunSFM -> PMVS point cloud -> MeshLab -> TextureMesh -> ViewMesh or MeshLab

The end result can be seen in this video.

TextureMesh uses the files inside the pmvs directory, plus an additional mesh PLY file that you’ll have to create manually, and determines a suitable texture mapping for each triangle face based on the camera information. Since most faces are seen by more than one camera, it uses a fancy Loopy Belief Propagation algorithm to smooth out the assignment, so that neighbouring faces tend to take their texture from the same camera. Without this step you’ll see lots of inconsistent illumination across the surface.
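To make “smoothing out the assignment” concrete, here is the kind of energy such a step minimizes, written under the standard Markov random field formulation. Each face f gets a camera label l_f. D_f is a per-face data cost, and it is hypothetical here because the post doesn’t say how TextureMesh scores a camera for a face. The pairwise term V follows the author’s description of SmoothnessCost in the comments: 0 when neighbouring faces use the same camera, and a constant (written λ) otherwise. 𝒩 is the set of pairs of adjacent faces.

$$
E(\mathbf{l}) = \sum_{f \in F} D_f(l_f) + \sum_{(f,g) \in \mathcal{N}} V(l_f, l_g),
\qquad
V(a, b) = \begin{cases} 0 & a = b \\ \lambda & a \neq b \end{cases}
$$

Loopy BP approximately minimizes E by passing min-sum messages between adjacent faces. Because a mesh’s face graph has cycles, the result is not guaranteed to be the global minimum.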

Download

Last update: 10/08/2013

TextureMesh-0.2.1.tar.gz (added Wavefront OBJ support, can now load in MeshLab)

Latest Windows binary compiled using Visual Studio 2010:

Compiling

Type make to compile both programs, or open the project in CodeBlocks.

Usage

TextureMesh requires an additional mesh.ply file, which you create and place at

pmvs/models/mesh.ply

Instructions for creating this file are in the section below. Once you have created it, call TextureMesh as follows:

./TextureMesh [pmvs_dir]

where pmvs_dir is the directory that PMVS generates (leave out the square brackets). Then view the output with

./ViewMesh [pmvs_dir]

Instructions for using ViewMesh are shown on screen. Alternatively, the model can be viewed in MeshLab by loading [pmvs_dir]/models/output.obj.

Limitations

TextureMesh loads all the images into memory, even if only parts of an image are used. Images wider than 512 pixels are shrunk; this is a hard-coded value. Hopefully you don’t run out of memory!
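To get a feel for how much memory this takes, here is a small back-of-the-envelope sketch. It assumes oversized images are scaled down to exactly 512 pixels wide with their aspect ratio preserved (the post doesn’t spell out the exact rule) and that pixels are 8-bit, 3-channel, which is what cv::imread returns by default. ShrunkSize and ImageBytes are hypothetical helpers, not TextureMesh code.

```cpp
#include <cassert>
#include <cstddef>

struct Size {
    int width;
    int height;
};

const int kMaxWidth = 512;  // the hard-coded limit mentioned above

// Hypothetical helper: shrink images wider than max_width, keeping aspect ratio.
Size ShrunkSize(Size s, int max_width = kMaxWidth) {
    assert(s.width > 0 && s.height > 0);
    if (s.width <= max_width) return s;  // narrow images are left alone
    double scale = static_cast<double>(max_width) / s.width;
    int h = static_cast<int>(s.height * scale + 0.5);  // round to nearest pixel
    return Size{max_width, h > 0 ? h : 1};
}

// Rough bytes needed to keep every image in memory at once,
// assuming 8-bit, 3-channel pixels.
std::size_t ImageBytes(const Size* sizes, std::size_t n, int max_width = kMaxWidth) {
    std::size_t total = 0;
    for (std::size_t i = 0; i < n; ++i) {
        Size s = ShrunkSize(sizes[i], max_width);
        total += static_cast<std::size_t>(s.width) * s.height * 3;
    }
    return total;
}
```

At the 512-pixel limit, a 3072×2048 photo takes about 0.5 MB (512 × 341 × 3 bytes). At full resolution it takes about 18 MB. Raising the limit high enough to keep such images unshrunk, as requested in the comments, therefore multiplies memory use by roughly 36 for images of that size.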

Sample dataset

Here is the same castle dataset used in the video. The images came from http://cvlab.epfl.ch/~strecha/multiview/denseMVS.html

castle-data.7z (53.4 MB)

You can run either TextureMesh or ViewMesh on it to verify things are working.

License

Simplified BSD license. See LICENSE.txt inside the package.

How to create a mesh model in Meshlab

The following instructions assume you already have a directory where you’ve run RunSFM. The first step is to create a 3D mesh from the point cloud. Go to your project directory and open pmvs/models/option-0000.ply in MeshLab. You’ll want to do a Poisson surface reconstruction. Here’s how I typically do it in MeshLab:

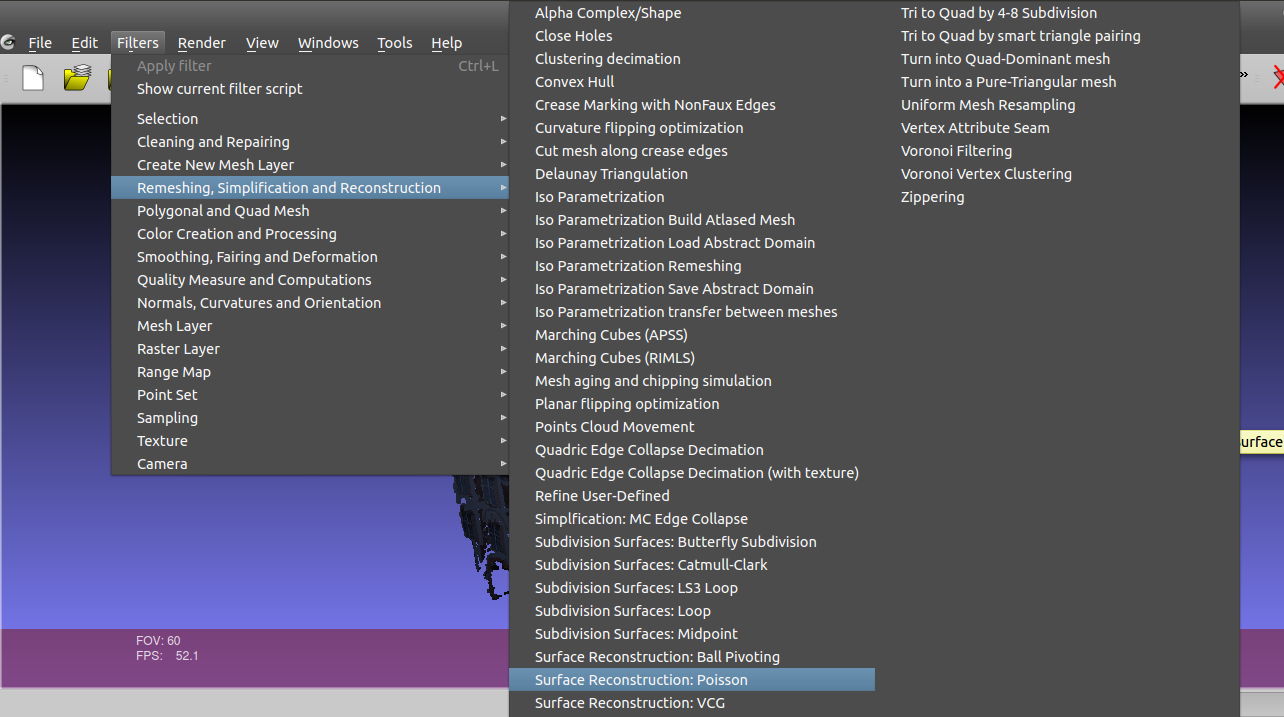

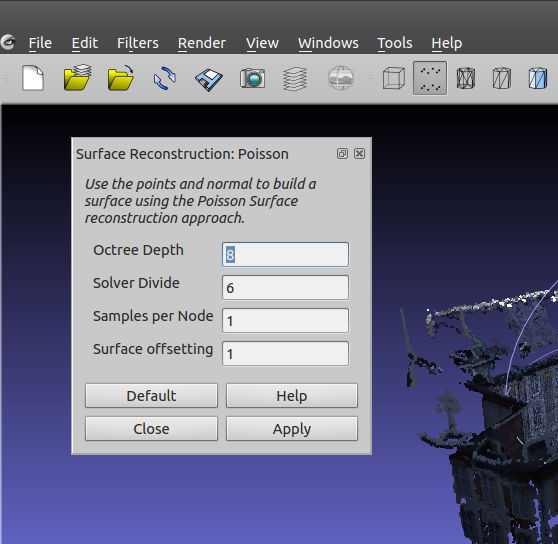

Perform a Poisson surface reconstruction as shown.

Enter 8 for Octree Depth. I find the default 6 too coarse.
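Depth 8 looks much sharper than 6 because, in Poisson surface reconstruction, an octree depth of d caps the finest grid at 2^d cells along each axis of the bounding box. Going from 6 to 8 therefore makes the smallest cells 4× smaller per axis (64 vs 256). The helpers below only do that arithmetic. The extent parameter is an illustrative stand-in for the bounding-box size, and the code ignores any padding the implementation adds around the box.

```cpp
#include <cassert>

// Finest grid resolution per axis at octree depth d: 2^d cells.
int GridResolution(int depth) { return 1 << depth; }

// Approximate edge length of the finest cell for a scene of a given extent.
double FinestCellSize(double extent, int depth) {
    return extent / GridResolution(depth);
}
```

For a scene about 10 units across, depth 8 gives cells of about 0.04 units, versus about 0.16 at depth 6.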

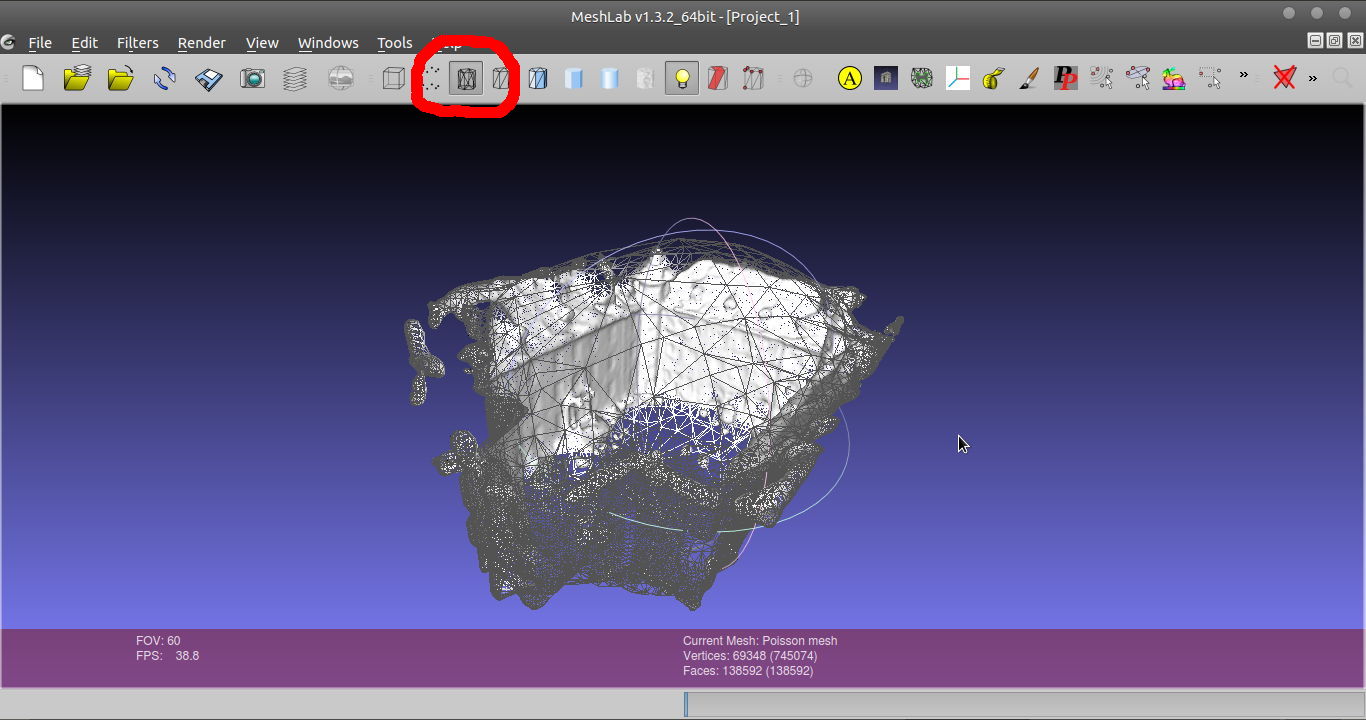

Hit Apply, then Close. I find that aspect of MeshLab’s UI, having to click Close, a bit silly. Click the button circled in red to see the mesh.

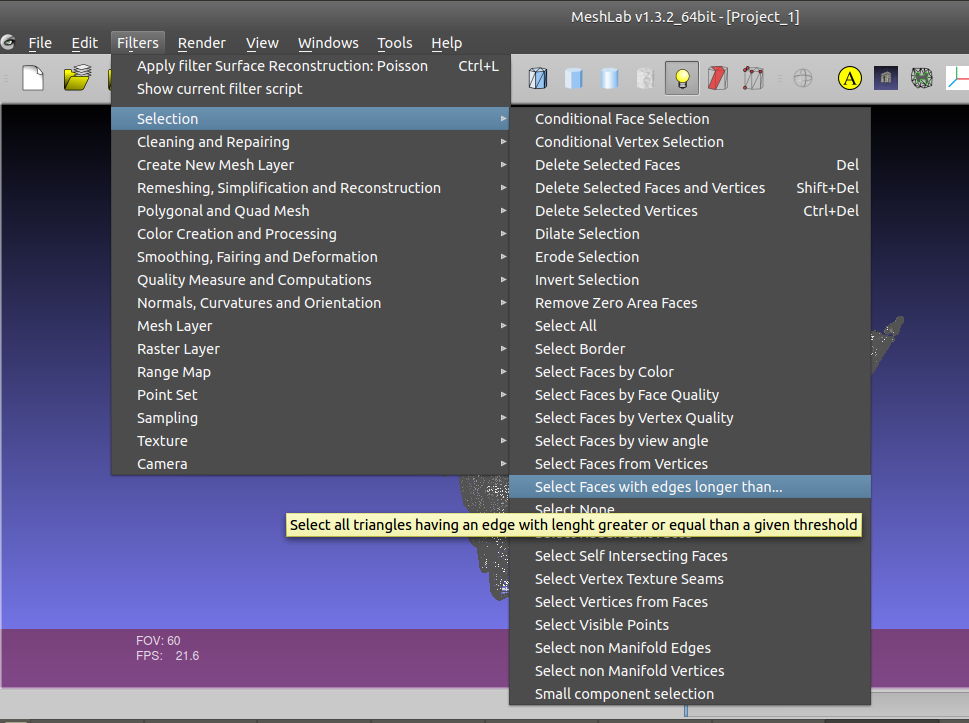

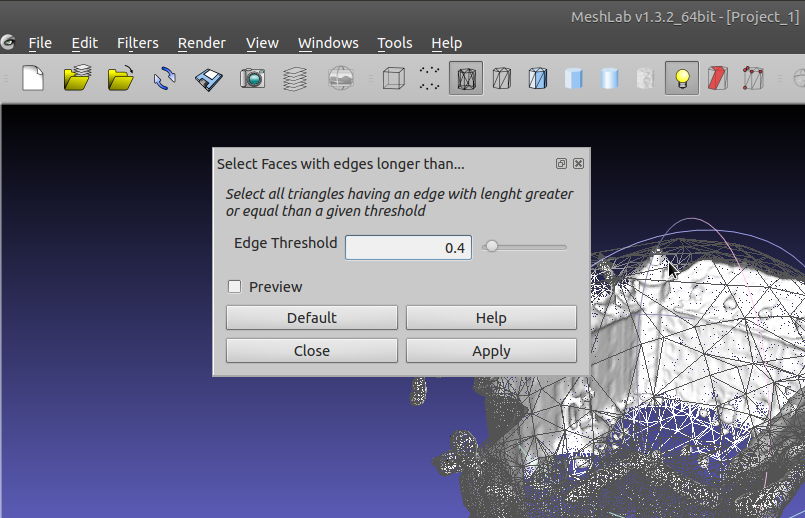

You’ll notice that the mesh extends across space where it shouldn’t. Let’s delete those faces. Select the ‘Select Faces with edges longer than …’ filter.

I tend to pick a value around 0.5. Enter a value, then click Apply and Close.
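The selection rule is simple enough to sketch: presumably a face is flagged if any of its three edges is longer than the threshold (0.5 here, in whatever units the reconstruction uses). The sketch below mimics that behaviour and is not MeshLab’s code. The Vec3 and Face types are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Face = std::array<int, 3>;  // indices into the vertex list

double Distance(const Vec3& a, const Vec3& b) {
    double dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// One flag per face: true if any of its three edges is longer than max_edge.
std::vector<bool> SelectLongEdgeFaces(const std::vector<Vec3>& verts,
                                      const std::vector<Face>& faces,
                                      double max_edge) {
    std::vector<bool> selected(faces.size(), false);
    for (std::size_t i = 0; i < faces.size(); ++i) {
        const Face& f = faces[i];
        for (int e = 0; e < 3; ++e) {
            // Edge e joins corner e and corner (e + 1) % 3.
            if (Distance(verts[f[e]], verts[f[(e + 1) % 3]]) > max_edge) {
                selected[i] = true;
                break;
            }
        }
    }
    return selected;
}
```

Too small a threshold also selects legitimate faces in sparse but real regions. That is why the result is worth checking visually before deleting anything.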

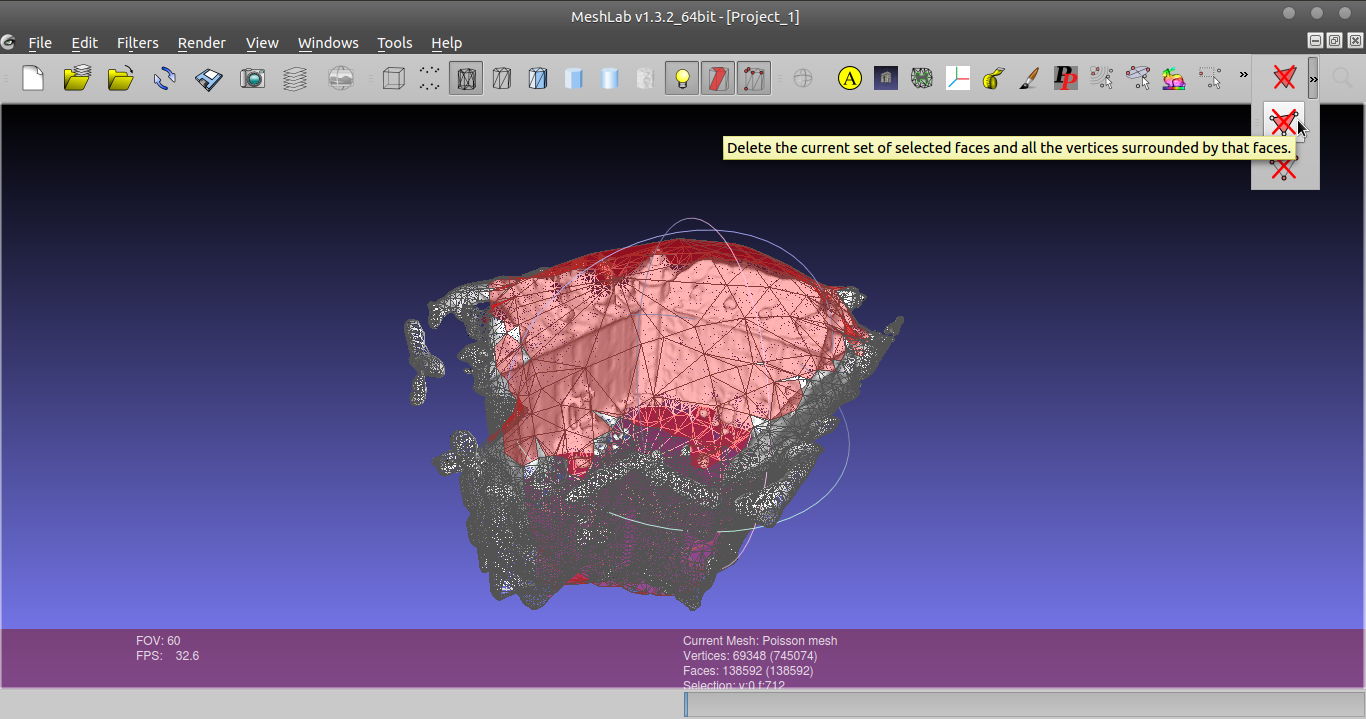

You’ll see the large faces highlighted. If you’re not happy with the selection, go to Filters -> Selection -> Select None and redo it. Once you are happy, delete the selected faces and vertices via the button shown or Shift + Del.

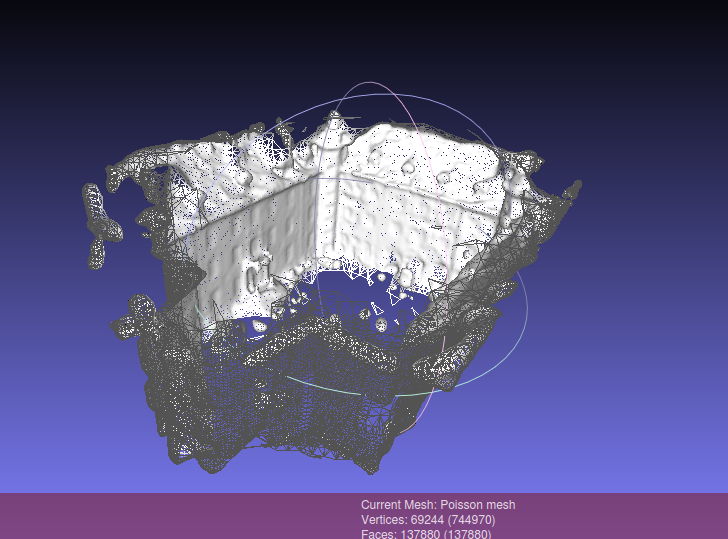

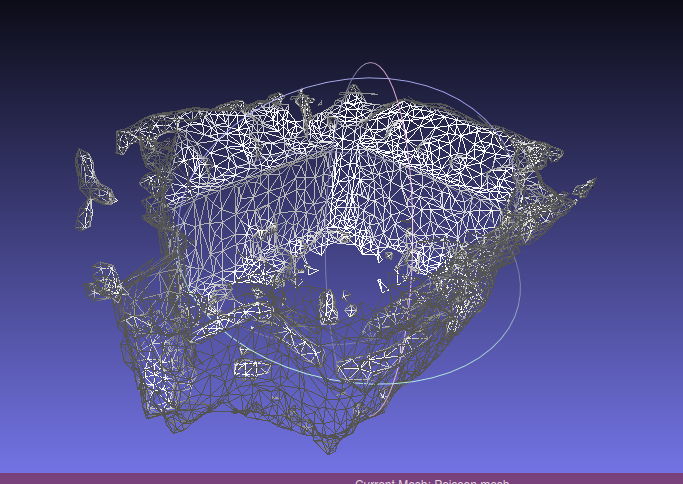

Looking good so far.

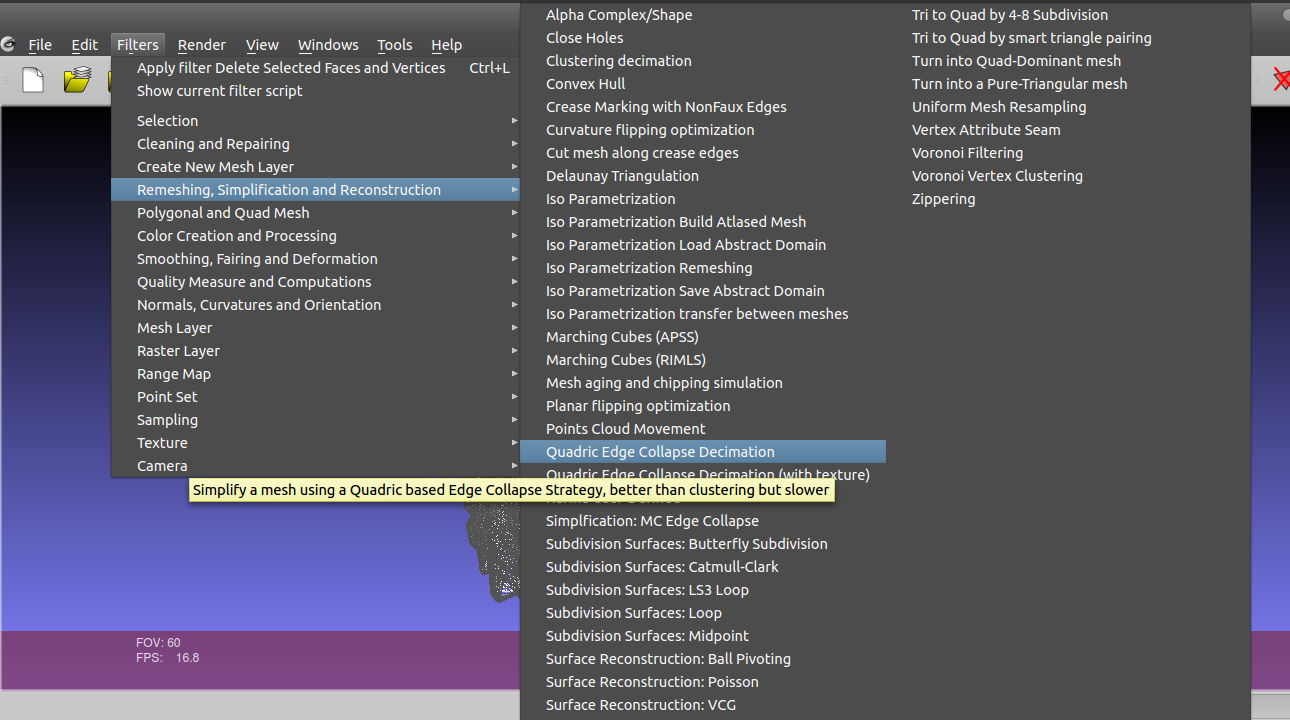

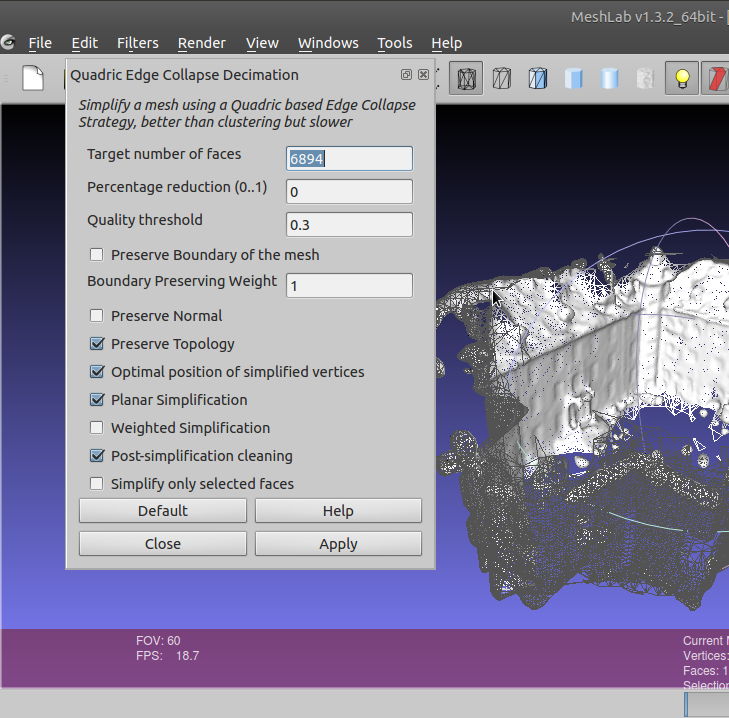

But the mesh is denser than it really needs to be, which slows down rendering. Let’s decimate it using Quadric Edge Collapse Decimation.

By default it will reduce the mesh to 10% of the original size. In this example I take it down to 1% (6894 faces) by deleting the last digit of the default value. I also tick Preserve Topology and Planar Simplification.
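The numbers above fit together with simple arithmetic. If 1% is 6894 faces, the Poisson mesh had roughly 689,400 faces. The 10% default target would then be about 68,940, and deleting its last digit divides it by ten. The tiny helper below works out a target before you type it in. It is hypothetical and not a MeshLab function.

```cpp
#include <cassert>

// Target face count when keeping `fraction` of the original faces (rounded).
long TargetFaces(long original_faces, double fraction) {
    return static_cast<long>(original_faces * fraction + 0.5);
}
```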

A simpler mesh model.

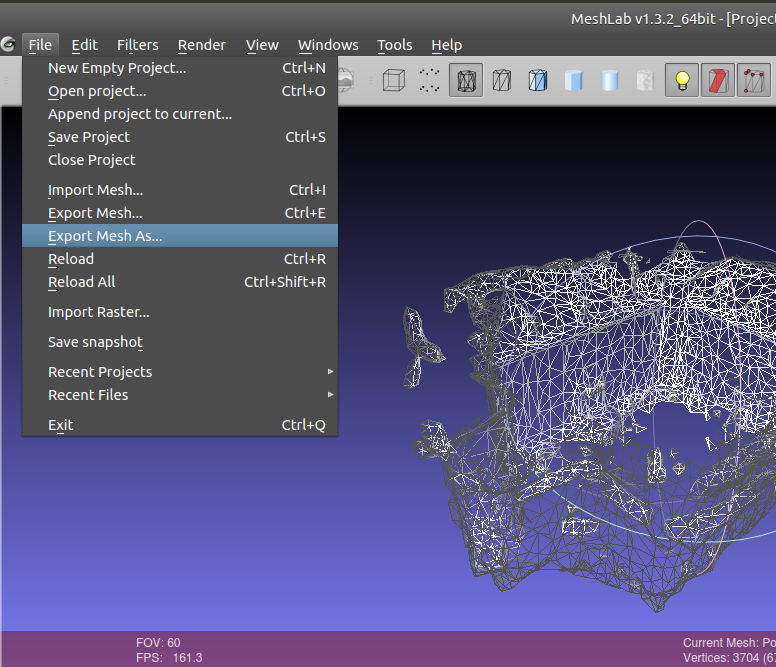

There are some floating bits and pieces here that we could clean up, but for now we’ll just save it as is. Save the mesh via File -> Export Mesh.

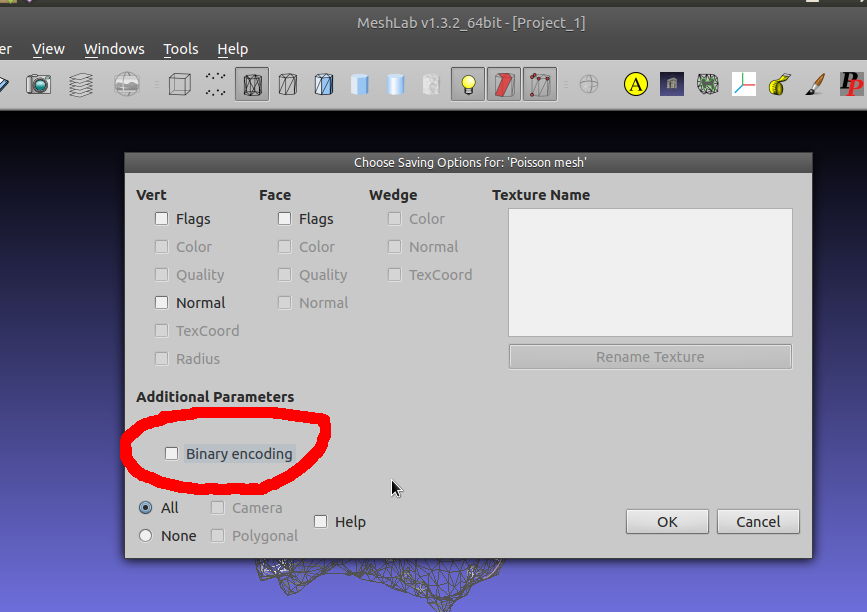

Save the mesh as mesh.ply in pmvs/models. It HAS to be this name. Make sure to untick Binary encoding and you’re done!
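Unticking Binary encoding matters because mesh.ply needs to be a plain-text PLY file (presumably TextureMesh’s loader only reads ASCII). To check what MeshLab actually wrote, look at the header’s format line. Below is a minimal header reader, written as a hypothetical helper rather than TextureMesh’s own loader.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>

struct PlyHeader {
    bool ascii = false;
    long num_vertices = 0;
    long num_faces = 0;
};

// Reads a PLY header up to "end_header". Returns false if the stream does not
// start with the "ply" magic line or the header is truncated.
bool ReadPlyHeader(std::istream& in, PlyHeader& h) {
    std::string line;
    if (!std::getline(in, line) || line.compare(0, 3, "ply") != 0) return false;
    while (std::getline(in, line)) {
        std::istringstream ss(line);
        std::string keyword;
        ss >> keyword;
        if (keyword == "format") {
            std::string fmt;
            ss >> fmt;                      // "ascii" or "binary_little_endian", ...
            h.ascii = (fmt == "ascii");
        } else if (keyword == "element") {
            std::string name;
            long count = 0;
            ss >> name >> count;
            if (name == "vertex") h.num_vertices = count;
            else if (name == "face") h.num_faces = count;
        } else if (keyword == "end_header") {
            return true;
        }
    }
    return false;
}
```

An ASCII export contains the line format ascii 1.0. A binary export shows something like format binary_little_endian 1.0 instead.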

Hello,

thank you for your code!

Is it possible that your TextureMesh archive is corrupted? I can’t unzip it (while I can, for example, unzip the ViewMesh file).

Can you suggest something (or resend it to me by email)?

Thanks!

Fixed now. It was actually a bz2 file, with the wrong extension. I’ve re-uploaded so it’s a correct tar.gz file.

Hi Nghia,

Wanted to post on the RunSFM page, but comments there were closed. I am not at all familiar with Ubuntu. Do you think your newest version, RunSFM-1.4.3, can work under Ubuntu 12.04? Ubuntu 11.04 seems too old. If so, what packages should be installed before using RunSFM? Thanks!

Thanks for making me aware of the closed comment. The newest version works under 12.10, which I am running. So it should work with 12.04.

Hi Nghia,

I am glad to tell you that RunSFM now works well. But when compiling TextureMesh I ran into the following message. I have installed OpenCV 2.3 and libann-dev. What seems to be the problem? Kindly answer!

Xiaoming

```
peng@ubuntu:~/sFM/TextureMesh$ make
test -d bin/Debug || mkdir -p bin/Debug
test -d obj/Debug || mkdir -p obj/Debug
g++ -Wall -g -c ANNWrapper.cpp -o obj/Debug/ANNWrapper.o
g++ -Wall -g -c LoopyBP.cpp -o obj/Debug/LoopyBP.o
g++ -Wall -g -c main.cpp -o obj/Debug/main.o
g++ obj/Debug/ANNWrapper.o obj/Debug/LoopyBP.o obj/Debug/main.o -lANN -lopencv_core -lopencv_highgui -o bin/Debug/TextureMesh
/usr/bin/ld: cannot find -lANN
collect2: ld returned 1 exit status
make: *** [out_debug] Error 1
```

libANN needs to be installed manually; get it from http://www.cs.umd.edu/~mount/ANN/

Building it generates a file called libANN.a. Copy it to /usr/local/lib, run ldconfig, and try again.

Thanks a lot! It now works. One more question: I am now trying to do the inverse, using a textured 3D model generated by TextureMesh to render arbitrary 2D views of the model. Any suggestions?

In the code I use a camera class to determine the position and viewing direction. You can modify that to suit your needs, and use glReadPixels to grab a screenshot and dump it to disk.
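A sketch of the “dump it to disk” half. glReadPixels(0, 0, w, h, GL_RGB, GL_UNSIGNED_BYTE, buf) fills the buffer starting from the bottom row of the framebuffer, so the rows need flipping before the pixels are written out as a normal top-down image. Call glPixelStorei(GL_PACK_ALIGNMENT, 1) first so rows are tightly packed, because the default alignment is 4. The GL calls appear only in comments so the sketch compiles without OpenGL. FlipRows and WritePPM are hypothetical helpers, not ViewMesh code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Flip a tightly packed RGB buffer vertically (bottom-up -> top-down).
// Typical source: glPixelStorei(GL_PACK_ALIGNMENT, 1);
//                 glReadPixels(0, 0, width, height, GL_RGB, GL_UNSIGNED_BYTE, buf);
std::vector<unsigned char> FlipRows(const std::vector<unsigned char>& rgb,
                                    int width, int height) {
    const std::size_t row = static_cast<std::size_t>(width) * 3;
    std::vector<unsigned char> out(rgb.size());
    for (int y = 0; y < height; ++y) {
        std::copy(rgb.begin() + y * row, rgb.begin() + (y + 1) * row,
                  out.begin() + (height - 1 - y) * row);
    }
    return out;
}

// Write a binary PPM (P6), which most image viewers and converters can read.
bool WritePPM(const std::string& path, const std::vector<unsigned char>& rgb,
              int width, int height) {
    std::ofstream f(path, std::ios::binary);
    if (!f) return false;
    f << "P6\n" << width << " " << height << "\n255\n";
    f.write(reinterpret_cast<const char*>(rgb.data()),
            static_cast<std::streamsize>(rgb.size()));
    return static_cast<bool>(f);
}
```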

Thank you for your immediate reply. Could you please get more specific about the code for “camera class to determine the position and viewing direction”?

Oh my bad, I mean the ViewMesh program. Check the code in there

Hello. Thanks for your code! I found that the colors are wrong in the ViewMesh tool, because OpenCV stores images in BGR order while OpenGL expects RGB here. So in ViewMesh we should add “cv::cvtColor(img,img,CV_BGR2RGB);” to convert the image and display the original colors of the texture. Thanks!
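The conversion just swaps the first and third byte of every pixel. The sketch below does this by hand on a plain buffer so that it compiles without OpenCV. BgrToRgb is a hypothetical name. Another option, assuming OpenGL 1.2 or later, is to pass GL_BGR as the format argument of glTexImage2D and let OpenGL do the reordering on upload.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// In-place BGR -> RGB swap on a tightly packed 8-bit, 3-channel buffer,
// the same reordering cv::cvtColor(img, img, CV_BGR2RGB) performs.
void BgrToRgb(std::vector<unsigned char>& pixels) {
    for (std::size_t i = 0; i + 2 < pixels.size(); i += 3) {
        std::swap(pixels[i], pixels[i + 2]);  // swap blue and red, keep green
    }
}
```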

It’s a bit weird. I didn’t have to do the conversion for one dataset, but for another I did (I think). Another user found the same issue as you.

I am trying to run TextureMesh on a Windows machine. How do I go about getting it to work? I have several RunSFM projects I can use your code on, and I have installed CodeBlocks and the GCC compiler.

You’ll need ANN library (http://www.cs.umd.edu/~mount/ANN) and OpenCV installed. Modify the CodeBlocks project to point to those libraries and you should be able to compile an exe.

So I need to put the OpenCV folder and the ANN folder in C:\. Then in CodeBlocks, do I need to add these libraries with the Library Finder?

Add them to “Search directories”: the header directories under the Compiler tab and the lib directories under the Linker tab.

Then add the library files themselves under “Linker settings”. All these options are found by right-clicking the project and selecting “Build options”.

I am getting this error after doing what you described above: fatal error: ANN/ANN.h: No such file or directory.

I am using GNU GCC Compiler.

Also is it possible for you post the .exe?

That’s from the ANN library; there’s a Windows lib version on their page. Do the same thing as for OpenCV: add the header directory and the lib directory. I don’t have an exe since I rarely use Windows at home. If you get stuck I can get around to making one, but it’ll have to wait.

Thanks again, but this is getting beyond my knowledge since I am not a programmer. I have been using your RunSFM for creating point clouds of structures, and TextureMesh and ViewMesh are very good because they make the result look nice. So I would like to have exes for TextureMesh and ViewMesh, but please take your time, there’s no hurry.

It’s still not compiling for me. I am not a programmer, which is why I will probably need the exes for TextureMesh and ViewMesh. Please post them whenever you have time; there’s no hurry.

Just made some exe, link above.

On a Windows machine, how do I go about running your exe?

From the command prompt.

Very cool software. After running through the Python Photogrammetry Toolbox, it outputs 4 different option files (option-0000, option-0001, option-0002, option-0003). Is your code written so that it can interpret all of them? From what I can tell, it takes my first option file (with associated images) and stitches them onto the entire mesh (which should encompass all the options). Is it as simple as adding ALL the photo numbers to the “timages” line in the first option file?

Granted, I haven’t looked much at your viewer since it worked right off the bat, but how easy is it to turn this output into something MeshLab or Blender can understand? So far the viewer does very well, but I’d like to manipulate the model further in another application.

Thanks for the help and awesome stuff!

You’re right that I only read one ‘option’ file; this is more due to laziness than anything 🙂 I’ve updated the code to support multiple files now.

It should be trivial to make it work with Meshlab/Blender, but I don’t know what format should be used (haven’t researched much). If you find a suitable file format, that is easy to implement, let me know and I’ll add it to code.

Thanks for the quick response. I downloaded the new executable with no luck. It still only ran through the first option file. Is there an option I have to give it?

As for MeshLab: I notice that the two output files are a .mesh and a .point. I’m not sure how the viewer interprets the .mesh file, and the .point file is binary. So if it’s easy to interpret this stuff with Blender or MeshLab, I sure don’t know how to do it. I typically work with PLY files in MeshLab, but to my knowledge they do not support textures. How hard would it be to output a DAE (COLLADA) file? I was thinking of something like “TextureMesh.exe [path to pmvs] -DAE”.

Oh just realised I didn’t update the Windows binary. I’ll get around to that soon.

I’m not familiar with COLLADA, but I do recall some MeshLab users being able to texture map their meshes; I just can’t remember how. I’ll have a look this weekend.

Awesome, thanks! Let me know when you get a chance to upload it.

I’ve already used MeshLab to texture a mesh, but yours is way better, and it’s automated (which MeshLab isn’t). However, the only way for me to view the textured mesh from your software right now is with your viewer. I was hoping to output to a common format that could be viewed in other applications.

Thanks for your help!

Just uploaded the updated TextureMesh.exe. I think the OBJ file format supports texture mapping, I’ll have a look into it when I’m free.

Just ran the new version and the results are very impressive! Let me know if you get the OBJ export working. I think this work is ahead of the curve w.r.t. texturing; it’s definitely the best I’ve seen out there. I think the last link in the chain is making it portable to other viewers (OBJ, etc.). Nice work and thanks for the help!

Hi there

Thanks for creating this nice mesh texturing code, I have been playing around with it in my research! I also had problems visualizing the mesh well and wanted to export it to a standard format, so I have written a Matlab function which converts the .mesh file created by TextureMesh into a standard Wavefront OBJ file and associated MTL materials file. The textured mesh can then easily be viewed in Meshlab and other 3D viewers.
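For readers who want to do the same kind of conversion, OBJ texturing comes down to three pieces: vt lines (texture coordinates), face lines of the form f v/vt, and a companion MTL file whose map_Kd entry names the image. Below is a minimal sketch of such an export. It assumes each triangle already carries its own UVs and the index of the camera image it samples from. TexturedTri, WriteObj and WriteMtl are hypothetical, not Alex’s script or TextureMesh’s exporter. The sketch writes three vertices per triangle, which bloats the file but lets every face use a different image.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// One textured triangle: 3 positions, 3 UVs, and the camera image it samples.
struct TexturedTri {
    std::array<std::array<double, 3>, 3> pos;
    std::array<std::array<double, 2>, 3> uv;  // in [0,1], v measured from the bottom
    int camera;                               // index into the image list
};

// Writes a minimal Wavefront OBJ that references `mtl_name` and uses one
// material per camera image. OBJ indices are 1-based.
void WriteObj(std::ostream& obj, const std::string& mtl_name,
              const std::vector<TexturedTri>& tris) {
    obj << "mtllib " << mtl_name << "\n";
    std::size_t next = 1;  // index of the next vertex / texture coordinate
    int current = -1;      // material currently in effect
    for (const TexturedTri& t : tris) {
        for (int k = 0; k < 3; ++k)
            obj << "v " << t.pos[k][0] << " " << t.pos[k][1] << " " << t.pos[k][2] << "\n";
        for (int k = 0; k < 3; ++k)
            obj << "vt " << t.uv[k][0] << " " << t.uv[k][1] << "\n";
        if (t.camera != current) {  // switch material only when the image changes
            obj << "usemtl cam" << t.camera << "\n";
            current = t.camera;
        }
        obj << "f " << next << "/" << next << " " << next + 1 << "/" << next + 1
            << " " << next + 2 << "/" << next + 2 << "\n";
        next += 3;
    }
}

// Writes one material per image; map_Kd points at the texture file.
void WriteMtl(std::ostream& mtl, const std::vector<std::string>& images) {
    for (std::size_t i = 0; i < images.size(); ++i)
        mtl << "newmtl cam" << i << "\nKd 1 1 1\nmap_Kd " << images[i] << "\n\n";
}
```

Write the two streams to files (for example output.obj and output.mtl). Texture paths in an MTL file are usually resolved relative to the .mtl file itself, so keep the images alongside it or use relative paths.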

Email me if you are interested and I can send you my script!

Alex

Email sent!

Hi, Alex. That’s awesome. Nghia, if Alex is OK with it, would you mind emailing me his script?

I’ve just implemented this code in TextureMesh now. I’ll package it up and upload for Windows after I get some sleep 🙂

Code and binary is updated, try it out.

I just ran it, and it looks awesome! Thanks for the help! Truly impressive stuff.

Thanks for testing it on Windows for me 🙂

Great blog, by the way. I always find something interesting here! I was wondering if it would be possible to create a release with the hard-coded 512-pixel maximum dimension disabled or increased (i.e. up to at least 4000 pixels). I am using workstations with dual Xeons and 64 GB+ of RAM, so memory allocation shouldn’t be a problem. I think it would be interesting to see what could be achieved with your code (we work with big meshes and lots of images to be textured; unfortunately MeshLab texturing is very unstable for these). I’m working on Windows at the moment, as that is what our modelling software is built for.

Thanks

Thomas

That’s a pretty serious machine 🙂 Does your graphics card support textures up to 4096×4096? I’ve put in very little hardware error checking. Let me set up my Windows box and get back to you.

They are running Quadro 6000s, I think (they were put together for reservoir modelling, but they also chew through image processing tasks on the GPU like anything 😉). I’ve processed a few really big SfM-MVS datasets on them (one being ~3500 images), but I had to implement an image set segmentation routine to get around the computational complexity and matching error issues of pairwise matching (it calculates specified matches for Changchang’s software). The models are large scale (1000 m²+) and better than our Riegl scanner in terms of surface noise and point density, though missing patches in shadowed areas can be a bit of an issue. They also have far too many points to do anything useful with, which is why I am looking to mesh, decimate and texture map them.

Thanks for your help Nghia!

Thomas

PS: Just checked. The Quadro 5000 and 6000 support 16k × 16k textures.

Try this

http://nghiaho.com/uploads/code/ViewMesh4096.exe

Thanks Nghia. I will give it a try at Uni today.

Thomas

Hi Nghia

I’m getting an error when running the standard TextureMesh from Git Bash on Windows:

```
home-pc /c/NghiaHo/TextureMesh
$ ./TextureMesh [/c/cave/new.nvm.cmvs/00]
Error opening [/c/cave/new.nvm.cmvs/00]/visualize/00000000.jpg
```

mesh.ply is placed in the PMVS output directory (/c/cave/new.nvm.cmvs/00/models). As far as I can tell, all the precompiled libraries are in the TextureMesh folder (I take it these all come with the Windows binary release?). I was trying to use PMVS output from a VisualSFM project. Could this be the problem?

Thomas

Remove the [ ] brackets. It’s not meant to be there, despite what the onscreen instruction may imply.

Apologies. I do not use bash very often. Will retry it

If you manage to get it running, take some screenshots! Sounds like you’ve got really high-quality stuff.

Hey, Nghia!

The algorithm is awesome; I’m quite impressed it works so well, even on large datasets. However, using a large number of images produces color artifacts caused by subtle lighting changes between shots.

I have two questions:

1. Do you have an idea how can these borderline pixels be blended with each other?

2. Do you know of some kind of procedure to UV map these texture coordinates onto a single image?

appreciate it very much, and keep up the good work!

Andrew

Hi,

I’m glad you like it 🙂 The problem you bring up is a difficult one, and my knowledge of computer graphics is rather limited. But I’ve seen some impressive blending techniques for panoramic images used by Hugin (enblend specifically). Not sure how hard it would be to adapt. As for the second question, I’ve wondered about this myself. In theory, the Poisson reconstruction produces a manifold mesh, which, once cut along some seams, can be unfolded onto a single 2D sheet of paper. You then just fill that sheet with the correct pixels from the individual texture images. A very naive alternative would be to treat each triangle individually, cut and paste it from the original texture image, and lay the pieces out in one bigger image (not very efficient because of the empty gaps).
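The “very naive” approach above (cut out each triangle and lay the pieces out in one big image) is essentially a rectangle-packing problem on the triangles’ bounding boxes. Below is a minimal shelf packer. It is a generic, swapped-in technique and not anything from TextureMesh. Rect and ShelfPack are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Pixel rectangle holding one triangle's texture patch (its bounding box);
// x and y are filled in by the packer.
struct Rect {
    int w, h;
    int x, y;
};

// Shelf packing: place rectangles left to right in rows of a fixed-width
// atlas, starting a new row when the current one is full.
// Returns the atlas height needed.
int ShelfPack(std::vector<Rect>& rects, int atlas_width) {
    int x = 0, y = 0, shelf_h = 0;
    for (Rect& r : rects) {
        assert(r.w <= atlas_width);   // every patch must fit across the atlas
        if (x + r.w > atlas_width) {  // current shelf is full: start a new one
            y += shelf_h;
            x = 0;
            shelf_h = 0;
        }
        r.x = x;
        r.y = y;
        x += r.w;
        shelf_h = std::max(shelf_h, r.h);
    }
    return y + shelf_h;
}
```

Sorting the rectangles by height (tallest first) before packing usually wastes less space. Each triangle’s UVs would then be its pixel coordinates inside its rectangle, plus the rectangle’s (x, y) offset, divided by the atlas size.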

I am an user of the mentioned enblend and had the same thoughts too. Shouldn’t it be possible to use enblend for blending?

A possible solution could be not to choose one of the available textures but to compute a weighted mean: instead of choosing the image with the highest probability, mix all available textures, each weighted by its probability. But I think this would be a bigger task.
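Here is a minimal sketch of the weighted-mean idea. It assumes that, for a given surface point, you already have a color sample from each candidate camera and a non-negative weight per camera (for example a normalized belief). WeightedBlend is a hypothetical name. One caveat: a plain weighted average tends to blur or ghost wherever the images are slightly misregistered, which is the problem multi-resolution blenders such as enblend are designed to handle.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using RGB = std::array<double, 3>;

// Blend the colors one surface point gets from several cameras,
// weighting each sample by a non-negative score.
RGB WeightedBlend(const std::vector<RGB>& samples, const std::vector<double>& weights) {
    assert(samples.size() == weights.size());
    RGB out{0.0, 0.0, 0.0};
    double total = 0.0;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        for (int c = 0; c < 3; ++c) out[c] += weights[i] * samples[i][c];
        total += weights[i];
    }
    if (total > 0.0)
        for (int c = 0; c < 3; ++c) out[c] /= total;  // normalize so weights sum to 1
    return out;
}
```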

Hello Nghia,

I have found a bug in your TextureMesh V0.2.0

In function LoadPMVSPatch(), at line 369, there is a superfluous read of a number. This leads to a page fault.

```cpp
input.getline(line, sizeof(line)); // possibly visible in
str.str(line);
str.clear();
str >> num;

input.getline(line, sizeof(line)); // image index
str.str(line);
str.clear();
str >> num; // <-- Superfluous. Not there in the input file!

for (int j = 0; j < num; j++) {
    str >> idx;
    pt.visible_in.push_back(idx);
}
```

Can you send me the file that is causing it to crash? It appears correct on all the files I’ve tested.

Sorry, I didn’t see your response because I didn’t get any email notification. Do you have an email address I should send the file to?

I’ve verified the bug and updated the package. Thanks for that.
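For comparison, here is a standalone sketch of the corrected logic: read the count once from its own line, then read exactly that many indices from the next line. It assumes the count-line-then-index-line layout described in the report above. It uses std::string and std::getline instead of the fixed char buffer. ReadIndexList is a hypothetical name, not the function in TextureMesh.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Reads one line holding a count, then one line holding that many image
// indices. The count is read exactly once; the second line is parsed only
// for indices.
bool ReadIndexList(std::istream& input, std::vector<int>& out) {
    std::string line;
    if (!std::getline(input, line)) return false;
    std::istringstream count_str(line);
    int num = 0;
    if (!(count_str >> num) || num < 0) return false;

    if (!std::getline(input, line)) return false;
    std::istringstream idx_str(line);
    out.clear();
    for (int j = 0; j < num; ++j) {
        int idx;
        if (!(idx_str >> idx)) return false;  // fewer indices than promised
        out.push_back(idx);
    }
    return true;
}
```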

Hi,

First of all, thanks for posting TextureMesh!

I’ve followed all the steps carefully, but when I run TextureMesh on mesh.ply I get the following error:

ANN: ERROR:--> Requesting more near neighbors than data points <-- ERROR

Can you please help me to solve this issue?

Thanks,

Gil

Hi,

Does your model have a very low face count?
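For context on that error: ANN aborts with this message when a k-nearest-neighbour search asks for more neighbours (k) than there are points in the search structure, so a tiny or empty point set can trigger it. The sketch below shows the usual defensive fix of clamping k. It uses a brute-force search instead of ANN’s API so it stays self-contained. KNearest is a hypothetical name, not TextureMesh’s ANNWrapper.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Point3 = std::array<double, 3>;

double SquaredDist(const Point3& a, const Point3& b) {
    double s = 0.0;
    for (int i = 0; i < 3; ++i) s += (a[i] - b[i]) * (a[i] - b[i]);
    return s;
}

// Brute-force k-nearest neighbours that never asks for more neighbours than
// there are points. Returns point indices sorted by distance.
std::vector<std::size_t> KNearest(const std::vector<Point3>& pts,
                                  const Point3& query, std::size_t k) {
    k = std::min(k, pts.size());  // the guard: clamp k to the data size
    std::vector<std::size_t> idx(pts.size());
    for (std::size_t i = 0; i < idx.size(); ++i) idx[i] = i;
    std::partial_sort(idx.begin(), idx.begin() + k, idx.end(),
                      [&](std::size_t a, std::size_t b) {
                          return SquaredDist(pts[a], query) < SquaredDist(pts[b], query);
                      });
    idx.resize(k);
    return idx;
}
```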

Hi,

Thanks for the quick reply.

I tried various models. The last model that I tried contained 63,892 faces and 31,968 vertices.

Thanks,

Gil

Hmm that’s quite odd. Can you package up your work directory and send it to me?

Thanks for your reply, I sent you the relevant files. Let me know if you encounter difficulties opening or downloading them.

Gil

Hello,

Did you get to solve this issue? If yes, then how because I am facing the exact problem while executing the binary. Thanks in advance! 🙂

Dear Nghia,

Thank you very much for the sharing.

My goal is to understand the algorithms. I’m comfortable with the code structure and with the concept of LBP. But in the details, I do not see where the color is assigned to the mesh points, especially in the AssignTexture function. Could you please help clarify my understanding?

By the way, could you provide the mathematical foundation of this algorithm?

Alvaro

Many Thanks

The LBP stuff is in LoopyBP.cpp, specifically function DATA_TYPE SmoothnessCost(int i, int j). It returns 0 if neighbour faces have the same camera assigned to them, else some constant.
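The SmoothnessCost rule described above is the classic Potts model. To show where it fits, here is a compact, generic min-sum loopy BP over a face-adjacency graph. It is a textbook sketch, not TextureMesh’s LoopyBP.cpp. The data costs (how suitable each camera is for each face) are left as an input because the post does not describe them. Smoothness and LoopyBP are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Potts smoothness: no cost if neighbouring faces use the same camera,
// a constant penalty otherwise.
double Smoothness(int li, int lj, double penalty) { return li == lj ? 0.0 : penalty; }

// Min-sum loopy belief propagation.
// data[f][l]: cost of texturing face f from camera l (all rows same length).
// nbrs[f]: faces sharing an edge with f (the relation must be symmetric).
std::vector<int> LoopyBP(const std::vector<std::vector<double>>& data,
                         const std::vector<std::vector<int>>& nbrs,
                         double penalty, int iterations) {
    const std::size_t n = data.size();
    const std::size_t L = n ? data[0].size() : 0;
    if (L == 0) return std::vector<int>(n, 0);

    // msg[f][k][l]: message from face f to its k-th neighbour about label l.
    std::vector<std::vector<std::vector<double>>> msg(n);
    for (std::size_t f = 0; f < n; ++f)
        msg[f].assign(nbrs[f].size(), std::vector<double>(L, 0.0));

    // Data cost of f plus all messages into f, except the one from `skip`.
    auto belief = [&](std::size_t f, int skip, std::size_t l) {
        double b = data[f][l];
        for (int g : nbrs[f]) {
            if (g == skip) continue;
            const std::vector<int>& back = nbrs[g];
            std::size_t k = std::find(back.begin(), back.end(), static_cast<int>(f)) - back.begin();
            b += msg[g][k][l];
        }
        return b;
    };

    for (int it = 0; it < iterations; ++it) {
        auto next = msg;
        for (std::size_t f = 0; f < n; ++f) {
            for (std::size_t k = 0; k < nbrs[f].size(); ++k) {
                const int g = nbrs[f][k];
                std::vector<double> h(L);
                for (std::size_t lf = 0; lf < L; ++lf) h[lf] = belief(f, g, lf);
                std::vector<double>& out = next[f][k];
                for (std::size_t lg = 0; lg < L; ++lg) {
                    double best = std::numeric_limits<double>::infinity();
                    for (std::size_t lf = 0; lf < L; ++lf)
                        best = std::min(best, h[lf] + Smoothness(static_cast<int>(lf),
                                                                 static_cast<int>(lg), penalty));
                    out[lg] = best;
                }
                // Subtract the minimum so messages stay bounded.
                const double m = *std::min_element(out.begin(), out.end());
                for (double& v : out) v -= m;
            }
        }
        msg.swap(next);
    }

    // Each face takes the label with the lowest final belief.
    std::vector<int> labels(n, 0);
    for (std::size_t f = 0; f < n; ++f) {
        std::size_t best = 0;
        for (std::size_t l = 1; l < L; ++l)
            if (belief(f, -1, l) < belief(f, -1, best)) best = l;
        labels[f] = static_cast<int>(best);
    }
    return labels;
}
```

With a penalty of 0, every face simply picks its cheapest camera. Raising the penalty trades per-face data cost for larger regions that share one camera, which is what removes the patchy illumination.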

I don’t have any mathematics associated with the algorithm. It was something I wrote off the top of my head.

Hi, Nghia. Back for another question. I just compiled the CUDA version of VisualSFM and it generates multiple .patch files. In your instructions, you say to build the “mesh.ply” and place it in the visualize folder. In my case, since there are multiple patch files (approximately 65 MB each), I merged all the separate point clouds into one large point cloud before meshing. Then I ran your software. While it does finish, the textures are corrupted when I try to view them in MeshLab. Would you mind explaining exactly how it needs to be set up with multiple patch files?

It looks for this pattern

models/option-%04d.patch

e.g.

models/option-0000.patch

models/option-0001.patch

models/option-0002.patch
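Here is a sketch of how that naming pattern expands. It assumes files are enumerated from 0000 upward until one is missing, so numbering must have no gaps (the actual stopping rule in TextureMesh isn’t stated here). PatchPath and FindPatchFiles are hypothetical names.

```cpp
#include <cassert>
#include <cstdio>
#include <fstream>
#include <string>
#include <vector>

// Builds "<pmvs_dir>/models/option-%04d.patch" for index i.
std::string PatchPath(const std::string& pmvs_dir, int i) {
    char name[32];
    std::snprintf(name, sizeof(name), "option-%04d.patch", i);
    return pmvs_dir + "/models/" + name;
}

// Collects option-0000.patch, option-0001.patch, ... stopping at the first
// index whose file cannot be opened.
std::vector<std::string> FindPatchFiles(const std::string& pmvs_dir) {
    std::vector<std::string> found;
    for (int i = 0;; ++i) {
        std::string path = PatchPath(pmvs_dir, i);
        if (!std::ifstream(path)) break;
        found.push_back(path);
    }
    return found;
}
```

Under this scheme, a merged point cloud or a differently named patch file simply isn’t picked up. Each .patch file has to keep its own option-NNNN name in the models folder.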

When I run your code on my dataset, for some reason I get the following.

I can confirm that `bundle.rd.out` contains data. Everything is generated with PMVS-2.

```
$ TextureMesh pmvs/
Loading pmvs//bundle.rd.out ...
cameras = 9
TextureMesh: main.cpp:134: int main(int, char**): Assertion `points.empty() == false' failed.
Aborted (core dumped)
```

Can you suggest how can I attempt to fix this.

PS. All this works on your provided castle dataset

It reads the points from models/option-0000.patch. Check that file.

Thanks, it works now. My file was actually called pmvs_options.txt. Just had to rename.

Hey, nice piece of software. I wanted to ask: is there a published paper about your method? Where can I learn the details of the Loopy Belief Propagation method?

I didn’t follow any paper; I thought it was an obvious idea at the time. I learnt some of the loopy belief propagation material from Coursera. Have a read of my loopy belief propagation tutorial for stereo vision for a quick intro.

ok, Thanks will follow your tutorial. And nice blog I must say..!

Hello, can ViewMesh rotate the object? WASD only zooms in and out and pans left and right. What should I do?

Late reply. Use WASD + the mouse to walk around the object.
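ViewMesh’s own camera class and key bindings are in its source. As a generic illustration, a first-person WASD + mouse camera usually looks like this: the mouse turns the view, and W/S move along it. FpsCamera and its conventions (Y up, yaw 0 looking down −Z) are assumptions, not ViewMesh’s code.

```cpp
#include <cassert>
#include <cmath>

// Minimal first-person camera: mouse changes yaw/pitch, W/S move along the
// viewing direction, A/D strafe sideways. Y is up; yaw 0 looks down -Z.
struct FpsCamera {
    double x = 0, y = 0, z = 0;  // position
    double yaw = 0, pitch = 0;   // radians

    void Look(double dx_mouse, double dy_mouse, double sensitivity) {
        yaw += dx_mouse * sensitivity;
        pitch -= dy_mouse * sensitivity;  // moving the mouse down looks down
        const double limit = 1.5;         // about 86 degrees, avoids flipping over
        if (pitch > limit) pitch = limit;
        if (pitch < -limit) pitch = -limit;
    }

    // forward > 0 is W, < 0 is S; right > 0 is D, < 0 is A.
    void Move(double forward, double right) {
        const double fx = std::sin(yaw) * std::cos(pitch);
        const double fy = std::sin(pitch);
        const double fz = -std::cos(yaw) * std::cos(pitch);
        const double rx = std::cos(yaw), rz = std::sin(yaw);  // horizontal right vector
        x += forward * fx + right * rx;
        y += forward * fy;
        z += forward * fz + right * rz;
    }
};
```

For rendering, the position and the point one unit along the forward vector can be passed to gluLookAt as the eye and centre, with (0, 1, 0) as the up vector.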

Hi Nghia Ho, when it is running, the following error occurs:

terminate called after throwing an instance of 'std::bad_alloc'

Why?

Most likely it ran out of memory.

Hi Nghia,

Can I check with you: have you worked on automating the process using meshlabserver? Also, how do you go about using the bundler.out file and the list files to do UV texture mapping?

I’ve tried using Meshlab server to automate some processing a long time ago but I don’t remember the details. So can’t help you here.
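The UV part of the question can be sketched from Bundler’s documented camera model. A world point X is transformed with P = R·X + t, projected with p = −P/P_z, and scaled by f·(1 + k1·|p|² + k2·|p|⁴). The result is a pixel position with the origin at the image centre and y pointing up (Bundler cameras look down −Z). Turning that into [0, 1] texture coordinates is a shift and a divide. BundlerCamera and ProjectToUV are hypothetical names. For PMVS’s bundle.rd.out the images are typically already undistorted, so k1 = k2 = 0 there.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major rotation

// One camera as stored in a Bundler .out file.
struct BundlerCamera {
    double f, k1, k2;  // focal length and radial distortion
    Mat3 R;            // rotation
    Vec3 t;            // translation
};

// Projects world point X into an image of size width x height and returns
// texture coordinates (u, v) in [0,1], with v measured from the bottom edge.
// Returns false if the point is behind the camera.
bool ProjectToUV(const BundlerCamera& c, const Vec3& X, int width, int height,
                 double& u, double& v) {
    Vec3 P{};
    for (int r = 0; r < 3; ++r)
        P[r] = c.R[r][0] * X[0] + c.R[r][1] * X[1] + c.R[r][2] * X[2] + c.t[r];
    if (P[2] >= 0) return false;  // Bundler cameras look down -Z
    const double px = -P[0] / P[2], py = -P[1] / P[2];
    const double r2 = px * px + py * py;
    const double scale = c.f * (1.0 + c.k1 * r2 + c.k2 * r2 * r2);
    const double x = scale * px, y = scale * py;  // origin at image centre, y up
    u = (x + width / 2.0) / width;
    v = (y + height / 2.0) / height;
    return true;
}
```

Applied to each vertex of a face, this gives the face’s texture coordinates in that camera’s image. A full texturing step would also need to check that the face is not occluded from that camera.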