Last Updated on July 26, 2013 by nghiaho12

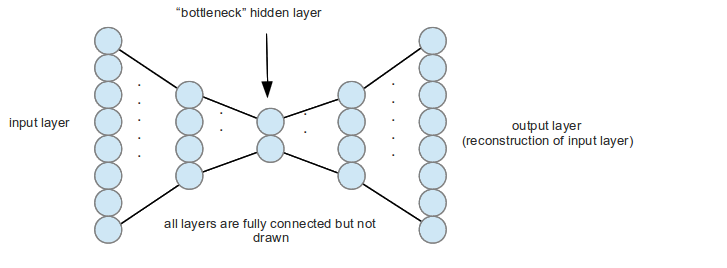

I’ve just finished the wonderful “Neural Networks for Machine Learning” course on Coursera and wanted to apply what I learnt (or what I think I learnt). One of the topics that I found fascinating was the autoencoder neural network. This is a type of neural network that can “compress” data, similar to PCA. An example of the network topology is shown below.

The network is fully connected and symmetrical, but I’m too lazy to draw all the connections. Given some input data, the network will try to reconstruct it as best as it can on the output. The ‘compression’ is controlled mainly by the middle bottleneck layer. The above example has 8 input neurons, which get squashed to 4 and then to 2. I will use the notation 8-4-2-4-8 to describe the above autoencoder network.
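To make the notation concrete, here is a minimal sketch that parses a topology string such as 8-4-2-4-8 and counts the connection weights of the fully connected network it describes. The function names `parse_topology` and `count_weights` are made up for this illustration and are not taken from the downloadable code. Biases are left out, and the weights of the two halves are assumed not to be shared.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Parse a topology string such as "8-4-2-4-8" into a list of layer sizes.
// (Hypothetical helper, for illustration only.)
std::vector<std::size_t> parse_topology(const std::string& spec) {
    std::vector<std::size_t> layers;
    std::istringstream in(spec);
    std::string token;
    while (std::getline(in, token, '-')) {
        layers.push_back(static_cast<std::size_t>(std::stoul(token)));
    }
    assert(layers.size() >= 2 && "need at least an input and an output layer");
    return layers;
}

// Count the connection weights of a fully connected network.
// Biases are excluded and no weights are assumed to be shared.
std::size_t count_weights(const std::vector<std::size_t>& layers) {
    std::size_t total = 0;
    for (std::size_t i = 0; i + 1 < layers.size(); ++i) {
        total += layers[i] * layers[i + 1];
    }
    return total;
}
```

For 8-4-2-4-8 this gives 8·4 + 4·2 + 2·4 + 4·8 = 80 weights, which is why drawing every connection gets tedious quickly.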

An autoencoder has the potential to do a better job than PCA at dimensionality reduction, especially for visualisation, since it is non-linear.

My autoencoder

I’ve implemented a simple autoencoder that uses RBMs (restricted Boltzmann machines) to initialise the network to sensible weights, and then refines them further using standard backpropagation. I also added common improvements like momentum and early termination to speed up training.
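As a rough illustration of the two training tweaks mentioned above, the sketch below runs gradient descent with classical momentum and stops once the monitored error has failed to improve for a number of epochs. This is not the code from the download: `train_with_momentum`, `TrainResult`, the `patience` and `min_improvement` parameters, and the exact stopping rule are all assumptions made for the example, and the `grad` and `error` callbacks stand in for whatever the real network computes.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct TrainResult {
    std::vector<double> weights;  // best weights seen during training
    double best_error;            // error at those weights
    std::size_t epochs_run;       // number of epochs actually executed
};

// Gradient descent with classical momentum and patience-based early termination.
// `grad` returns dE/dw and `error` returns the error to monitor (both hypothetical).
TrainResult train_with_momentum(
    std::vector<double> w,
    const std::function<std::vector<double>(const std::vector<double>&)>& grad,
    const std::function<double(const std::vector<double>&)>& error,
    double learning_rate, double momentum,
    std::size_t max_epochs, std::size_t patience, double min_improvement) {
    assert(momentum >= 0.0 && momentum < 1.0);
    std::vector<double> velocity(w.size(), 0.0);
    std::vector<double> best_w = w;
    double best_error = error(w);
    std::size_t stale = 0;
    std::size_t epoch = 0;

    for (; epoch < max_epochs && stale < patience; ++epoch) {
        const std::vector<double> g = grad(w);
        for (std::size_t i = 0; i < w.size(); ++i) {
            // The velocity keeps a decaying memory of past gradients.
            velocity[i] = momentum * velocity[i] - learning_rate * g[i];
            w[i] += velocity[i];
        }
        const double e = error(w);
        if (e < best_error - min_improvement) {
            best_error = e;
            best_w = w;
            stale = 0;
        } else {
            ++stale;  // no meaningful improvement this epoch
        }
    }
    return {best_w, best_error, epoch};
}
```

One thing to watch with this kind of stopping rule: momentum can make the error oscillate, so a small `patience` may stop training on a temporary bump. In practice the error would usually be measured on held-out data rather than the training set.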

I used the CIFAR-10 dataset and trained on 100 small images of dogs. The images are 32×32 colour images, which I converted to greyscale (giving a 1024-element vector per image). The network I train on is:

1024-256-64-8-64-256-1024
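For reference, here is a hedged sketch of how one record from the CIFAR-10 binary files could be turned into the 1024-element greyscale input vector. In that format each record is 1 label byte followed by 3072 pixel bytes (the red, green and blue planes, 1024 bytes each, row-major), and "dog" is class 5. The luma weights 0.299/0.587/0.114 are an assumption, as the actual conversion used may differ, and `record_to_grey` and `is_dog` are names invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kPixels = 32 * 32;               // 1024 pixels per channel
constexpr std::size_t kRecordBytes = 1 + 3 * kPixels;  // label + R, G, B planes
constexpr std::uint8_t kDogLabel = 5;                  // CIFAR-10 class index for "dog"

// Convert one CIFAR-10 binary record to a 1024-element greyscale vector in [0, 255].
// The luma weights are an assumption; other weightings would work equally well.
std::vector<double> record_to_grey(const std::vector<std::uint8_t>& record) {
    assert(record.size() == kRecordBytes);
    std::vector<double> grey(kPixels);
    for (std::size_t i = 0; i < kPixels; ++i) {
        const double r = record[1 + i];
        const double g = record[1 + kPixels + i];
        const double b = record[1 + 2 * kPixels + i];
        grey[i] = 0.299 * r + 0.587 * g + 0.114 * b;
    }
    return grey;
}

// True if the record's label byte marks it as a dog.
bool is_dog(const std::vector<std::uint8_t>& record) {
    return !record.empty() && record[0] == kDogLabel;
}
```

Reading the file in chunks of `kRecordBytes` and keeping the first 100 records for which `is_dog` returns true would be one way to build a training set like the one described here.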

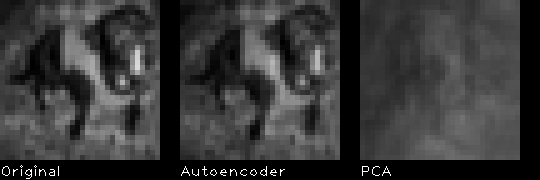

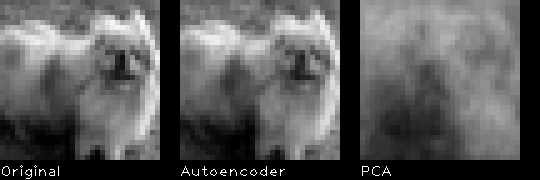

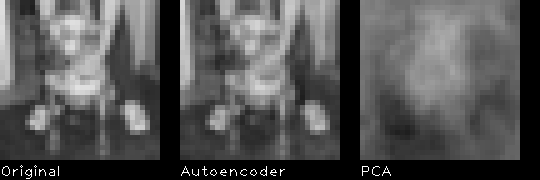

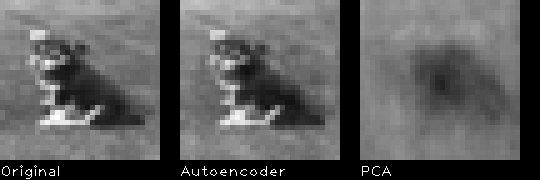

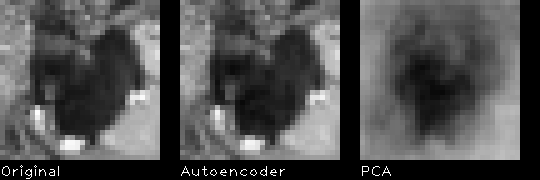

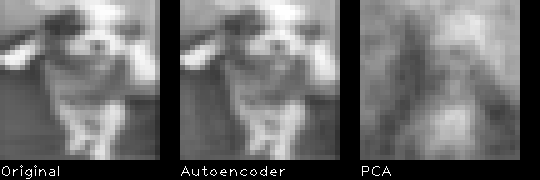

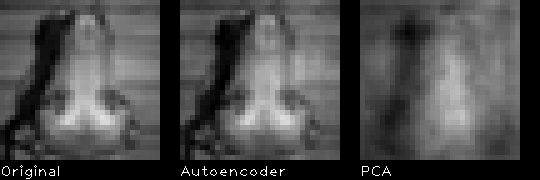

The input, output and bottleneck layers are linear, with the rest being sigmoid units. I expected this autoencoder to reconstruct the images better than PCA, because it has many more parameters. I’ll compare the results with PCA using the first 8 principal components.
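The mix of linear and sigmoid layers described above can be sketched as a plain forward pass. This is only an illustration using `std::vector`; the real implementation uses Armadillo, and the `Layer` struct, the presence of bias terms, and the helper names `forward_layer`, `forward` and `make_autoencoder` are assumptions of this sketch. The weights are zero-initialised here purely to keep the example short.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

enum class Activation { Linear, Sigmoid };

struct Layer {
    std::size_t inputs;
    std::size_t outputs;
    std::vector<double> weights;  // row-major, outputs x inputs
    std::vector<double> bias;     // one per output unit (assumed to exist)
    Activation activation;
};

// y = f(W x + b), where f is the identity or the logistic sigmoid.
std::vector<double> forward_layer(const Layer& layer, const std::vector<double>& x) {
    assert(x.size() == layer.inputs);
    assert(layer.weights.size() == layer.inputs * layer.outputs);
    assert(layer.bias.size() == layer.outputs);
    std::vector<double> y(layer.outputs);
    for (std::size_t o = 0; o < layer.outputs; ++o) {
        double sum = layer.bias[o];
        for (std::size_t i = 0; i < layer.inputs; ++i) {
            sum += layer.weights[o * layer.inputs + i] * x[i];
        }
        y[o] = (layer.activation == Activation::Sigmoid)
                   ? 1.0 / (1.0 + std::exp(-sum))
                   : sum;
    }
    return y;
}

// Run the input through every layer in turn.
std::vector<double> forward(const std::vector<Layer>& net, std::vector<double> x) {
    for (const Layer& layer : net) {
        x = forward_layer(layer, x);
    }
    return x;
}

// Build a zero-initialised network whose bottleneck and output layers are
// linear and whose other hidden layers are sigmoid.
std::vector<Layer> make_autoencoder(const std::vector<std::size_t>& sizes) {
    assert(sizes.size() >= 3 && sizes.size() % 2 == 1);
    const std::size_t bottleneck = sizes.size() / 2;  // index of the middle layer
    std::vector<Layer> net;
    for (std::size_t l = 0; l + 1 < sizes.size(); ++l) {
        const std::size_t out = l + 1;
        const bool linear = (out == bottleneck) || (out == sizes.size() - 1);
        net.push_back({sizes[l], sizes[out],
                       std::vector<double>(sizes[l] * sizes[out], 0.0),
                       std::vector<double>(sizes[out], 0.0),
                       linear ? Activation::Linear : Activation::Sigmoid});
    }
    return net;
}
```

Calling `make_autoencoder({1024, 256, 64, 8, 64, 256, 1024})` gives six weight layers. The third one (64 to 8) and the last one (256 to 1024) are linear, so the 8-dimensional code and the reconstructed pixels are not squashed into (0, 1).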

Results

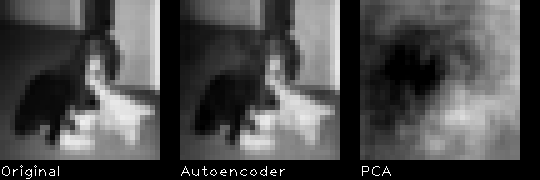

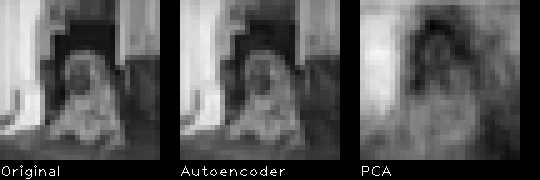

Here are 10 random results from the 100 images I trained on.

The autoencoder does indeed give a better reconstruction than PCA. This gives me confidence that my implementation is somewhat correct.

The RMSE (root mean squared error) for the autoencoder is 9.298, whereas for PCA it is 30.716. Pixel values are in the range [0, 255].
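For clarity, this is the kind of calculation meant by RMSE, sketched for a single pair of pixel vectors. Whether the figures above were averaged per image or pooled over all pixels of all images is not stated here, so treat the helper `rmse` below as an assumption about the general formula rather than the exact code used.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Root mean squared error between an original and a reconstructed pixel vector.
double rmse(const std::vector<double>& original,
            const std::vector<double>& reconstructed) {
    assert(original.size() == reconstructed.size() && !original.empty());
    double sum_sq = 0.0;
    for (std::size_t i = 0; i < original.size(); ++i) {
        const double d = original[i] - reconstructed[i];
        sum_sq += d * d;
    }
    return std::sqrt(sum_sq / static_cast<double>(original.size()));
}
```

Because the pixels are in [0, 255], the RMSE is in the same units, so an RMSE of about 9 means the reconstruction is off by roughly 9 grey levels in the root-mean-square sense.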

All the parameters used can be found in the code.

Download

You can download the code here

Last update: 27/07/2013

You’ll need the following libraries installed

- Armadillo (http://arma.sourceforge.net)

- OpenBLAS (or any other BLAS alternative, but you’ll need to edit the Makefile/Codeblocks project)

- OpenCV (for display)

On Ubuntu 12.10 I use the OpenBLAS package in the repo. Use the latest Armadillo from the website if the Ubuntu one doesn’t work, as I use some newer functions that were introduced recently. I recommend using OpenBLAS over Atlas with Armadillo on Ubuntu 12.10, because multi-core support works straight out of the box. This provides a big speed-up.

You’ll also need the dataset http://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz

Edit main.cpp and change DATASET_FILE to point to your CIFAR dataset path. Compile via make or using CodeBlocks.

All parameter variables can be found in main.cpp near the top of the file.