Last Updated on July 30, 2020 by nghiaho12

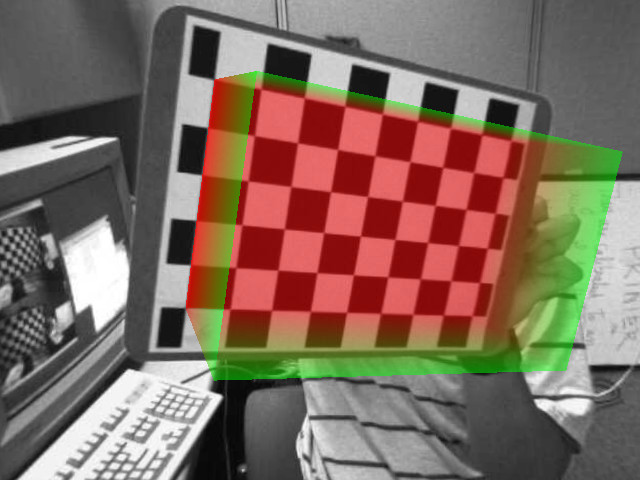

In this post I will show how to incorporate OpenCV’s camera calibration results into OpenGL for a simple augmented reality application like the one shown above. This post assumes you have some familiarity with calibrating a camera and basic knowledge of modern OpenGL.

Background

A typical OpenCV camera calibration estimates the camera’s intrinsics:

- focal length (fx, fy)

- center (cx, cy)

- skew (usually 0)

- distortion (number of parameters depends on distortion model)

It also returns the checkerboard poses (rotation, translation) relative to the camera. With the first three intrinsic quantities we can create a camera matrix that projects a homogeneous 3D point to a 2D image point.
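As a sketch, assuming a 4x4 layout that matches the `mat4` uniforms used in the vertex shader and writing the skew as s (usually 0), the projection of a point (x, y, z) can be written as:

```latex
\begin{bmatrix} x' \\ y' \\ z' \\ 1 \end{bmatrix}
=
\begin{bmatrix}
f_x & s   & c_x & 0 \\
0   & f_y & c_y & 0 \\
0   & 0   & 1   & 0 \\
0   & 0   & 0   & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
```

Under this layout x’ = fx·x + s·y + cx·z, y’ = fy·y + cy·z and z’ = z, so the depth passes through unchanged.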

The 2D homogeneous image point is obtained by dividing x’ and y’ by z’ (the depth), giving [x’/z’, y’/z’, z’, 1]. We leave z’ untouched to preserve depth information, which is important for rendering.

The checkerboard pose can be expressed as a matrix, where r is the rotation and t is the translation
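In block form, assuming r is the 3x3 rotation matrix (OpenCV returns a rotation vector, which cv::Rodrigues converts to a matrix) and t is the 3x1 translation vector, the pose is the usual 4x4 rigid transform. The name "model" is borrowed from the shader uniform:

```latex
\text{model} =
\begin{bmatrix}
r_{11} & r_{12} & r_{13} & t_x \\
r_{21} & r_{22} & r_{23} & t_y \\
r_{31} & r_{32} & r_{33} & t_z \\
0      & 0      & 0      & 1
\end{bmatrix}
```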

Combining the camera and model matrices gives a general way of projecting 3D points to 2D. Here X can be a matrix of points, with one homogeneous point per column.
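The combined projection, x’ = camera · model · X followed by the divide by z’, can be sketched in plain C++ without any libraries. The type and function names here (`Mat4`, `makeCamera`, `makeModel`, `projectToImage`) are hypothetical helpers for illustration, not part of OpenCV, OpenGL or the linked repository, and the matrices are stored row-major for readability.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper types: row-major matrices and column vectors.
using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;
using Mat4 = std::array<std::array<double, 4>, 4>;
using Vec4 = std::array<double, 4>;

// 4x4 camera matrix built from the intrinsics; skew defaults to 0.
Mat4 makeCamera(double fx, double fy, double cx, double cy, double skew = 0.0) {
    return {{{fx, skew, cx, 0.0},
             {0.0, fy, cy, 0.0},
             {0.0, 0.0, 1.0, 0.0},
             {0.0, 0.0, 0.0, 1.0}}};
}

// 4x4 pose matrix [r t; 0 0 0 1] from a rotation matrix and a translation.
Mat4 makeModel(const Mat3& r, const Vec3& t) {
    Mat4 m{};  // zero-initialised
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) m[i][j] = r[i][j];
        m[i][3] = t[i];
    }
    m[3][3] = 1.0;
    return m;
}

// Matrix-vector product.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 out{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += m[r][c] * v[c];
    return out;
}

// camera * model * X, then divide x' and y' by z'. z' is kept as the depth.
Vec4 projectToImage(const Mat4& camera, const Mat4& model, const Vec4& X) {
    Vec4 v = mul(camera, mul(model, X));
    assert(v[2] != 0.0);  // a point at zero depth cannot be projected
    v[0] /= v[2];
    v[1] /= v[2];
    return v;
}
```

With made-up intrinsics fx = fy = 500, cx = 320, cy = 240, an identity rotation and t = (0, 0, 2), the point (0.5, -0.25, 0) on the board lands at pixel (445, 177.5) with depth 2.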

The conversion to a 2D image point is the same as before: divide each x’ and y’ by its respective z’. The last step is to multiply by an OpenGL orthographic projection matrix, which maps 2D image points to normalized device coordinates (NDC). NDC is a left-handed viewing cube normalized to [-1, 1] in x, y and z. I won’t go into the details of this matrix, but if you’re curious see http://www.songho.ca/opengl/gl_projectionmatrix.html . To generate this matrix you can use glm::ortho from the GLM library or roll your own using the definition of glOrtho.
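For those rolling their own, here is a minimal sketch of the glOrtho matrix in plain C++. The helper names (`ortho`, `toNdc`) are hypothetical, and the matrix is stored row-major for readability, whereas GLM and OpenGL store matrices column-major. The choice of arguments in the note after the code is an assumption for illustration, not something taken from the linked repository.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Row-major orthographic projection following the glOrtho definition.
Mat4 ortho(double left, double right, double bottom, double top,
           double zNear, double zFar) {
    assert(right != left && top != bottom && zFar != zNear);
    Mat4 m{};  // zero-initialised
    m[0][0] = 2.0 / (right - left);
    m[1][1] = 2.0 / (top - bottom);
    m[2][2] = -2.0 / (zFar - zNear);
    m[0][3] = -(right + left) / (right - left);
    m[1][3] = -(top + bottom) / (top - bottom);
    m[2][3] = -(zFar + zNear) / (zFar - zNear);
    m[3][3] = 1.0;
    return m;
}

// Apply the matrix to (x, y, z, 1). w stays 1, so no further divide is needed.
std::array<double, 3> toNdc(const Mat4& m, double x, double y, double z) {
    std::array<double, 3> out{};
    for (int r = 0; r < 3; ++r)
        out[r] = m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3];
    return out;
}
```

glOrtho expects the visible range along negative z, while the projected depth z’ here is positive in front of the camera. One way to reconcile the two, as an assumption, is to pass negated clip distances: for a 640x480 image, `ortho(0, 640, 480, 0, -0.1, -100)` maps pixel (0, 0) at depth 0.1 to NDC (-1, 1, -1) and pixel (640, 480) at depth 100 to NDC (1, -1, 1), so the image's top-left corner ends up at the top-left of the viewport.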

Putting it all together, here’s a simple vertex shader to demonstrate what we’ve done so far

    #version 330 core

    layout(location = 0) in vec3 vertexPosition;

    uniform mat4 projection;
    uniform mat4 camera;
    uniform mat4 model;

    void main()
    {
        // Project to 2D
        vec4 v = camera * model * vec4(vertexPosition, 1);

        // NOTE: v.z is left untouched to maintain depth information!
        v.xy /= v.z;

        // Project to NDC
        gl_Position = projection * v;
    }

Projecting your 3D model onto the 2D image with this method assumes the image has already been undistorted, so you can run OpenCV’s cv::undistort on the image before loading it as a texture. Alternatively, you could write a shader to undistort the image, though I have not looked into this.

Code

https://github.com/nghiaho12/OpenCV_camera_in_OpenGL

If you have any questions leave a comment.

Do you have the above code in python?

I don’t

Just a side note: The skew parameter is currently not accounted for in the OpenCV implementation. They only use fx, fy, cx, and cy directly and do not perform a full matrix multiplication.