A friend of mine from PunkOffice (punkoffice.com) recently hit me up and asked if I knew how to register a bunch of point clouds from his shiny Apply iPhone 11 that comes with a TrueDepth camera. He was interested in using it as a portable 3D scanner. Why yes I do! At least I did over a decade ago for my PhD. Back then I used the popular ICP (Iterative Closest Point) algorithm to do point-to-point registration. It did the job. But I wasn’t in the mood to reinvent the wheel again. So instead I searched for existing Python libraries that would get the job done with the least amount of effort. In my search I came across the Open3D library (http://open3d.org) that comes with Python bindings. This library includes file loaders for various 3D formats, a handful of registration methods, and a visualizer. Perfect!

What was meant to be a quick proof of concept eventually evolved into a mini R&D project as I sought for better registration accuracy!

The code is at the bottom of the page.

Problem we’re trying to solve

Essentially we’re trying to solve for the camera poses, that is its orientation and translation in 3D space. If we know the camera poses then creating a 3D point cloud from the individual captures is easy, we just project the 3D point out at that pose and we’re done. To do this we need to know the TruthDepth camera’s calibration and a dataset to work with.

Apple TrueDepth camera calibration

The first step was to gather any useful information about the TruthDepth camera. Apple has an API that allows you to query calibrationn data of the camera. I told my friend what I was looking for and he came back with the following

- raw 640×480 depth maps (16bit float)

- raw 640x480x3 RGB images (8bit)

- camera intrinsics for 12MP camera

- lens distortion lookup (vector of 42 floats)

- inverse lens distortion lookup (vector of 42 floats)

Some intrinsic values

- image width: 4032

- image height: 3024

- fx: 2739.79 (focal)

- fy: 2739.79 (focal)

- cx: 2029.73 (center x)

- cy: 1512.20 (center y)

This is fantastic, because it means I don’t have to do a checkerboard calibration to learn the intrinsics. Everything we need is provided by the API. Sweet!

The camera intrinsics is for a 12MP image, but we’re given a 640×480 image. So what do we do? The 640×480 is simply a scaled version of the 12MP image, meaning we can scale down the intrinsics as well. The 12MP image aspect ratio is 4032/3204 = 1.3333, which is identical to 640/480 = 1.3333. The scaling factor is 640/4032 = 0.15873. So we can scale [fx, fy, cx, cy] by this value. This gives the effective intrinisc as

- image width: 640

- image height: 480

- fx: 434.89

- fy: 434.89

- cx: 322.18

- cy: 240.03

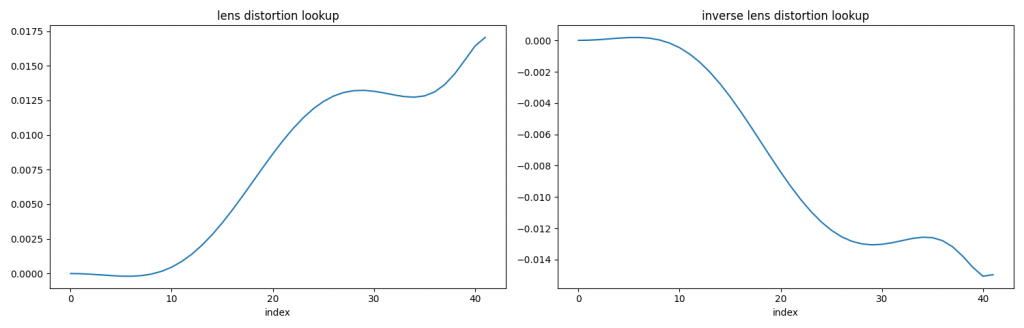

Now the distortion is interesting. This is not the usual radial distortion polynomial paramterization. Instead it’s a lookup table to determine how much scaling to apply to the radius. This is the first time I’ve come across this kind of expression for distortion. A plot of the distortion lookup is shown below

Here’s some pseudo code to convert an undistorted point to distorted point

def undistort_to_distort(x, y):

xp = x - cx

yp = y - cy

radius = sqrt(xp*xp + yp*yp)

normalized_radius = radius / max_radius

idx = normalized_radius*len(inv_lens_distortion_lookup)

scale = 1 + interpolate(inv_lens_distortion_lookup, idx)

x_distorted = xp*scale + cx

y_distorted = yp*scale + cy

return x_distorted, y_distortedidx will be a float and you should interpolate for the correct value, rather than round to the nearest integer. I used linear interpolation. To go from distorted to undistorted switch inv_lens_distortion_lookup with lens_distortion_lookup.

With a calibrated camera, we can project a 2D point to 3D as follows.

def project_to_3d(x, y, depth):

xp = (x - xc)/fx * depth

yp = (y - yc)/fy * depth

zp = depth

return [xp, yp, zp]I found these links helpful when writing up this section.

- https://frost-lee.github.io/rgbd-iphone/

- https://developer.apple.com/documentation/avfoundation/avcameracalibrationdata

Dataset

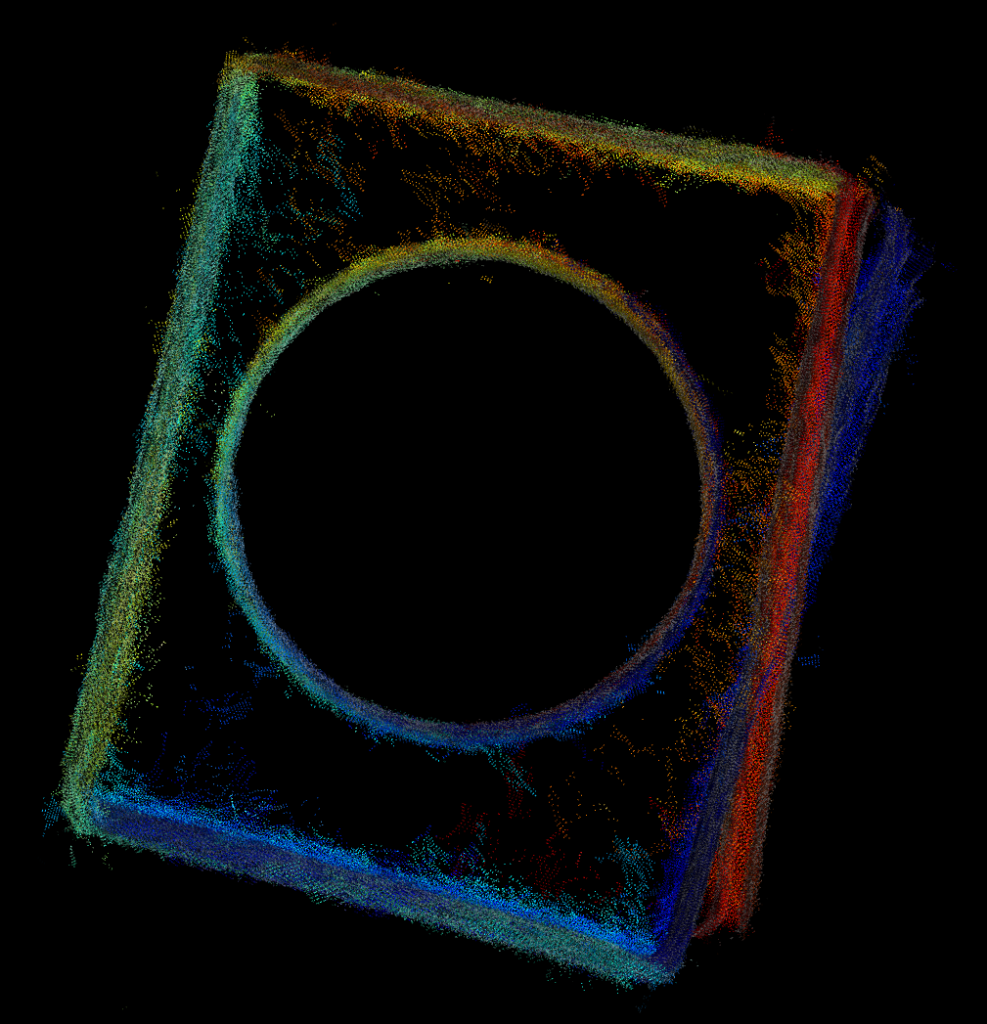

The dataset I got from my friend consists of 40 captures, which includes both RGB images and depth maps as shown below. I’m showing a gray image to save file space and I segmented the depth map to isolate the objects so you can see it better. There’s a box with a tin can on top, which are two simple shapes that will make it easy to compare results later on. The camera does a 360 or so degree pan around the object, which we’ll exploit later on as well.

Method #1 – Sequential ICP

The simplest method I could think of is sequential ICP. In this method the current point cloud is registered to the previous. I used Open3D’s point-to-plane variant of ICP, which claims better performance than point-to-point ICP. ICP requires you to provide an initial guess of the transform between the point clouds. Since I don’t have any extra information I used the identity matrix, basically assuming no movement between the captures, which of course isn’t true but it’s a good enough guess.

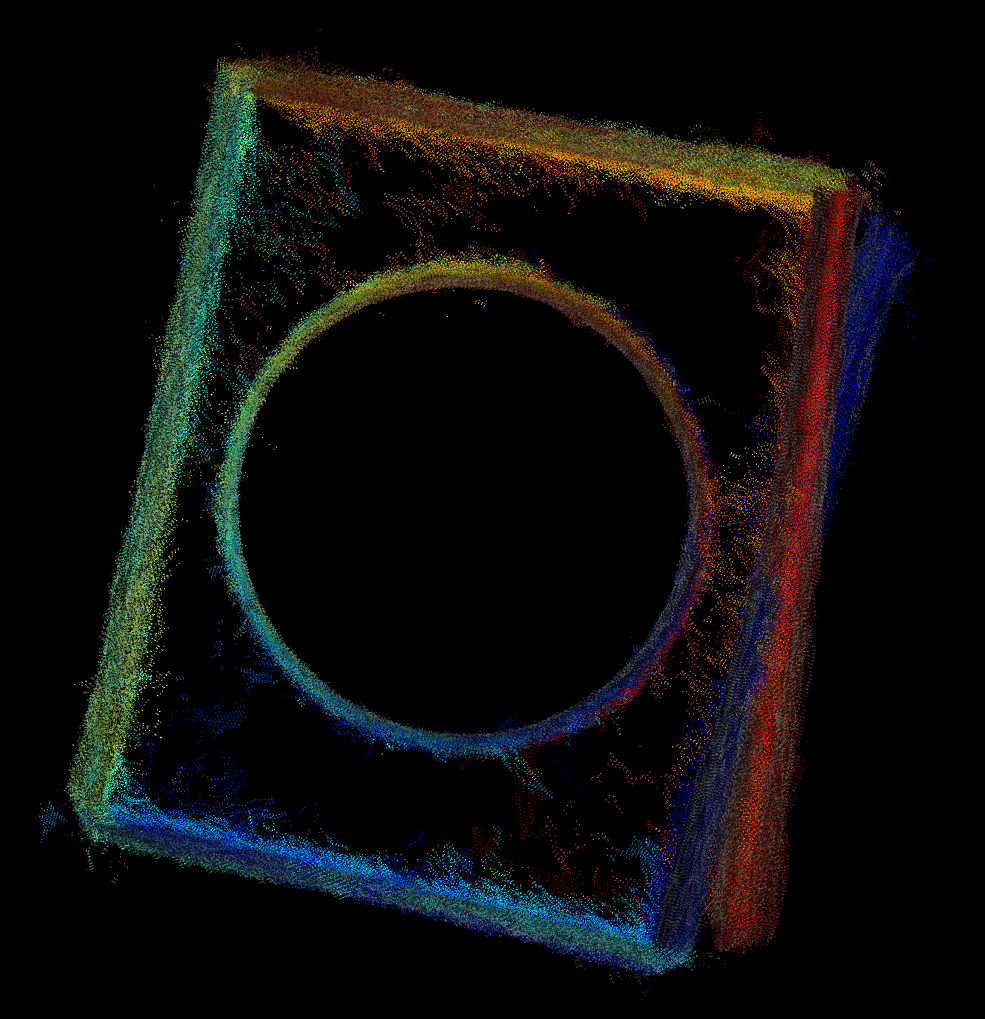

Below shows a top down view of the box and can. You can some misalignment of the box on the right side. This will be our baseline.

Method #2 – Sequential vision based

There are two drawbacks with the sequential ICP approach. First, using 3D points alone can not resolve geometric ambiguities. For example, if we were to capture a sequence of a flat wall, ICP would struggle to find the correct transform. Second, ICP needs an initial transform to boot strap the process.

To address these shortcomings ‘ll be using both the image and depth data. The image data is richer for tracking visual features. Here is an outline the approach

1. Track 2D SIFT features from current to previous frame

2. Use the fundmanmetal matrix to filter out bad matches

3. Project the remaining 2D points to 3D

4. Find the rigid transform between current and previous 3D points

We now have a good estimate for the transform between camera poses, rather than assume identity transform like with ICP!

The advantage estimating the transform in 3D is it’s much easier and possibly than traditional 2D approaches. The rigid transform can be calculated using a closed form solution. The traditional 2D approach requires calculating the essential matrix, estimating 3D points, solving PnP, … And for a monocular camera it’s even trickier!

Here’s the result of the sequential vision based approach

Hmm no noticeable improvement. Sad face. Still the same problem. Fundamentally, our simplistic approach of registering current to previous introduces a small amount of drift over each capture.

Oh fun fact, the SIFT patent expired some time early 2020! It got moved out of OpenCV’s non-free module and is now back in the features2d module.

Method #3 – Sequential vision based with loop closure detection

To reduce the effect of drift and improve registration we can exploit the knowledge that the last capture’s pose is similar to one of the earlier capture, as the camera loops back around the object. Once we find the loop closure match and the transform between them we can formulate this as a pose graph, plus feature matching constraints, and apply an optimization. Pose graph optimization is commonly used in the robotics community to solve for SLAM (simultaneous localization and mapping) type of problems. There are 3 main ingredients for this optimization

1. define the parameters to be optimized

2. define the cost function (constraint)

3. provide good initial estimates of the parameters

As mentioned earlier, we’re interested in solving for the camera poses (rotation, translation). These are the parameters we’re optimizing for. I chose to represent rotation as a Quaternion (4D vector) and translation as a 3D vector (xyz). So a total of 7 parameters for each camera. It’s also common to represent the rotation using an axis-angle parameterization, such that it’s a 3D vector. This will be faster to optimize but there are singularities you have to watch out for. See http://www.euclideanspace.com/maths/geometry/rotations/conversions/matrixToAngle for more insight. The Quaternion doesn’t have this problem, but requires the solver to make sure the vector is unit length to be a valid rotation vector.

For the cost function, given the current parameters, I want to minimize the Euclidean distance between the matching 3D points. Here’s a pseudo code for the cost function

def cost_func(rot1, trans1, rot2, trans2, pts1, pts2):

# rot1, rot2 - quaternion

# trans1, trans2 - 3D translation

# pts1, pts2 - matching 3D point

pts1_ = rotate_point(rot1, pts1) + trans1

pts2_ = rotate_point(rot2, pts2) + trans2

residuals[0] = pts1_.x - pts2_.x

residuals[1] = pts1_.y - pts2_.y

residuals[2] = pts1_.z - pts2_.z

return residualsWe add a cost function for every 3D match. So this includes all the current to previous matches plus the loop closure match. The optimization algorithm will adjust the parameters iteratively to try and make the loss function approach zero. A typical loss function is loss = sum(residuals^2), this is the familiar least squares.

Pose graph optimization uses a non-linear optimization method, which requires the user to provide a good estimate of the parameters else it will converge to the wrong solution. We will use approach #2 to initialize our guess for the camera poses.

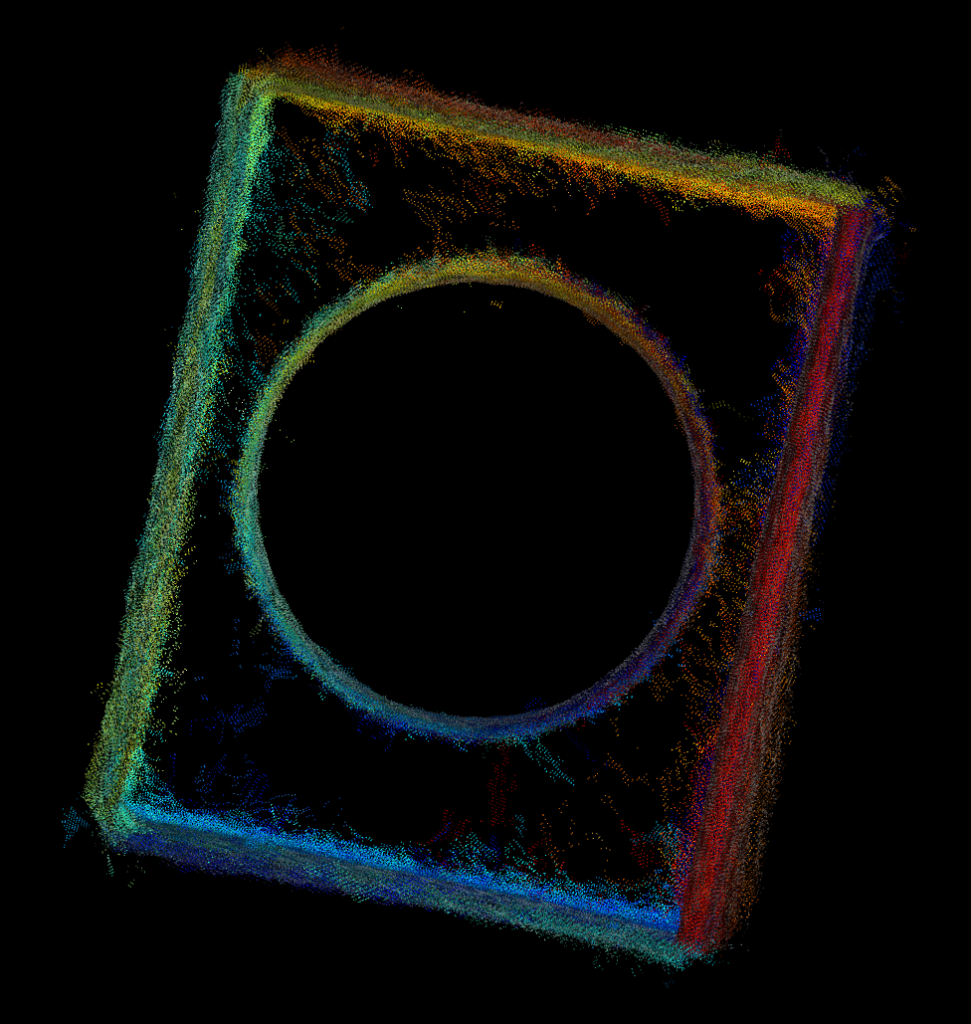

I used Ceres Solver to solve the pose graph. The results are shown below.

All the box edges line up, yay!

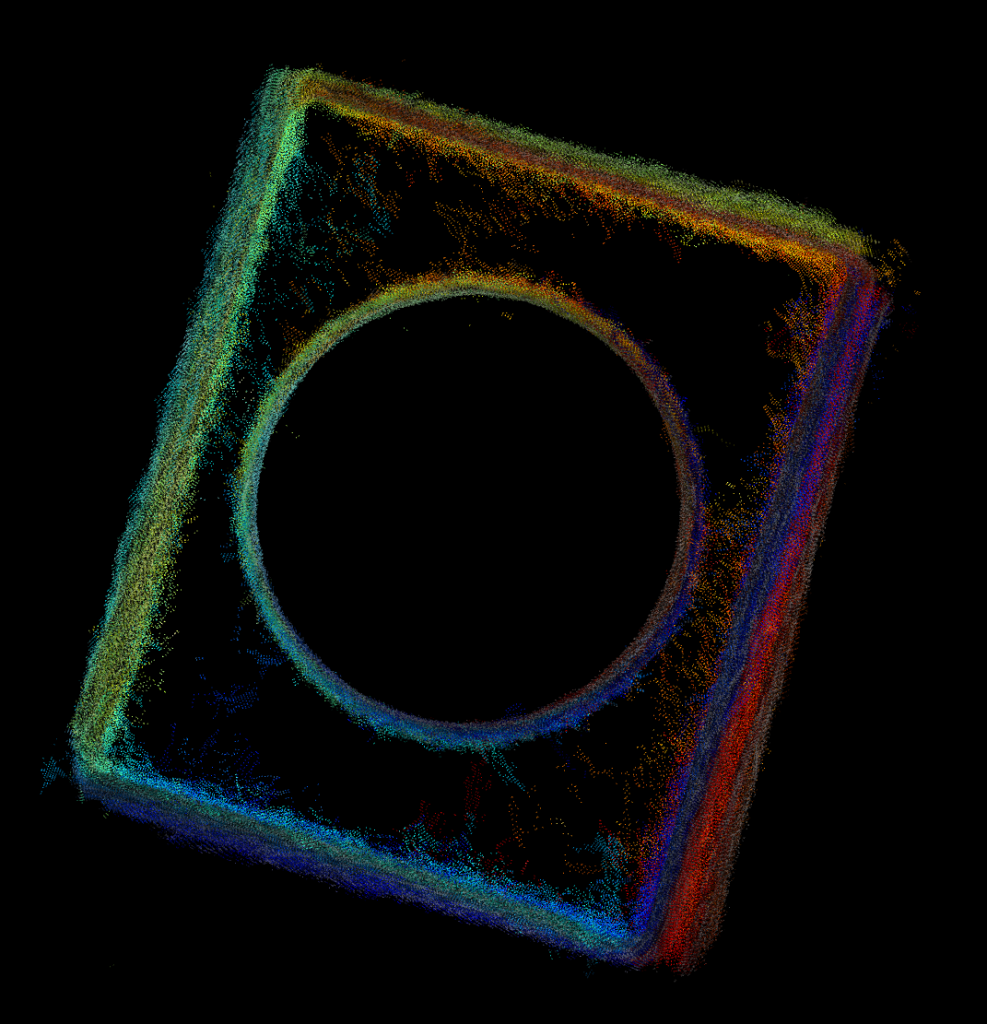

Method #4 – Sequential ICP with loop closure detection

Let’s revisit sequential ICP and see if we can add some pose graph optimization on top. Turns out Open3D has a built in pose graph optimizer with some sample code! Here’s what we get.

Better then vanilla sequential ICP, but not quite as good as the vision based method. Might just be my choice in parameters.

More improvements?

We’ve seen how adding loop closure to the optimization made a significant improvement in the registration accuracy. We can take this idea further and perform ALL possible matches between the captures. This can get pretty expensive as it scales quadratically with the number of images, but do-able. In theory, this should improve the registration further. But in practice you have to be very careful. The more cost functions/constraints we add the higher the chance of introducing an outlier (bad matches) into the optimization. Outliers are not handled by the simple least square loss function and can mess things up! One way to handle outliers is to use a different loss function that is more robust (eg. Huber or Tukey).

You can take the optimization further and optimize for the tracked 3D points and camera intrinsics as well. This is basically bundle adjustment found in the structure from motion and photogrammetry literature. But even with perfect pose your point cloud is still limited by the accuracy of the depth map.

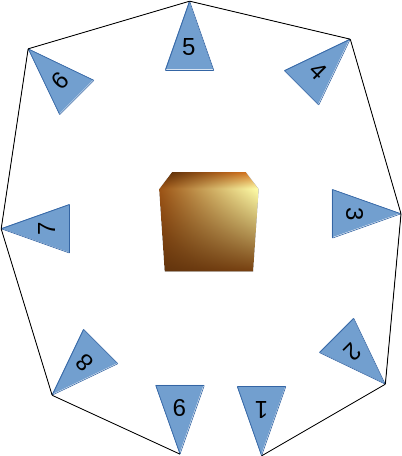

Summary of matching strategies

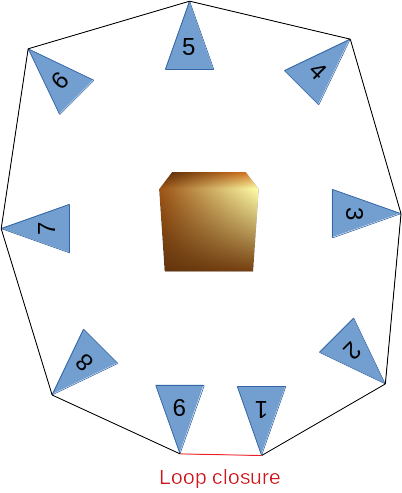

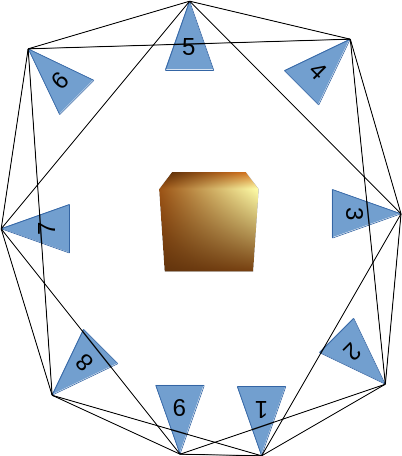

Here’s a graphical summary of the matching strategies mentioned so far. The camera poses are represented by the blue triangles and the object of interest is represented by the cube. The lines connecting the camera poses represents a valid match.

Sequential matching

Fast and simple but prone to drift the longer the sequence. Matching from capture to capture is pretty reliable if the motion is small between them. The vision based should be more reliable than ICP in practise as it doesn’t suffer from geometric ambiguities. The downside is the object needs to be fairly textured.

Vision based sequential matching with loop closure detection

An extension of sequential matching by explicitly detecting where the last capture loops back with an earlier capture. Effectively eliminates drift. It involves setting up a non-linear optimization problem. This can be expensive if you have a lot of parameters to optimize (lots of matched features). The loop closure detection strategy can be simple if you know how your data is collected. Just test the last capture with a handful of the earlier capture (like 10), and see which one has the most features matched. If your loop closure detection is incorrect (wrong matching pair of capture) then the optimization will fail to converge to the correct solution.

Finding all matches

This is a brute force method that works even if the captures are not ordered in any particular way. It’s the most expensive to perform out of the three matching strategies but can potentially produce the best result. Care must be taken to eliminate outliers. Although this applies to all method, it is more so here because there’s a greater chance of introducing them into the optimization.

Alternative approaches from Open3D

Open3D has a color based ICP algorithm (http://www.open3d.org/docs/release/tutorial/Advanced/colored_pointcloud_registration.html) that I have yet to try out but looks interesting.

There’s also a 3D feature based registration method that doesn’t require an initial transform for ICP but I haven’t had much luck with it.

http://www.open3d.org/docs/release/tutorial/Advanced/global_registration.html

Meshing

Open3D also includes some meshing functions that make it easy to turn the raw point cloud to a triangulated mesh. Here’s an example using Poisson surface reconstruction. I also filter out noise by only keeping the largest mesh, that being the box and tin can. Here it is below. It even interpolated the hollow top of the tin can. This isn’t how it is in real life but it looks kinda cool!

Final thoughts

Depending on your need and requirement you should always advantage of any knowledge of how the data was collected. I’ve shown a simple scenario where the user pans around an object. This allows for some simple and robust heuristics to be implemented. But if your aiming for something more generic, where there’s little restriction on the data collecting process, then you’re going to have to write more code to handle all these cases and make sure they’re robust.