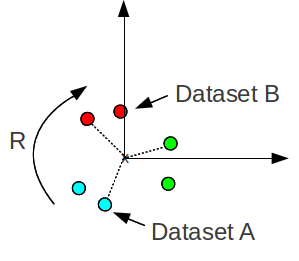

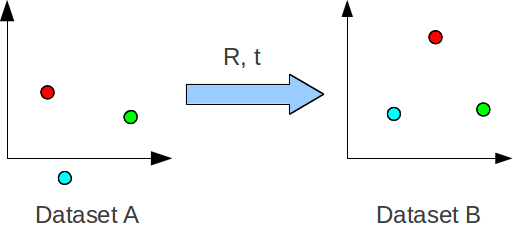

Finding the optimal/best rotation and translation between two sets of corresponding 3D point data, so that they are aligned/registered, is a common problem I come across. An illustration of the problem is shown below for the simplest case of 3 corresponding points (the minimum required points to solve).

The corresponding points have the same colour, R is the rotation and t is the translation. We want to find the best rotation and translation that will align the points in dataset A to dataset B. Here, ‘optimal’ or ‘best’ is in terms of least square errors. This transformation is sometimes called the Euclidean or Rigid transform, because it preserves the shape and size. This is in contrast to an affine transform, which includes scaling and shearing.

This problem arises especially in tasks like 3D point cloud data registration, where the data is obtained from hardware like a 3D laser scanner or the popular Kinect device.

Solution overview

The solution presented will work with both noise free and noisy data. A minimum of 3 unique points is required for a unique solution.

For noise free data it will solve for

If the data is noisy it will minimize the least squares error

Where A and B are sets of 3D points with known correspondences. R is a 3×3 rotation matrix and t is the translation vector (technically matrix Nx3).

Finding the optimal rigid transformation matrix can be broken down into the following steps:

- Find the centroids of both dataset

- Bring both dataset to the origin then find the optimal rotation R

- Find the translation t

Finding the centroids

This bit is easy, the centroids are just the average of the points and can be calculated as follows:

and

are 3×1 vectors eg.

Finding the optimal rotation

There are a few ways of finding optimal rotations between points. The easiest way I found is using Singular Value Decomposition (SVD), because it’s a function that is widely available in many programming languages (Matlab, Octave, C using LAPACK, C++ using OpenCV …). SVD is like this powerful magical wand in linear algebra for solving all sorts of numerical problems, but tragically wasn’t taught when I was at uni. I won’t go into details on how it works but rather how to use it. You only need to know that the SVD will decompose/factorise a matrix (call it E), into 3 other matrices, such that:

If E is a square matrix then U, S and V are the same size as well. We’re only interested in square matrices for this problem so I won’t go into detail about rectangular ones.

To find the optimal rotation we first re-centre both dataset so that both centroids are at the origin, like shown below.

This removes the translation component, leaving on the rotation to deal with. The next step involves accumulating a matrix, called H, and using SVD to find the rotation as follows:

H is the familiar covariance matrix. is an operation that subtracts each column in

by

.

At this point you may be thinking, “what the, that easy?!”, and indeed you would be right. One thing to be careful is that you calculate H correctly. It should end up being a 3×3 matrix, not an NxN matrix. Pay close attention to the transpose symbol. It’s doing a multiplication between 2 matrices where the dimensions effectively are, 3xN and Nx3, respectively. The ordering of the multiplication is also important, doing it the other way will find a rotation from B to A instead.

Special reflection case

There’s a special case when finding the rotation matrix that you have to take care of. Sometimes the SVD will return a ‘reflection’ matrix, which is numerically correct but is actually nonsense in real life. This is addressed by checking the determinant of R (from SVD above) and seeing if it’s negative (-1). If it is then the 3rd column of V is multiplied by -1.

if determinant(R) < 0

[U,S,V] = svd(R)

multiply 3rd column of V by -1

R = V * transpose(U)

end if

A big thank you goes to Klass Jan Russcher for the solution.

Finding the translation t

Now that we have solved for R we can solve for t. Plugging the centroids into the equation mentioned at the start we get

And we’re done!

Note on usage

The solution presented can be used on any size dataset as long as there are at least 3 points. When there are more than 3 points a least square solution is obtained.

Code

https://github.com/nghiaho12/rigid_transform_3D

Reference

- https://en.wikipedia.org/wiki/Kabsch_algorithm

-

“Least-Squares Fitting of Two 3-D Point Sets”, Arun, K. S. and Huang, T. S. and Blostein, S. D, IEEE Transactions on Pattern Analysis and Machine Intelligence, Volume 9 Issue 5, May 1987

Great tutorial, you did it very easy to understand 😉 I only have a question. Why do we need to multiply t_new*R_new*C_A instead of t_new*R_new only? Thanks a lot!

Thanks for the feedback. To answer your question, the reason is because the rotation, R, calculated earlier assumes the points centroid to be at (0,0,0), hence the extra C_A (which brings points A centroid to the origin). If you read the matrix multiplication from right to left, it is doing the following steps in this order

1. translate point A’s centroid to the origin

2. rotate point A

3. translate point A’s centroid to point B’s centroid

this seems almost correct, however i think that t_new should actually just be the affine matrix that shift by the centroid of cloud B, not the difference of the centroids.

Thanks for spotting the error out! I had a look at the Octave script I wrote and turns out I implemented it correctly but described it in incorrectly in the post 🙂

could you please elaborate how to find affine matrix and why nit subtarct. TIA

This is outside the scope of this post. Instead I refer you to https://docs.opencv.org/3.4/d4/d61/tutorial_warp_affine.html.

I have reconstructed a scene using a collection of images. The original scene has 100 points and the reconstructed scene has 100 points. I tried finding the rotation between the two scenes but the function did not work. It returns the exact same points?

How are you implementing it? Can I see the points in a text file?

Sorry I made a mistake in my test code! It’s working perfectly fine! Thanks for this, saved a lot of time for me (“,)

Glad it worked out !

how did you fix the problem ?

Hi, could you please give me the relevant references for this function so I can go through it? Thanks

Check the paper ‘A Method for Registration of 3-D Shapes’, by Besl and McKay, 1992.

Or a more a more understandable paper find under “Least-Squares Fitting of Two 3-D Point Sets” by K.S Arun et. alii (1987 )

Here tree algorithms for matching two point clouds are explained: SVD, Quaternion as well as an Iterative method.

THANKX’S

I took A and B as same point set and try to find out the transformation matrix. I am expecting transformation matrix like T = [1 0 0 0; 0 1 0 0; 0 0 1 0; 0 0 0 1].

%Code

A = [1 2 3; 4 5 6; 7 8 9];

B = A;

T = rigid_transform_3D(A, B);

I got the transformation matrix like:

T=[-0.3333 0.6667 0.6667 -2.0000

0.6667 -0.3333 0.6667 -0.0000

0.6667 0.6667 -0.3333 2.0000

0 0 0 1.0000]

Is it correct or I am getting something wrong?

Hi,

If you do T*[A ones(3,1)]’, you will get B, which is mathematically correct. But your input is degenerate. If you do rank(A) you’ll get 2 (instead of 3), so there are infinite solutions.

I want the transformation matrix T, not R and T. How do I do that?

Context?

Hi,

Thanks for this tutorial. However, it seems that this method is only limited sets of 3D data viewed same plane.

If I had two cameras at different angles viewing the same three 3D points on a model helicopter, how would I go about finding rotation and translation? Since the triangles wouldn’t be the same shape, would that be an affine transform?

Thanks

Hi,

The diagrams might be a bit misleading but it’s actually meant to be 3D space. I used this technique to align 3D data from a laser scanner.

THANKS! But if there is another matrix to be sovled? For example, deformation matrix. From A to B, firstly rotate,then translate, last deform, how can I get 3 matrixs respectively through 2 sets of points?

@YeMeitu were you able to resolve this? I am trying to do the same thing

Hi,

Ive been trying to make work your matlab code with a rotated image by 30 degree (only rotation no translation).

I use a SIFT algorithm to detect common feature points in both images and then I apply your script (with those points as the input points) to try to get the applied rotation (30deg) and I get that a traslation has been applied as well. Im getting the following matrix:

T =

0.86651 -0.49916 0 79.111

0.49916 0.86651 0 -44.818

0 0 -1 0

0 0 0 1

Some of the input points look like this:

A =

23.883 166.05 0

65.38 67.402 0

102.56 229.35 0

103.26 206.88 0

104.89 198.93 0

129.65 116.17 0

135.97 171.27 0

138.26 177.16 0

B =

16.976 110.74 0

102.47 47.257 0

53.48 204.81 0

65.257 185.77 0

70.537 179.81 0

133.41 120.39 0

111.33 171.34 0

110.47 177.29 0

Could you also please explain how to get the rotated angle from the matrix.

If you could help me I would really appreciated, ive been fighting with it for hours =)

thanks!

Nico

First make sure you’re using my latest code. You have a -1 at T(3,3), which looks like the result from the old code. It should be 1. For 2D rotation it’s super simple, the angle is just

rotation = acos(T(1,1)) or

rotation = asin(T(2,1)) or

rotation = atan(T(2,1) / T(1,1))

I can’t remember if the first 2 takes care of the sign correctly, pretty sure the 3rd one will. Have a look at this page

http://www.cs.mtu.edu/~shene/COURSES/cs3621/NOTES/geometry/geo-tran.html

to see how the matrix elements are related to angles.

Thanks! Ill try then first the latests version of your code. Btw, where do I get it? Ive just seen only 1 Matlab link above, in the Code section, the .m file.

Nico

It was “quietly” updated around Jan 2013. There’s an additional 3 lines of code to check for the determinant being -1.

Ok, so Ive been using the latest version then.

Still getting than undesired traslation in T…

Could you please use the upper A and B points and paste how the matrix T you obtain is? Im using Matlab and Photoshop to rotate the Image points so Im pretty sure those points have only been rotated, no translated..

thanks!

Nico

I assume photoshop rotates from the image centre, so the translation component reflects this. If there is no translation, then it rotates at (0,0), which is the top-left corner of an image instead.

Can you paste me your commands if you still think this is not right?

This is your Matlab function I use:

function T = rigid_transform_3D(A, B)

if nargin ~= 2

error(‘Missing parameters’);

end

% Skipping error checking of input to reduce code clutter

centroid_A = mean(A);

centroid_B = mean(B);

H = zeros(3,3);

for i=1:size(A,1)

H = H + ( A(i,:) – centroid_A )’ * ( B(i,:) – centroid_B );

% NOTE: the transpose is on A, different to my tutorial due to convention used

end

[U,S,V] = svd(H);

if det(V) < 0

V(:,3) = V(:,3) * -1

end

R = V*U';

C_A = eye(4,4);

C_B = eye(4,4);

R_new = eye(4,4);

C_A(1:3, 4) = -centroid_A';

R_new(1:3, 1:3) = R;

C_B(1:3, 4) = centroid_B;

T = C_B * R_new * C_A;

I mean with matrix A, B, so I can copy and paste into my Octave.

These points correspond to 2 takes of the same image, so the 2nd ones might be slightly rotated and translated, but very few pixels:

A =

50.93 204.53 0

87.188 1279.8 0

237.19 600.8 0

378.9 1601.8 0

458.8 82.172 0

B =

54.005 204.5 0

90.51 1282.2 0

240.72 600.69 0

382.59 1601.9 0

462.01 81.683 0

As you see, the matrix T outputs a rotation of 90 degrees. I dont understand it…

T =

1 0.00049764 0 2.9901

-0.00049764 1 0 0.49973

0 0 -1 0

0 0 0 1

Rotation of the 2nd Image in relation to the Original: -89.97148760 degrees

rotation = acos(T(1,1)) = acos(1) = 0 degrees

Ah OK. I was doing it as I think you suggested before

rotation = atand( T(1,1) / T(2,1) );

Oh yeah that was a mistake 🙂 it’s suppose to be atan(T(2,1), T(1,1))

These points correspond to the same image, but the second one is rotated by 35deg in PS. So, in theory, the 2nd ones should be rotated, no translated at all:

A =

18.923 36.453 0

56.503 107.54 0

66.38 68.402 0

107.61 121.48 0

130.65 117.17 0

B =

38.848 205.02 0

110.37 241.82 0

96.319 204.11 0

160.84 222.91 0

176.75 207.01 0

As you see, the matrix T outputs a rotation of -54 degrees and a translation of x= 2.5px and y=186px.

T =

0.81716 0.57641 0 2.5138

-0.57641 0.81716 0 186.27

0 0 -1 0

0 0 0 1

Rotation of the 2nd Image in relation to the Original: -54.80169497 degrees

rotation = acos(T(1,1)) = acos(0.81716) -> 35.198 degrees

=)

thanks.

but still, it suggests a translation of x= 2.5px and y=186px right?

That translation is combination of rotation + 2 other translation. Have a look in the script. There is a variable called ‘t’ (small t). That’s probably what you are looking for.

Ive checked, there is not ‘t small’ in your Matlab rigid_transform_3D function , where should it be?

i really appreciate your help, thanks

line 43:

t = centroid_B – centroid_A

Ill add that line to the Matlab version since it doesnt have it

are the ‘t small’ variables in pixels or mm?

It’s whatever unit your data is.

Thanks, appreciate it.

Firstly, thank you very much for all this work – it’s really helped me!

Secondly, I’m working with this and having lots of problems with the special reflection case. I’ve found that looking at the determinant on V doesn’t always spot the problem. Is it more appropriate to look at the determinant of the rotation matrix, R? This is det(R) = det(U)*det(V) – remembering that the determinant is equal to that of the transpose. I noticed that det(U) was -1 and det(V) was 1 for a reflection case.

Thirdly, many thanks in advance for any further help you can provide!

Kind regards,

Nick

Not sure on this one myself. Can can post the data points that fail?

Not sure on this one myself. Can you post the data points that fail?

A = [-30.3200 20.7500 0 0;

0 0 -27.0300 33.4500;

-7.5000 -7.5000 -7.5000 -7.5000];

B= [-33.4243 17.2760 -3.3237 1.7730;

47.8792 42.1348 32.9578 62.1567;

10.8745 11.6758 -12.4699 40.0464];

I get det(V) = 1 and det(U) = -1.

The solution appears as a reflection – the signs of rotations come out all wrong and the

origin comes out on the wrong side of the centroid.

The B matrix is measured and has lots of noise on it.

I changed my code to:

if det(V)*det(U) < 0

V(:,3) = -V(:,3) ;

disp('SVD Special Reflection Case!')

end

This seems to have sorted it, but I've got lots more testing to do…

Cheers,

Nick

That’s very interesting. Let me know how you go and if you find a solution I’ll update the page.

I’ve just been reading about how the determinant of a transformation indicates whether the transformation preserves orientation.

This was helpful: http://mathinsight.org/determinant_linear_transformation

Basically, if det(T) is negative then the orientation has been flipped. For this to happen, det(R) must be negative; which means that if det(V) or det(U) are negative exclusively, then there will be a flip in orientation giving the reflection case.

Changing the sign of the 3rd column of V corrects this by changing the direction cosines that make up R to a right handed set rather a left handed set; this is such that R(:,3) = cross(R(:,1),R(:,2)) and not cross(R(:,2),R(:,1)).

I think this has solved it, thanks to you and Klass Jan Russcher. I wouldn’t have landed on this if not for you guys (and having some sort of (good / bad / delete as appropriate) luck with my datasets).

Cheers,

Nick

Thanks for the link. So what was your final solution?

My solution was:

if det(R) < 0

R(:,3) = -R(:,3) ;

end

This guarantees that det(T) will be positive, as the relative positions of the centroids have no impact on this.

Updated the page with your solution 🙂

Hi Nghiaho and Nick i have implemented your method using Unity and ARCore. Thanks a ton it helps me a lot. I had a problem though. When i implemented reflection case by just multiplying the third column of R by -1 sometimes my points flip in wrong direction. It took me a while to figure out it is caused by reflection case. I changed implementation to multiplying third column of V by -1 and recalculating the R, it worked like a charm. I thought you might want to know. Thanks again!

Hi Ali,

I too am working on ARCore and am in need of this method. I saw your comment on StackOverflow and found this page.

Highly appreciated 😀 I was lost otherwise.

I have a question in regards to the accuracy, i.e. the rmse. I seem to be getting “okayish” results but I am confident that I am using the same points across both ARCore session, but I never get optimal results. “Everything looks good!” is never printed

I select the same 5 points – AugmentedImage centre, and the 4 corners of the image, whose 3D co-ords I get through hitTest by clicking on the screen of my mobile.

Any idea how I could improve this?

The tolerance I used for my test case is 1e-5, which may be unrealistic if you’re using measured points subjected to noise. If you’re dealing with pixels, the tolerance is probably more like 0.5 pixel or so.

Hi nghiaho12,

thanks for your reply. After a couple of tests, I did find that I get an accuracy of around 0.05 or so. And this is pretty accurate for my use case 🙂

Any suggestions of how this accuracy could be improved? Just food for thought..

Increase the number of points that are taken to calculate R and t?

Assuming you have simple noise on your measurements then increasing the points will help produce a more accurate R, and t. You might not see much change in the RMSE though. The only way to decrease that really is if your measurements are more accurate (eg. sub-pixel).

Hi,

I read your explanation and it was fabulous. But I am having some difficulty in using the code. Could you please specify how to specify the points? My doubt is that say X is the set of 3 points and Y is the same set after applying R and T. How can I get Y back from X, R and T?

I have been using Y1=R*X+repmat(T,1,3), but after this Y1 and Y are different. Why does this happen, where I am going wrong?? Please help.

I’ll definitely modify the code to add better documentation soon. Here’s a working example using random points generated. Actually, somehow I accidentally omitted this code in the Matlab file.

R = orth(rand(3,3)); % random rotation matrix

if det(R) < 0 R(:,3) *= -1 end t = rand(3,1); % random translation n = 10; % number of points A = rand(n,3); B = R*A' + repmat(t, 1, n); B = B'; T = rigid_transform_3D(A, B); A2 = T * ([A ones(n,1)]'); A2 = A2'; A2 = A2(:,1:3); % remove the 1's added earlier % Find the error err = A2 - B; err = err .* err; err = sum(err(:)); rmse = sqrt(err/n); disp(sprintf("RMSE: %f", rmse)); disp("If RMSE is near zero, the function is correct!");

thanks a tonne!!!…its working perfectly now….tho I have one more doubt….what if i give it 2 sets of 3 random points as input….what u have done is that u have calculated B from A(which is random) using R and T….I am asking what if I make both A and B random….I did try this but I found that the RMSE error was greater,,,about 0.15…is it justified???

Yep absolutely. If A and B can’t be aligned “perfectly” it will find the “best” it can, in terms of least squared error. Sort of like the line fitting problem.

This is a great, simple write up on a great method for registration.

I’ve implemented a similar method but wonder if you (or others) might provide insight to my next challenge. I’m honestly not quite sure if it is even possible.

I am hoping to constrain the problem a bit more to use in a machining/inspection application. In this application, I would like to limit my rotations to only one or two axes and do the same with my translations.

For limiting translation, this is simple, I think, because rotation and translation are decoupled.

Limiting rotation: It is simple to limit to one axis of rotation by removing elements of the corvariance (H) matrix to match the desired 2D rotation matrix. Limiting to two axes has presented much more issue.

Also, I am experimenting with the rotation and translation of the data about some datum other than the centroid. In certain situations, it may not be possible to translate the to the origin before rotation.

Open and curious to hear thoughts on these!

Thanks!

Ignore my last comment, misread what you wrote. What did you try to restrict the rotation matrix to 2 axes? My first thought would be to optimize for one axes after another. So R = R2*R1. But have no idea if this will work.

My first thought was to restrict terms of the H matrix to match what a 3D rotation matrix would look like for 2 axes of rotation but this method often provided reflections. I’ll give your idea a try, not sure if it is going to provide a definite best fit though. Worth a shot.

Progress on my points:

1. Limit to one axis of rotation: Just reduce the problem to 2D.

2. Change center of rotation: Do not align centroids to (0,0,0) as a first step. This method will not produce perfect alignment in most cases but does appear to work.

The main difficultly I am having is proving a best fit. While outputs can appear to look as such, I’m still not sure if I am finding an optimal solution

Could you remap your data such that each point is measured as position along and normal distance from an axis? Finding the transformation between 2 axes should only be a 5 degree of freedom operation – translation (3D) and rotation (2D). Of course, the trick is in the detail… but something like that might work.

Hmm not sure myself, I’ll have to take a long think about this one 🙂 Depending on your application, if I was to get stuck on the maths I might be tempted to *cheat* and run some non-linear optimiser eg. Levenberg-Marquardt. If both dataset can be centred to the origin, then you only have to optimise for 2 rotation variables. But where’s the fun in that hey :p

Nick-I like that idea, the 2 axis of rotation case is one which I finding that I think I will rarely see. Still, that approach seems worthwhile and I’ll try and code it up.

Nghia-I’m trying to be “elegant” for no reason other than that I really like this method and want it to work. I definitely have some “cheating” methods that will work but I think we’re on the same page as to why I don’t what to use them. 🙂

I tried an iterated aproach to combine two rotations (one per step) but this runs into issue because rotation is order dependent.

I think all I have really done at this point is to selectively reduce my problem to 2D. This works well if point correspondences are know ahead of time. I am using an iterative process of fitting with a closest point algorithm to determine corresponding point sets at each step. Even with dimensions reduction, I am seeing convergence.

Since I am faced with the same problem right now, Im curious: Did you come up with a solution?

Hi All,

I am trying to use Scanalyze app(http://graphics.stanford.edu/software/scanalyze/) implemented by Kari Pulli in Stanford University for performing global registration. As there is no user manual I am not able to figure out to use this app to perform global registration. If any of you know please let me know steps. Hi Nghia, if you have any idea please let me know.

Thanks

ravi

Came across it long time ago but never used it.

Thanks for this explanation. I am a bit confused about something.

If I have the following two sets of 4 points:

A= [-503.359 -30.668 1164.350;

-423.903 -27.747 1226.100;

-514.524 58.149 1186.300;

-389.714 65.144 1265.790;];

B= [-501.205 -30.038 1159.550;

-422.803 -27.152 1222.950;

-516.559 58.533 1191.580;

-390.953 66.119 1270.120;];

As you can see, the two sets of 4 points are very close to each other

When I run your method I get results like:

Rotation =[ 0.9987 -0.0499 0.0071;

0.0503 0.9955 -0.0798;

-0.0031 0.0800 0.9968;];

Trans = [ -8.3652, 120.3392, 1.5959];

If I compute the error as R*A+t -B, the results are indeed very good.

However, I really don’t expect to see such a large translation.

The points are really close to each other, and I would expect almost no translation…

Any insight would be helpful!

Thanks!

Yes it is a bit unintuitive why “t” is not what you expect. To see why this is the case you have to keep in mind the following operations are executed in this particular order:

1. rotation around the origin

2. translation

The R matrix rotates points around the origin eg. (0,0,0). Have a look at this PDF I quickly whipped together.

rotation_translation_smiley.pdf

In that example, the smiley face from data A and B are at the exact same position, but oriented differently. The rotation shifts the point and hence the large translation to shift it back.

I see. So the actual translation I’m after is:

T = R*Ac + t – Ac

where Ac is the centroid of A?

Maybe I should clarify my question. Given A and B our two datasets and assuming that B has rotated and translated with respect to A, how do I find the transformation matrix between the two such that

A = H*B

Thanks!

Do you mean a matrix H that combines R and t? Like say if H is a 3×4 matrix such that

H = [R | t] and B is a 3xN matrix, where the last row is all 1 ?

hi,

your code is very helpful.

still i have a question.

after running the code and obtaining the rotation matrix R, how can i extract the rotation angles Roll/Pitch/Heading ?

thank you for your help.

This page should be of help

http://nghiaho.com/?page_id=846

thank you, it was very helpfull.

Funny we were looking into a similar story, but for data points in higher (than 3) dimension.

So let X=A-centroid_A, Y=B-centroid_B

we have Y=R*X, or alternatively Y’=X’*R’

To find R’ from a regression or projection point of view you can derive

R’=inv(XX’)XY’

Other point of view than SVD, no decomposition.

Only problem is it doesn’t enforce a proper rotation matrix (orthonormal).

This is how I initially tried to solve this too. I realized that this R found by the least squares approach wouldn’t be orthonormal, so I tried to force it orthonormal using SVD.

Am = A – centroid_A

Bm = B – centroid_B

R_ls = inv(Am) * Bm

[U,S,V] = svd(R_ls)

R = U*V’

but I kept getting strange rotations. Is there a reason we can’t force the least squares solution to be orthonormal?

This one is best left to the mathematicians 🙂

Using the inverse as above will return a general 3×3 matrix that consists of rotation + scaling + shearing + others(?). Recovering just the rotation is probably not straight forward. I’ve once had a similar question about recovering rotation from a general 2D affine transform. From my brief investigation it seems to be possible but under some circumstances only.

Hello,

I think you have a little mistake in your implementation. In case of reflection the optimal rotation must be recomputed:

if determinant(R) < 0

multiply 3rd column of V by -1

recompute R

end if

The next paper may be of interest “Estimating 3-D rigid body transformations: a comparison of four major algorithms”

Thank you for this extremely clear and helpful article.

Regards!

Oops, thanks for that 🙂

Hello,

your code is very helpful and im using it to find the rotation angles on a moving platform.

i am performing this caculation for a long time series data, lets say i have 3000 measuring epochs and i calculate the rotation angle for every epoch (doing the same calculation 3000 times).

my question is if its possible to calculate the accuracy of those rotation angles?

if there is, how?

at the end i need to have, for every measuring epoch, 3 rotation angle and their accuracies.

thank you for your help.

Dany

Hi,

The accuracy is going be as good as your input data, numerical inaccuracies from the code should be negligible. So I guess if you know the error from your input you should be able to propagate that information through. Not sure exactly how on top of my head though.

ok,

thank you.

Hi !

I really like your method and its implementation, simple and quite efficient !

I have one question : why is the solution it provides optimal, and above all why is it optimal in the least-square sense ? Does it have to do with SVD ?

best regards,

Lu

Hi,

Your best bet is to have a look at “A Method for Registration of 3-D Shapes” for a more deeper understanding. I just took their words for it 🙂 In general, anything that solves using SVD is minimising a least square error.

Great code. I am just wondering on how to break down the final transformation matrix. For the example with Nico was there only an assumption of rotation in the x-direction? what if there was a rotation is y direction as well? How can I obtain the rotations in all directions from the transformation matrix. I looked at the link to the tutorial but I cannot figure out how to get both the breakdown in translation and rotation given cetroid x,y,z.

i am trying to find out how good my rotation and translation are for a rigid body motion problem. how can i do this having started down the SVD path?

You can apply the transform and for each point find its nearest point and calculate the distance. Do this for every point and average them, this will give you a good idea of how good the transform is. If your points are noisy you can try the median of the distances instead of the average.

Can a covariance matrix be generated for the rotation and translation? What i am doing is implementing point to plane ICP fit.

I don’t know about getting a covariance matrix for rotation and translation. I’m familiar with point to plane but never implemented it. Isn’t the error just the shortest distance from the point to the plane?

Hi,

I like the simplicity of this code but I am having a little difficulty in understanding how to obtain the new coordinates. Given a data set A [nx3] and another set B [nx3] the code finds the transformation [4×4] matrix. How is it possible to apply this transformation matrix to data set B so that I can obtain the new coordinates?

Thanks a bunch,

Mel

The algorithm finds R (3×3 matrix) and t (3×1) vector. It is applied like so:

B2 = R*A + t

B2 should be close to B (best least square error). The ‘A’ matrix has to transpose to (3xn) to do the operation above.

Oh! I got it thank you. Also the data sets always have to match in size correct? What if you have two sets that you don’t have equal dimensions? Since you are matching based of the centroid then the number of points shouldn’t theoretically matter correct?

Yep, each set can have different number of points provided each point in A is paired up with a point in B. Some points with have more than one pairing, but that is okay.

How would I pair these? I was considering trying to code the rest of the “smaller” matrix full of zeros but since I am a newbie to matlab its a bit rough.

Typically for each point in A you find the closest point (Euclidean distance) in B and pair them up. If A is the larger matrix (eg. 10×3) and B is smaller (eg. 5×3), then you can make a B2 that is 10×3, with duplicate entries.

Oh! that makes sense. Thank you 🙂

Good Morning,

Me again and sorry for the constant commenting. I followed your advice but now I am not getting the correct transformation. I have two data sets A and B and have repeated values in the smaller matrix to match that of the larger but now the transformation is wrong. Here is the code i have used of yours with slight modification as well as the data sets used. Any suggestion?

A= [205.5,890.0,175.0;

187.5,890.0,175.0;

169.5,890.0,175.0;

169.5,870.0,175.0;

187.5,870.0,175.0;

205.5,870.0,175.0;

196.5,880.0,175.0;

178.5,880.0,175.0;

196.5,890.0,175.0;

205.5,880.0,175.0;

196.5,870.0,175.0;

187.5,880.0,175.0;

178.5,890.0,175.0;

178.5,870.0,175.0;

169.5,880.0,175.0];

B=[175.0,890.0,200.0;

175.0,890.0,187.5;

175.0,890.0,175.0;

175.0,870.0,175.0;

175.0,870.0,187.5;

175.0,870.0,200.0];

function [A_Orig,B_Transform] = auto_orientation(A, B)

%Checking user input

if nargin ~= 2

error(‘Error: missing parameters. Please review your inputs.’);

end

%Keeping Original Data Sets

Orig_A=A;

Orig_B=B;

%Finding Centroids of Data Sets

Centroid_A = mean(A);

Centroid_B = mean(B);

%Matching Matrix Dimensions

[ma,na]=size(A);

[mb,nb]=size(B);

if ma>mb

N=ceil(ma/mb);

New_B=repmat(B,N,1);

New_B=New_B(1:ma,:);

New_A=A;

elseif mb>ma

N=ceil(mb/ma);

New_A=repmat(A,N,1);

New_A=New_A(1:mb,:);

New_B=B;

else ma=mb;

New_A=A;

New_B=B;

end

%Setting up initial matrix

H = zeros(3,3);

%Computing matrix for singular value decompositon.

%Recentering A and B to remove translational component

for i=1:size(New_A,1)

H = H + ( New_B(i,:) – Centroid_B )’ * (New_A(i,:) – Centroid_A );

end

%Singular value decompostion for optimal rotation

[U,S,V] = svd(H);

%Rotation Matrix

R = V*U’;

%Checking for special cases of just reflect.

if det(R) < 0

fprintf('Reflection detected\n');

V(:,3)= -1;

R = V*U';

end

%Rotation with respect to reflection.

R = V*U';

%Computing translational portion of each Data Set

t_A = eye(4,4);

t_B = eye(4,4);

New_R = eye(4,4);

t_B(1:3, 4) = -Centroid_B';

New_R(1:3, 1:3) = R;

t_A(1:3, 4) = Centroid_A;

%Transformation Matrix

T = t_A * New_R * t_B;

%Apply homogenous coordinates to use Transformation Matrix

mb2=size(Orig_B);

B_Ones=ones(mb2,1);

B_H=[Orig_B B_Ones]';

%Applying Transformation Matrix

B_Transform=T*B_H;

B_Transform=B_Transform';

%Obtaining the 3D Coordinates for A and B

B_Transform=[B_Transform(:,1) B_Transform(:,2) B_Transform(:,3)];

A_Orig=A;

Your ‘%Matching Matrix Dimensions’ step is incorrect. For each point you need to find the closest matching point in the other set (smallest distance), not just copy and paste the points.

Oh shoot. Well back to the drawing board. Thank you so very much for helping me.

I manually selected a few corresponding points between two models and estimated rigid transformation based this SVD based method. But the result seems vary bad. Would you mind give some suggestions about this problem?

The link is here: http://www.pcl-users.org/How-to-estimate-rigid-transformaton-by-a-few-corresponding-points-td4029220.html

Thanks,

ping zhang.

See if the determinant of R is -1 or +1, if it’s -1 there’s a reflection that needs to be corrected.

Hello. I have a question..

When we use SVD to get the rotation matrix, is this matrix rz*ry*rx or other combination.

I need to know because I want to get yaw,pitch and roll using it.

Thanks

The rotation matrix ordering is depedent on how you extract the Euler anngles and not on SVD. If you use the same method as me (http://nghiaho.com/?page_id=846) then the ordering is Z*Y*X.

Hello

Thanks for your article, it helped me a lot!

I still have one question: Am I right in assuming that you can’t use this method when there’s also a (small) scaling involved (values range from 0,98 to 1,02)? What would you recommend in these cases? Do you maybe know a (scientific) publication which deals with that problem?

Thanks 🙂

Hi,

I’m not actually sure what happens if the scaling is off a bit. I suspect in practice you might be able to get away with it. I don’t know of any reference on top of my head that deals with this. Some keywords I might start with is ICP + scaling.

Thanks for your advice on the keywords, I already found some publications.

One last question: Have you ever thought about simply calculating the 4×4 transformation matrix (using homogenous coordinates) or the 3×3 transformation matrix and the translation vector with a set of linear equations?

Do you know if these two solutions are equivalent?

I originally wrote the tutorial using 4×4 matrices but later changed to 3×3 + 3×1 vector. They’re both equivalent solutions.

Thanks so much, this has helped me quite a bit. Has anyone tried this in C++ with BLAS/LAPACK/ARAMADILLO? I am giving it a shot and will post my results if it works!

Thanks for such a nice code and tutorial. I am facing some problem. Explaining properly.

I have 2 different partly reconstructed point cloud of one 3d model. There is a scaling difference between these 2 reconstruction. This scaling factor is unknown to me. Now I want to merge this 2 model. When I use your code to transform from one point cloud to another and merge both point cloud , I can see the scaling difference. I mean I can see 2 model merged but because of the scaling difference 2 structure separately visible. How can I rectify this problem so after merging there there wont be any scaling error.

I haven’t actually tried implementing this but it might be possible to find the average scaling factor via

1. Do ICP as normal

2. Re-centre the data again

3. Calculate the average scaling via: scale = length(A_i) / length(B_i). Where length(A_i), is the length of the point A_i from the origin.

4. Apply scale to A or B

5. Undo the centering

This is a pretty simple approach. I know there are more sophisticted ones out there.

Thanks for your reply.

I know the correspondence between 2 points cloud set, so i think step 1, 2, and 5 is not required. I have tried rest of the steps but did not get expected result. Let me know if I am doing it in right way, or any other thought.

If you can share any implementation of some sophisticated algo then that would be really helpful.

A visitor a while back pointed me to this paper:

“A System for Video-based Navigation for Endoscopic Endonasal Skull Base Surgergy”

There’s a bit where it details on scale estimation. I haven’t tried it though.

Many many thanks..

I was wondering if you could give me any ideas on how to register two point sets if the points were NOT corresponding? I have two spare point clouds (around 20 points), but to start I don’t know any other information, just xyz. I’ve been trying iterative closest point, and sometimes it finds ‘the’ solution, other times is gets caught in a local minimum. and sometimes the number of points in each set is not the same. thanks John

You’ve pretty much hit the limitation of the vanilla ICP, it requires a “good” initial guess. I don’t know of any good way to solve this other than brute force and some robust criteria (depends on your data).

Hi Nghia,

first of all: big thanks to you! Right now I’m doing an internship in medical image processing, and my first task is to implement the ICP. And your explanation helped me a LOT! If I had, I’d put 3 thumbs up for you.

But now my question: I’m implementing this algorithm in 2D space, thus for images. Of course my rotationmatrix always looks like this:

bla bla 0

bla bla 0

0 0 1

The problem I have now is that “special reflection case”. There are iterations where I get a rotation matrix with d<0. But multiplying the 3rd column with -1 obviously doesn't change anything. So do I just multiply the 2nd column with -1? Because I'm not that familiar with the mathematics behind it, I can't really tell if that's the right solution.

Hi,

Glad you found it useful. Ahh the dreaded reflection. I never really got to the bottom of the special reflection case, other than see the “fix” mentioned in some paper.

Try what you suggested and confirm the results visually, it “feels” right 🙂 If you still have problems let me know.

Thanks so much, this seems to work perfectly in matlab! This helped me a lot! You are the best!

😀

Hi, I think there’s a typo in your “Special reflection case” handling above. To get it to work for me, I had to use

if determinant(R) < 0

multiply 3rd column of V by -1

recompute R

end if

which is what your Matlab code actually does, but is different from either version you have in the text of this post!

Well spotted 🙂

Hi all,

First of all, thanks for the great tutorial.

I am trying to write the Kabsch code although following a slightly different procedure and trying to write it in Mathematica software. I am having some problems and I wonder if someone could put a small example with the “A” and “B” data input and then the “err” and “R” outputs (lets consider that no translation is necessary). That way I could find any mistake easily

Thanks a lot.

Hi! I am using this algorithm for some visual odometry stuff, and can’t figure out one thing, may be you can help me?

Assuming I have these two 3-d points sets, that describe the same static object in the space, which I observed with my stereo camera from different angles. So, coordinates of this points determined relative to the frame of reference of the camera. When I compute R and t for these two point clouds – I get them in cameras frame of reference. Now, how can I compute camera movement with them? As I understand, camera rotation in global frame of reference will be R^-1. But how can I get translation of the camera? It appears to be tc=R^-1 * t, but I can’t understand why! Why I should multiply vector t by R^-1?

Where did you get tc=R^-1 * t from? My initial thought is if the camera is at X=(0,0,0) then X2 = RX + t = t, hence translate only t.

Don’t forget to apply transformation from camera’s frame of reference to global one, because R and t you get it camera’s frame.

Well, just draw it for 2d case. Imagine, that we flying around the object and looking at its center, so, that its centroids in camera frame in both cases are equal. So, in camera frame of reference this object just rotates around it’s centroid.

Rc=R^-1 – this is obvious, where Rc – is camera rotation in global frame. And it looks like tc=(R^-1)x(-t). May be there is mistake, but I can not find it.

I’m going to have to solve the same problem, I’m also working on stereo vision. Except my head is a bit tired from watching the World Cup 🙂 I’ll get back to you if I see something different.

Hi everyone,

First of all, thank you for the great tutorial.

I’m looking to solve the Wahba’s problem, I got your algorithm wrote in C# working pretty well, but now I wish compute weights to some points on 3d space.

I would be glad for some help about that.

Thank you and best regards.

I’m not too familiar with that problem. But I’m also current working with 3d registration from stereo camera. So I’ll probably need to weight the points too.

Hi Ephaestus,

can you please send me the C# code of this algorithm ?Thanks a lot!

Hi Carlos,

Did you ever get the C# code? If so that would be very helpful. Thanks

Nice tutorial…..

can u also explain the same thing for Affine transform, especially for scaling matrix.

thanks anyways…

This tutorial is not applicable to affine transform. The affine includes more than just rotation and translation.

Dear sir,

Greetings!

This is rakesh from India. It really simple and interesting to learn about the rotation and translation matrix from your site. Actually i surfed a lot in internet for a couple of weeks to end up in your site.

I am doing my research on Computer Vision. I would like to emulate a touch screen using Laptop and a Projector. I will use 4 LEDs and a color tracking algorithm to estimate the camera pose and applying it to the 5 th LED which actually acts as a pointer.

So, all i will have was just a 2D data of the 4 points in camera and a 3D point of the LEDs. Can you guide me in how can i use it to estimate the rotation and translation matrix?

Regards,

Rakesh

Hi,

I don’t know what your setup is, but if all the LEDs are on a single plane then have a look at this code I wrote nghiaho.com/?page_id=57

Hi,

I am facing a similar problem but I also do have some scaling factor. In my case I already have a matrix which does the base transformation and I want to find out the rotation, translation and scaling. Could you give me any advise on how to do this?

Regards,

Damian

Hi,

Are you saying you have a transformation matrix and want to extra the rotation, translation, scaling from it? Or something else ?

Thats exactly what Im trying to do.

Great tutorial! One question about cross covariance matrix H: It should be divided by N or not? (At least this is what the original paper does in (24). “A Method for Registration of 3-D Shapes’, by Besl and McKay, 1992”)

Thanks a lot!

Germán

You don’t actually need to divide for it to work, but you can if you want to be technically correct since H is meant to be covariance.

Hi,

Thanks for teh tutorial. Is there a weighted version of the algorithm?

Thanks

There is, I just haven’t implemeneted it.

Thanks. Can you please give me some hints/references regarding its implementation?

Thanks

Not really, never implemented it before. But I guess the obvious thing to do would be to replace the average (for centroid calculation) with a weighted average. And something similar when calculating the rotation.

Hello,

What about rotation around different centers, will it invalidate the algorithm? How can we deal with that?

Thanks a lot

What do you mean? The algorithm will shift all the 3d points so their centers are at (0,0,0) when doing the calculation.

But the computed translation will no longer be valid. Will it?

You’ll have to give me a clear example of what you are thinking about.

Your original problem is that for two sets of 3D points x and y related by x=Ry+T, find R and T. Yes?

Now what if x and y are related by a rotation along some known point C=(a,b), rather than C=(0,0). What will the output R and T of your algorithm mean then?

If the centre is shifted, the T will change to reflect this. If x and y are 3d points that are related by a Euclidean transform then this algorithm will always output a valid R and T.

Thanks for the tutorial.

Would you more explain if number of points is more than 3, let’s say 8, where the least square error come to play in your formulations?

Can you be more specific? I kind of went over what the least square tries to optimize (the square distance between corresponding points).

Is there a way to find scale as well?

Never attempted it but there probably is a way.

What about a situation when the two datasets are in two different coordinate system? Would that require me to first transform one coordinate system in such a way that its origin is aligned with the other coordinate system? And only after that start calculating the the rotation and translation for the point cloud.

Or what would be the approach in this case?

That’s pretty much what this algorithm does.

Doesn’t this algorithm assume that the two coordinate systems are with the same orientation?

Nope

Is the rotation matrix and the translation vector out put from the code corresponding to the transform of the centroid A to centroid B and not the Cartesian coordinates of A to B?

It’s the cartesian of A and B

Hi, Thanks for the tutorial.

I’m having serious trouble calculating the Covariance Matrix. Every time i use your function i get a 1×1 answer for each bit making a 1*3 matrix….

Here’s my data and calculations:

Points:

A1 = 2,2,0

A2 = 4,3,0

A3 = 3,1,0

B1 = 2,-2,0

B2 = 1,-3,0

B3 = 3,-4,0

Centroids:

CA = 3,2,0

CB = 2,-3,0

(A1 – CA)*(B1 – CB)’ = 0

(A2 – CA)*(B2 – CB)’ = -1

(A3 – CA)*(B2 – CB)’ = 1

My matrix arithmetic wasn’t the best to start with and it’s been a few months since i’ve had to do it.

I tried to to make 2 3X3 matrices of (Ai – CA) and (Bi – CB)’ and then multiplied them together, but this gave an incorrect rotation matrix (i think) The rotation is a right hand 90 degree rotation of a triangle about the Z axis.

Any help would be greatly appreciated as i imagine i’ve been having a completely special moment for the past few days trying to work it out!

Best regards, Ben

Swap the transpose so you’re doing (3×1) * (1×3), instead of (1×3) * (3×1).

eg.

(A1 – CA)’*(B1 – CB)

Thanks, can you explain the reasoning behind this?

Not mathematically. I’m more of an engineer, I just implement stuff 🙂 There’s paper that I reference on the page that goes into the maths more.

A Method for Registration of 3-D Shapes’, by Besl and McKay, 1992.

No worries, Engineer myself. I did read the paper but the maths is portrayed in a ridiculously complicated manner, the same as every single paper, ever.

I used the Octave code you provided and it seems to give the answer thats inverted? again i’m most likely missing something but i’ve tested it a few times with known rotations and it always gives the answer in the opposite (diagonally opposite quadrant)

E.g if you take a 3D space and 3 points at:

A1 = 1,1,0

A2 = 2,2,0

A3 = 3,3,0

rotate them 90 degrees right handed to:

B1 = 1,-1,0

B2 = 2,-2,0

B3 = 3,-3,0

Then input these into the formula given in your last comment, or indeed the octave function, it gives the rotation / translation whatever matrix as:

R = -0 1 0

-1 0 0

0 0 1

The rotational matrix for a rotation on the Z axis is:

Cos(th) -Sin(th) 0

Sin(th) Cos(th) 0

0 0 1

This is obviously incorrect, as arcsin(-1) is not equal to arcsin(1)

And when another point is added on to the A points, say 5,5,0

its rotational counterpart [5,5,0] * R is given to be -5,5,0 rather than 5,-5,0.

This is solved by multiplying the answer by -1, therefore multiplying the rotational matrix by -1…. inverse is definitely the wrong term, but you get my point.

Thanks for the tutorial, its been a big help and i’ll have another look at the paper.

Ben

The R matrix is correct. You have to do R*[x,y,z]’ not [x,y,z]*R. So for your example in octave

A=[1 1 0; 2 2 0; 3 3 0]

R=[0 1 0; -1 0 0; 0 0 1]

R*A’

ans =

1 2 3

-1 -2 -3

0 0 0

The result from “ans” is in column order. It matches what you expect.

Hi,

thanks for that!

what can i conclude from a rmse=68? what is the max rmse that still considered as good enough?

thanks

Late reply. It depends on the scale of your points. If all your points are like between [-1,1] then 68 is an error.

Hi,

I am writing some game code, and I used this method to reconstruct the rotation matrix from a model which has been deformed by a physics simulation. (I am doing a sort of verlet-integration style sim). This gives me a very robust rigid body simulation.

The method is a bit math heavy for games work, but it worked fine.

Until, that is, I plugged in some different models, and then occasionally the SVD spits out a V matrix with a determinant of zero. This looks very odd, because the input matrix is virtually identical to one which produces a valid outcome. It could be a bug in the SVD implementation, which I did not write myself.

However, I am thinking there might be a simpler solution. If you consider a rotated cube, it would not be hard to reconstruct a 3×3 matrix by looking at the cube’s edge vectors and generating orthogonal i,j,j vectors. You could derive a rotation matrix very cheaply.

I am wondering whether this methodology could be applied to arbitrary geometry by extracting i,j and k using weighted sums of edges?

Hi, You could use the Quaternion method of Horn instead of this method. Then instead of SVD you just find the eigenvectors of a 4×4 symmetric matrix, which is about 30% cheaper than SVD of 3×3 matrix. Also you don’t have to worry about handling special cases like reflections as in the SVD method.

Check the Horn original paper:

http://people.csail.mit.edu/bkph/papers/Absolute_Orientation.pdf

A C++ implementation can be found here:

https://searchcode.com/codesearch/view/15567732/

Hello all

I’m looking for transformation parameters to align two sets of points and this function looks perfect.

For my application I need result in form of (trans.x, trans.y, trans.z, rot.x, rot.y, rot.z)

How can I calculate rotation about each axis from the rotation matrix?

Thanks

M

Try this http://nghiaho.com/?page_id=846

You are a hero for posting this!

Hi, I sent you an email to discuss how to apply this technique to my problem, which is very similar to what you described.

Thanks for the post and nice blog!

A better solution for the R matrix is as follows:

id = eye(size(H,1));

id(end) = det(V*U’);

R = V*id*U’;

Source: http://igl.ethz.ch/projects/ARAP/svd_rot.pdf

Hi there,

thanks for the tutorial. Very useful! I’m trying to implement a landmark constellation matching algorithm based on this in Python.

I downloaded your python source code and I think I may have found an error:

in line 22, the matrix implementation needs to be implemented with Numpy’s dot() function instead of the ‘*’ operand. Otherwise, you get a Nx3 marix instead of a 3×3 matrix.

Cheers and thanks for all your work!

-Silvio

There’s a comment there that says it implies the input is of type mat, and not an array.

Hi nghiaho.. your work is awesome and is helping me alot.I am working on Visual odometry and using (rigid_transform_3d.m).

But please help me. I will explain my problem in short.

1.I use your function (rigid_transform_3d.m) to find relative rotations(delta R) and relative translations(delta t) between consecutive images.

2.Thus,for a sequence of 300 images,I obtain 299 delta R and delta t.

3.now,while plotting position of vehicle, I use

pos{i}=delta_R{i-1}*pos{i-1} +delta_t{i-1};

but results are in camera coordinate system. Can you suggest me the way to convert in world coordinate system?

I remember doing a similar exercise. This is how I did it

globalR initialize to 3×3 identity, and globalT to [0;0;0]

// repeat for all images

globalR = R*globalR

globalT = R*globalT + T

pos = -globalR.transpose() * globalT <-- plot this

It worked amazingly!

while(1)

printf(“\n Thanks alot”);!!

🙂

hi ,

im also working on visual odometry project , am getting some errors in finding the same 3d points in successive stereo frames , so if u can pls send me your VO code it’ll be helpful ,

Thankyou

here is my email id: pavankumaru93@gmail.com

Hi,

Thank you for your very didactic tutorial.

I re-used the code to adapt it to my application.

I have to adjust 3D tomography images (The experimental configuration suggests that we are in a case of a 3d rigid transform and between two images, the object is mechanically rotated with a known angle + slight unwanted movements).

So, I select manually a set of common points between two images. Then I inject the coordinates of this points in A and B to find the rotation matrix and the translation vector.

From these results, I reconstruct the 3D transformation matrix (4×4) :

[ R R R T]

[ R R R T]

[ R R R T]

[ 0 0 0 1 ]

Where R corresponds to the rotation matrix and T to the translation vector.

I apply this transformation to the 3D image corresponding to the A set. After addition of the images “A” and “B” everything seems to work perfectly and the selected points seems to be well placed. However, the rotation angle found is doubled compared to the angle that the object physically done.

Strange, isn’t it ?

How did you recover the angle from R?

Hi Nghiaho,

Thanks for this post. Actually I need to find angle of rotation between two 3D point clusters so I am using this post to find Rotation matrix and http://nghiaho.com/?page_id=846 to find angles. However, the angles are a little weird.

So for debugging purposes I tried to find Rotation matrix between y=x ([0,0,0], [1,1,0]….) and x axis ([0,0,0], [1,0,0]…). I initialized 50 points in each group. However, the Rotation matrix I got has a determinant of 0?? Any idea what could be the problem?

I have implemented this in Java and OpenCV. I haven’t tested your code since I don’t have Octave or Matlab. Here is the Rotation matrix I got :

[0.70710677, 0.70710677, 0;

-9.7000784e-016, -9.7000784e-016, 1;

-0.70710677, -0.70710677, -1.3717982e-015]

Hmm without any code hard to say. I’d check line by line with my Octave code. Octave is free on Windows and Linux and maybe Macs, so it’s quick to test.

It seems the svd generated in OpenCV is different than the one generated in Octave.

My H :

[10412.5, 0, 0;

10412.5, 0, 0;

0, 0, 0]

OCTAVE :

U =

-0.70711 -0.70711 0.00000

-0.70711 0.70711 0.00000

-0.00000 0.00000 1.00000

S =

1.4725e+004 0 0

0 0.0000e+000 0

0 0 0.0000e+000

V =

-1 0 0

-0 1 0

-0 0 1

OpenCV:

S: [14725.499;

0;

0]

U: [0.70710677, -9.7000784e-016, -0.70710677;

0.70710677, -9.7000784e-016, -0.70710677;

0, 1, -1.3717982e-015]

V: [1, 0, 0;

0, 1, 0;

0, 0, 1]

This is strange, anyway to handle this?

Even the U’*U product for OpenCV is not I, its

1 0 -1

0 1 0

-1 0 1

How are you calculating H? That looks wrong.

I have constructed 2 3XN matrices A & B for the points. I am calculating the mean of each row and then creating a 3X1 vector for each of the two matrices. Then I subtract that from each column of A & B respectively. This creates two matrices AA and BB of 3XN each again.

Then I calculate H as AA*Transpose(BB)

Can you list out the commands and matrix data you use.

Hi Nghaiaho =)

This is a great page, it really helps me a lot!

I want to implement an 2D Registration algorithm (not just set the 3rd dimension = 0).

Can I simplify all different 3D algorithms (e.g. your SVD or Quaternions, Orthonormal Matrices) to 2D differently or is there only one optimal algorithm for two dimensions?

I found one here ( http://hss.ulb.uni-bonn.de/2006/0912/0912.pdf ) at the bottom of page 133 of the pdf ( = page 112 of the paper). But to what is this corresponding in 3D? It looks similar to SVD, but I’m not sure.

Kind regards, Niklas =)

I’ve never implemented a 2D version of this algorithm. But I’d imagine the 2D version to be pretty simple, without the use of SVD, since you only need to solve for a single theta angle. If you re-centre both datasets then you only need to find the angle theta to rotate point set A to B. For each point pair you can form a vector such that

A_vector = (0,0,0) -> (Ax, Ay, Az)

B_vector = (0,0,0) -> (Bx, By, Bz)

Figure out the angle between the two vectors. Do this for every pair and average them out.

Hi NghiaHo,

Somehow not able to reply to that thread so continuing here,

I’m writing in Java

Matrices (3×50):

A

[ 0, 1, 2………..49

0, 1, 2…………49

0, 0, 0…………….]

B

[ 0, 1, 2………..49

0, 0, 0…………..0

0, 0, 0…………….]

for(int i=0; i< points1.rows(); i++)

centroid1.push_back(rowMean(points1.row(i)));

for(int i=0; i< points2.rows(); i++)

centroid2.push_back(rowMean(points2.row(i)));

( rowMean is a custom function, it is calculating the correct mean per row, if you want I will post the code )

Mat points1Centered = new Mat(points1.size(), points1.type());

for(int i=0; i < points1.cols(); i++)

Core.subtract(points1.col(i), centroid1, points1Centered.col(i));

Mat points2Centered = new Mat(points2.size(), points2.type());

for(int i=0; i< points2.cols(); i++)

Core.subtract(points2.col(i), centroid2, points2Centered.col(i));

Core.gemm(points1Centered, points2Centered.t(), 1, dummy, 0, H);

Core.SVDecomp(H, S, U, V);

Core.gemm(V, U.t(), 1, dummy, 0, R);

Are your points legit? Do they correspond to real 3d points with a real transform?

What do you mean by legit?

Well A is just y=x and B is just the x axis, so the angle of rotation between them should be 45 degrees around the z axis.

Is A just incrementing X,Y from 0 to 49 and B is just incrementing X?

Yes

I have now tested this fully in Octave. Octave is producing the expected result of angles : 0, 0, 45.

With these points the H in Octave is the same as the H that Java code is generating. The difference comes after svd. The results of svd are different in java OpenCV and Octave. And I don’t know why 🙁

I think the answer is actually correct even though it looks wrong. The singular values returned are [1.4725e+04, 0, 0]. The last two singular values are numerically identical (0), so the 2nd/3rd column vectors of U can be ordered in either way. In your case, they’re just swapped. If you proceed to continue with the calculation what result do you get?

Oh I see what you mean now

Oh, Well yes that makes sense. However when I calculate R in OpenCV this is the result :

[0.70710677, 0.70710677, 0;

-9.7000784e-016, -9.7000784e-016, 1;

-0.70710677, -0.70710677, -1.3717982e-015]

Determinant is 0

In Octave R comes out as

0.70711 0.70711 0.00000

-0.70711 0.70711 0.00000

0.00000 0.00000 1.00000

determinant is 1.

Can I just swap second and third rows whenever the determinant is 0? Will that work?

Actually I just realised you have a degenerate set of points. You have a line in 3d space, which has one degree of freedom to rotate around the axis. The algorithm works if your points form a triangle instead.

Correction, as long as the points aren’t collinear it should work.

not sure if someone else has commented on this, but i think there is an error in your mapping in your code. in thinking about it, you need to subtract the source centroid off the points before applying the rotation, then when you add the offset, you still need to add back the source centroid.

You have :

Y = Rotation * X + Offset

What makes better sense is

Y = Roation * (X-SourceCentroid) + Offset + SourceCentroid;

Otherwise, if the points are distant from the origin, then you are rotation about the origin an they will have a significant offset added to them.

The Rotation matrix was computed for rotation of points with their source centroid subtracted – so it only makes sense to apply them to the points of the same condition.

and in case it helps others – here’s a C# implementation of the Kabsch Algorithm (which you describe). Look up the wikipedia page for an explanation of variables. P,Q, and the centroids are computed outside this function

this uses the emgu openvc svd code for 2D rotation. Easily extensible to 3 dimentions by defining the matrixes as 3×3 and making m[1,1] to m[2,2]

{

var p = P;

var q = Q;

//1. subtract centroids

for (int i = 0; i < p.Rows; i++) {

p[i, 0] -= SourceCentroid[0, 0];

p[i, 1] -= SourceCentroid[1, 0];

q[i, 0] -= DestCentroid[0, 0];

q[i, 1] -= DestCentroid[1, 0];

}

//2. compute covariance matrix

var a = p.Transpose()*q;

//3. compute rotation matrix

/* perform svd where A = V S WT */

Matrix V = new Matrix(2, 2);

Matrix S = new Matrix(2, 2);

Matrix W = new Matrix(2, 2);

CvInvoke.cvSVD(a.Ptr, S.Ptr, V.Ptr, W.Ptr, SVD_TYPE.CV_SVD_DEFAULT);

// Deal with reflection matrix

Matrix m = new Matrix(2, 2);

m.SetIdentity(new MCvScalar(1));

m[1,1] = ((W*V.Transpose()).Det<0) ? -1 : 1;

// Comput the rotation matrix

Rotation = W*m*V.Transpose();

Offset = DestCentroid – (Rotation * SourceCentroid);

}

If you look at the code it does this but expressed differently.

woops, offset is computed incorrectly. should be

Offset = DestCentroid – SourceCentroid;

and the mapping is

Y = Rotation * (X-SourceCentroid) + Offset + SourceCentroid;

Dear Nghia,

Thank for your post!

Currently I have similar problem:

There are 2 similar objects (their sizes might be different, i.e big/small objects). From these objects, we find 2 sets of corresponding points (s1, s2) with the same number of points.

The mission is to find the transform matrix from s1 –> s2.

But the transform matrix contains only translation and rotation, not scaling!

Actually, the complete transform should include scaling. But in my problem, original objects (obj1, obj2) have different sizes.

And I don’t need scaling because I want to know how different they are.

I see in your solution, after transformed, two sets are nearly identical to each other.

For my problem, after transformed, two sets are uniform, but not exactly the same.

Could you please help?

Thank you in advance!

Tom

Hmm, I never worked with points that involved scaling so I’m not of any help. Maybe someone else on this site has.

Thank for your comment!

When I test your python code with my data, t is not the column vector (2×1), it has 2 columns (2×2).

My data is 2D matrix (12×2)

Do you have any suggestion?

Thanks

I found the bug. I’m using array, not matrix, so I got wrong t.

It’s fixed, thanks,

many thanks for your simple explanation and codes,

I will use it in my dissertation, I hope it gives me the best.

if I use I want to have your name in the code, then I should write Nghia Ho?

be successful in whole your life.

regards

Nghia Ho will be fine, thanks.

ok,

but why transpose in m.file is on A, while you put it on B in formula for H?

The code uses a different matrix convention, Nx3, but the formula uses 3xN.

thank you.

Hello,I have a problem about ‘t’,translation vector I hope you help me:

in my project I can obtain pose of e.g points with respect to one camera(x,y,z),by help of stereo vision.

then I want to know R&t of one pose of a plate with respect to another pose of it. I use your code and I can survey correctness of ‘R’ by obtaining the angles(around x,y,z) with this formula:

http://persian-expert.com/up2/uploads/1438845512991.png

for verification my target is a board with a triangle on it,the points of corner of triangle are our markers.

but I have problem with ‘t’, don’t know how to verify it?because I don’t know which distance it represents, for more explanation please go to:

http://www.persian-expert.com/up2/uploads/1438846564971.jpg

camera in the image, is left camera(the reference). we have just rotation around y axis. I did some measurements in practice(r1,r2,…in image obtained, by stereo) and also I know the angle and distance of posesA&B to each other priori(for distance e.g I change the place of one corner of rectangle in arbitrary but known distance on the board, and for rotation I rotate the board itself in known angle), and the aim is just verification of my project.

questions:

1.when I change point sets on the board(e.g mean points of triangle’s lines) , ‘t’ changes (consider the poses(poseA&poseB) don’t change just markers or three points on the board change), why t changes?

2.’t’ is distance between origin of coordinates in two coordinate frames, isn’t it?as I don’t know origin for poseA and poseB, how to verify t?

at last what does ‘t’ represent?

sorry because of long explanation.

thank you in anticipation.

What you wrote is kind of confusing to read. But if you want to know the translation between the origin of the two sets, use -R’t (negative R transpose multiply t).

I tried to explain with details.

ok let me please:

what does T vector show?

I read that it is distance between origin of two set points.

thanks

The transform applies a rotation first, then translation (t) after to align the set of points. So you can’t read ‘t’ directly to measure distance. You need to use -R’*t.

thank

is it possible to use your code for visual odometry?

if possible how do i apply it?

Not really, visual odometry is more 2D<->3D pose estimation. This method is only really suitable for 3D<->3D.

Thanks a lot. Do you have a book reference for this?

I mention it at the beginning, A Method for Registration of 3-D Shapes’, by Besl and McKay, 1992.

please help me in this case

the target point set is generated from rotation and translation of the origin point set.

but the algorithm seems not giving the correct answer.

origin:

Point3D(-12.267, -76.253, 21.673),

Point3D(-12.711, -76.638, 20.299),

Point3D(-13.713, -75.658, 19.711),

Point3D(-14.463, -75.997, 18.788),

target:

Point3D(-12.137, -76.147, 21.373),

Point3D(-12.362, -76.959, 20.14),

Point3D(-12.498, -76.172, 18.847),

Point3D(-12.624, -77.053, 17.755),

what’s the problem

please moderate this:

hello, please register my question very soon.

I have a problem in the reason of using SVD method,

I understand the method up to covariance (H),but why we use SVD? snd then why we use R=V*U’?

i just want to write very short reason in my dissertation,so I ask this. the code was useful at all.

thank you

Look at the paper I reference at the beginning of the blog post. I just followed their instruction.

Hi,

amazing post, thanks you very much for sharing. I have successfully been using this code for some time now, but I also fell in the ‘reflection trap’. If no reflection is encountered , the RMSE is for my data around 1e-09 and the reconstruction is optimal. However, when I find a reflection, the reconstruction is somehow not correct.

Example where everything goes right

pA = [[ 0.41 0. -0.28991378]

[-0.41 0. -0.28991378]

[ 0. -0.41 0.28991378]

[ 0. 0.41 0.28991378]]

pB = [[ 0.86974133 0.28991378 0.86974133]

[ 0.28991378 0.86974133 0.86974133]

[ 0.86974133 0.86974133 0.28991378]

[ 0.28991378 0.28991378 0.28991378]]

Rotation

[[ 0.70710678 -0.70710678 0. ]

[-0.70710678 -0.70710678 0. ]

[ 0. 0. -1. ]]

Translation

[[ 0.57982756]

[ 0.57982756]

[ 0.57982756]]

Reconstruction

[[ 0.86974134 0.28991378 0.86974134]

[ 0.28991378 0.86974134 0.86974134]

[ 0.86974134 0.86974134 0.28991378]

[ 0.28991378 0.28991378 0.28991378]]

RMSE: 4.47992254088e-09

I there is a reflection, I get

Points A

[[ 0.41 0. -0.28991378]

[-0.41 0. -0.28991378]

[ 0. -0.41 0.28991378]

[ 0. 0.41 0.28991378]]

Points B

[[ 0.28991378 0.86974133 0.86974133]

[ 0.86974133 0.28991378 0.86974133]

[ 0.86974133 0.86974133 0.28991378]

[ 0.28991378 0.28991378 0.28991378]]

Rotation

[[-0.70710678 -0.70710678 0. ]

[ 0.70710678 -0.70710678 0. ]

[-0. -0. 1. ]]

Translation

[[ 0.57982756]

[ 0.57982756]

[ 0.57982756]]

Reconstruction

[[ 0.28991378 0.86974134 0.28991378]

[ 0.86974134 0.28991378 0.28991378]

[ 0.86974134 0.86974134 0.86974134]

[ 0.28991378 0.28991378 0.86974134]]

RMSE: 0.579827557986

And you see that the Reconstruction does not match Points B.

Interestingly, the RMSE is equal to the components of the translations.

I do handle the reflection as suggested

if linalg.det(R) < 0:

Vt[2,:] *= -1

R = Vt.T * U.T

Any ideas on this? Cheers!

Guido

I haven’t spent too much verifying the reflection correction. Maybe someone will know the answer. What is the effect of applying the reflection correction?

Do you have an executable or excel file that I can use to get the offset and rotation between 2 sets of 3D points.

No, just whatever Octave/Python script I posted.

I think there is a typo in the documentation of the special reflection case. You write “This is addressed by checking the determinant of V …” while the code checks the determinant of R:

if determinant(R) < 0

One more thing, when given the following input data:

A = [0, 0, -25; 0, 0, 25; 25, 0, 0];

B = A;

the reflection case handling suggested by Nick Lambert actually returns a 180° rotation even though the data sets are equal.

Hi, nice article. I am using two 3D point cloud of different sizes. Fixed one is 3×40256 and moving one is 3×40096 (stanford Bunny). Can you please elaborate how to find closest point between these two ?

For each point in A, find the nearest point in B, find optimal [R,t], apply it, and repeat until convergence.

I tried something a bit different and it didn’t work. I would like to hear your thoughts as to why it didn’t.

In your program, you have..

H = (A – repmat(centroid_A, N, 1))’ * (B – repmat(centroid_B, N, 1));

I tried..

H = (B – repmat(centroid_B, N, 1))’*pinv((A repmat(centroid_A, N, 1))’) ;

That mathematically makes no sense.

Thank you for this brilliant article. It helped me alot :).

Hi,

I am trying to develop an algorithm for robotic handling of cuboidal baggage. I want to limit my pose estimation problem to a 2d one by considering one rotation angle theta, and two translation parameters .. Since cuboidal objects exhibit 180 degree rotational symmetry, there are finite poses possible (pose classes). My goal is to implement the chamfer matching process by minimizing the euclidean distance between the stored pose template shapes and the current image scene. I would like to seek your advice on the following :

1. Do you think the idea is implementable for solving the three pose parameters mentioned above in 2d ?

2. I understand that chamfer matching is variant to scale changes , so how can i deal with scale changes between the template and the image scene ?

I look forward to hearing from you. Thanks for your time !

The chamfer method looks promising. I would start with a brute force method by trying templates of different rotation and scale. If that works you can look at ways to speed it up later.

Hi,

This seems to be PCA not ICP . Why did you say the method is based on the paper of 1992?

It’s a single step of ICP, and I got the implementation from the 1992 paper.

This algorithm is from “Least square fitting of two 3-D point sets”. The method is definitely not ICP.

Never said it was. It’s one of the four methods you can use in conjunction with ICP from Besl and McKay’s paper, and that’s where I got it from.

Hi,

Firstly, thanks for your post. it is very useful.

I have a problem.I have two set of 4 points.you can see following lines

A = [0,0,0;

0,0,1;

0,1,0;

0,1,1]

B = [10,10,10;

10,10,20;

10,20,10;

10,20,20]

When I run your octave code , I get results like

Rotation :

1 0 0

0 1 0

0 0 1

Translation :

10.000

14.500

14.500

Then, I use A matrix rows as test points

Results like :

A[ 0 0 0] => B[10, 14.5, 14.5]

A[ 0 1 0] => B[10, 15.5, 14.5]

There is a mistake. Bu I can’t find anything.

am I getting something wrong?

The algorithm doesn’t do scaling. Your A and B have different scaling, the algorithm will fail to give meaningful results.

Thank you ver muck your answer.

Can you advice a algorithm that solve my problem ?

http://nghiaho.com/?p=2208

Scroll down to the partial affine section. See if that works for you.

Actually, that only applies to 2D points.

If your points have a 1:1 mapping then you can undo the scaling of one of the point set. Translate the point to the centroid, shrink them so all the distances to the centroid are similar to the other point set. Then add the scaling back in later.

Can you give more detail if you have a time please.

Thank you,

email me directly if you get stuck

Great tutorial, thanks.

It works great as long as the shapes are similar (low noise).

Do you know maybe a robust way to superimpose two point-clouds of equal size and fixed assignment but varying shape?

Can’t say I do. Varying shape seems quite open ended, in terms of the error function.

I don’t know what you mean by “more robust”. If I understand the method he is using correctly, then it minimizes the RMS error between corresponding points from either point cloud. It can accept any pair of ordered point clouds (of the same size), regardless how similar they are to each other. Think of it this way: