Last Updated on June 20, 2014 by nghiaho12

I’ve been mucking around with video stabilization for the past two weeks after a master’s student got me interested in the topic. The algorithm is pretty simple yet produces surprisingly good stabilization for panning videos and forward-moving videos (e.g. on a motorbike looking ahead). The algorithm works as follows:

- Find the transformation from the previous to the current frame using optical flow, for all frames. The transformation consists of only three parameters: dx, dy, da (angle). Basically, a rigid Euclidean transform: no scaling, no shearing.

- Accumulate the transformations to get the “trajectory” for x, y, angle, at each frame.

- Smooth out the trajectory using a sliding average window. The user defines the window radius, which is the number of frames on each side of the current frame used for smoothing.

- Create a new transformation such that new_transformation = transformation + (smoothed_trajectory - trajectory).

- Apply the new transformation to the video.
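Steps 2 to 4 above can be sketched without OpenCV. This is a minimal illustration, not the code in videostab.cpp: it assumes Step 1 has already produced one (dx, dy, da) triple per frame pair (for example read from the 2x3 matrix returned by estimateRigidTransform), and the names Motion, Pose, accumulate, smooth and correct are hypothetical. Warping each frame with the corrected transform (Step 5) is left to OpenCV's warpAffine.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types for this sketch (not the names used in videostab.cpp).
struct Motion { double dx, dy, da; };  // previous-to-current frame motion (Step 1 output)
struct Pose   { double x, y, a; };     // accumulated trajectory value

// Step 2: running sum of the frame-to-frame motions.
std::vector<Pose> accumulate(const std::vector<Motion>& motions) {
    std::vector<Pose> traj;
    Pose p{0.0, 0.0, 0.0};
    for (const Motion& m : motions) {
        p.x += m.dx;
        p.y += m.dy;
        p.a += m.da;
        traj.push_back(p);
    }
    return traj;
}

// Step 3: centred sliding average over +/- radius frames. The window is
// truncated at both ends, so fewer frames are averaged there.
std::vector<Pose> smooth(const std::vector<Pose>& traj, int radius) {
    assert(radius >= 0);
    const long n = static_cast<long>(traj.size());
    std::vector<Pose> out;
    for (long i = 0; i < n; ++i) {
        Pose sum{0.0, 0.0, 0.0};
        int count = 0;
        for (long k = i - radius; k <= i + radius; ++k) {
            if (k < 0 || k >= n) continue;  // signed index: no wrap-around
            const Pose& q = traj[static_cast<std::size_t>(k)];
            sum.x += q.x;
            sum.y += q.y;
            sum.a += q.a;
            ++count;
        }
        out.push_back({sum.x / count, sum.y / count, sum.a / count});
    }
    return out;
}

// Step 4: new_transformation = transformation + (smoothed_trajectory - trajectory).
std::vector<Motion> correct(const std::vector<Motion>& motions,
                            const std::vector<Pose>& traj,
                            const std::vector<Pose>& smoothed) {
    assert(motions.size() == traj.size() && traj.size() == smoothed.size());
    std::vector<Motion> out;
    for (std::size_t i = 0; i < motions.size(); ++i) {
        out.push_back({motions[i].dx + (smoothed[i].x - traj[i].x),
                       motions[i].dy + (smoothed[i].y - traj[i].y),
                       motions[i].da + (smoothed[i].a - traj[i].a)});
    }
    return out;
}
```

A radius of 30 would correspond to the ±30-frame window used in the example video. With a radius of 0 the smoothed trajectory equals the original one, so the corrected transforms equal the input transforms and nothing changes.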

Here’s an example video of the algorithm in action using a smoothing radius of ±30 frames.

We can see what’s happening under the hood by plotting some graphs for each of the steps mentioned above on the example video.

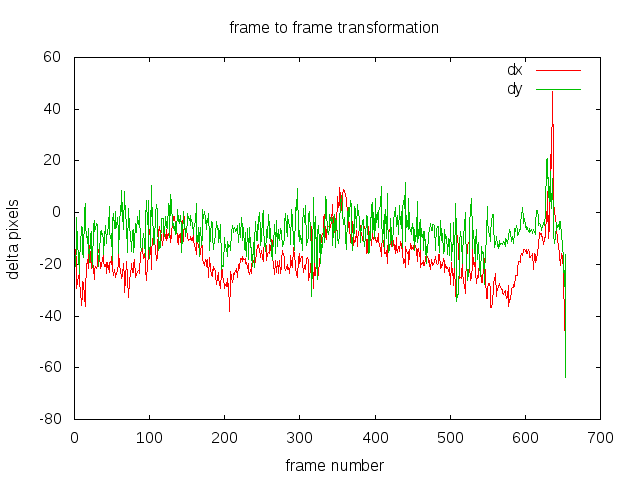

Step 1

This graph shows the dx, dy transformation from the previous to the current frame, at each frame. I’ve omitted da (angle) because it’s not particularly interesting for this video, since there is very little rotation. You can see it’s quite a bumpy graph, which correlates with our observation that the video is shaky, though still orders of magnitude better than Hollywood’s shaky-cam effect. I’m looking at you, Bourne Supremacy.

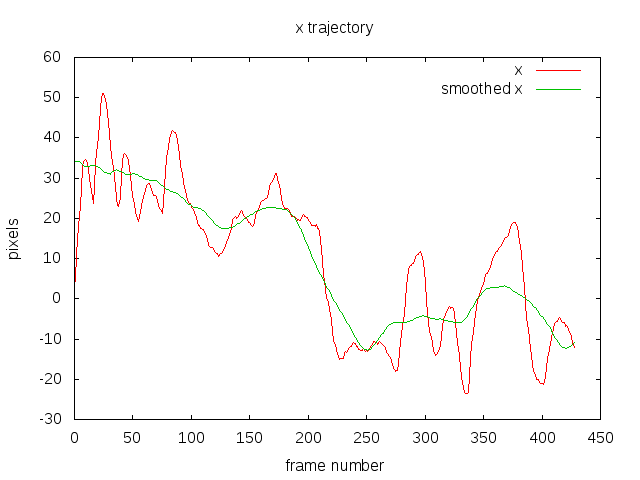

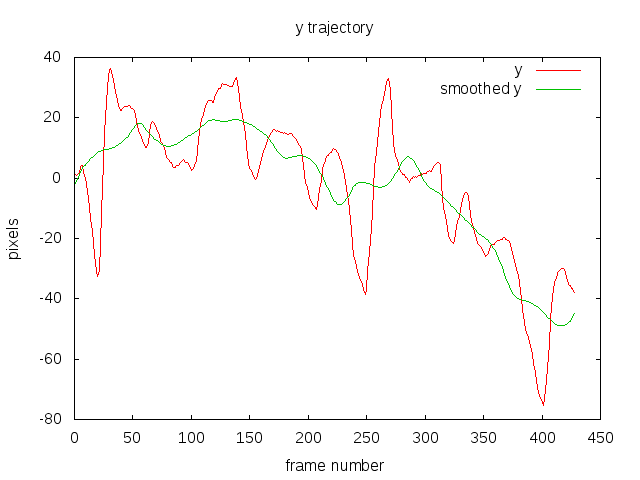

Steps 2 and 3

I’ve shown both the accumulated x and y and their smoothed versions, so you get a better idea of what the smoothing is doing. Red is the original trajectory and green is the smoothed trajectory.

It is worth noting that the trajectory is a rather abstract quantity that doesn’t necessarily have a direct relationship to the motion induced by the camera. For a simple panning scene with static objects it probably relates directly to the absolute position of the image, but for scenes with a forward-moving camera, e.g. on a car, it’s hard to see any such relationship.

The important thing is that the trajectory can be smoothed, even if it doesn’t have any physical interpretation.

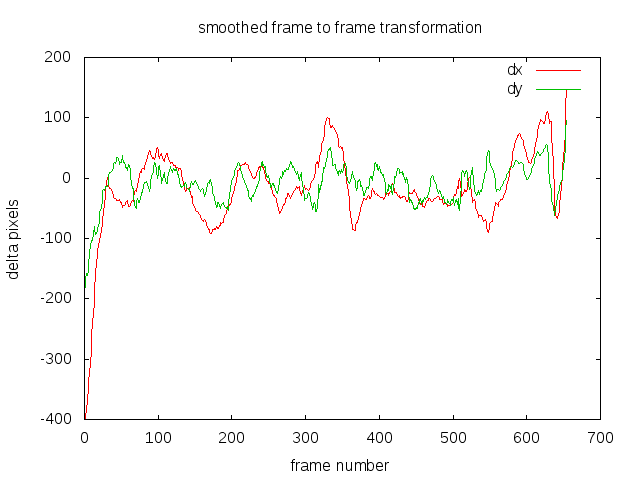

Step 4

This is the final transformation applied to the video.

Code

videostabKalman.cpp (live version by Chen Jia using a Kalman Filter)

You just need OpenCV 2.x or above.

Once compiled, run it from the command line via

./videostab input.avi

More videos

Footage I took during my travels.

Can this be done for a live stream from a webcam, where you don’t know the number of frames?

No. You would need to use a slightly different technique that smooths the trajectory on the fly. I haven’t coded this yet.

Ok. Thanks!

Hey, I am also trying this with live streaming, but I’m not getting any output. Could you please help me?

This code is not designed for live streaming. You’ll have to figure out how to adapt it. I never intended it for live use and don’t have plans to.

This code doesn’t run for a stored video either. The output window doesn’t appear.

cout << "./VideoStab [C:\\shaky car.avi] " << endl;

I gave this path but I’m not getting a stabilized video.

What else do I need to do?

Umm how exactly did you run the program?

I pasted your code and gave the path of my video here:

cout << "./VideoStab [C:\\shaky car.avi] " << endl;

I have already added the required directories in this project’s properties.

Is there anything else I have to add? Please tell me.

How proficient is your C++? That code does nothing other than print to the screen. Use my original code, compile it, and open a command prompt. Find where videostab.exe is located and manually type VideoStab “C:\shaky car.avi” at the prompt.

I typed VideoStab “C:\shaky car.avi” at the prompt (videostab.exe), but after pressing ENTER the prompt window disappears and the video still doesn’t load.

Compile the code in Debug mode. If there is something wrong it should assert an error.

It’s running. Thank you very much.

Hi,

Looking nice. Unfortunately, with my videos, I get an error at some point in every video I try:

(… 53191 good frames…)

Frame: 35192/35430 – good optical flow: 1

OpenCV Error: Assertion failed ((npoints = prevPtsMat.checkVector(2, CV_32F, true)) >= 0) in calcOpticalFlowPyrLK, file /Users/sir/opencv/modules/video/src/lkpyramid.cpp, line 604

terminate called after throwing an instance of ‘cv::Exception’

what(): /Users/sir/opencv/modules/video/src/lkpyramid.cpp:604: error: (-215) (npoints = prevPtsMat.checkVector(2, CV_32F, true)) >= 0 in function calcOpticalFlowPyrLK

Abort trap

Any idea?

http://stackoverflow.com/questions/10902881/cvgoodfeaturestotrack-doesnt-return-any-features

I guess… ?

I did not anticipate frames that have no features in them. Try replacing the code with this:

    Mat T;
    goodFeaturesToTrack(prev_grey, prev_corner, 200, 0.01, 30);

    if(prev_corner.size() > 10) {
        calcOpticalFlowPyrLK(prev_grey, cur_grey, prev_corner, cur_corner, status, err);

        // weed out bad matches
        for(size_t i=0; i < status.size(); i++) {
            if(status[i]) {
                prev_corner2.push_back(prev_corner[i]);
                cur_corner2.push_back(cur_corner[i]);
            }
        }

        // translation + rotation only
        T = estimateRigidTransform(prev_corner2, cur_corner2, false); // false = rigid transform, no scaling/shearing
    }

    // in rare cases no transform is found. We'll just use the last known good transform.
    if(T.data == NULL) {
        last_T.copyTo(T);
    }

Thanks a lot. I tried that but it didn’t help, so I debugged the error carefully and finally found that it goes back to QTKit.

At a random point, usually after some thousands of frames but deterministically at the same frame each time, the video stream input turns black on Mac OS and doesn’t recover; only black frames are loaded from there on. Therefore, no more features are found. This happens for various input movie formats, so it is not format-specific.

Unfortunately, like this, opencv is unusable for me under Mac OS.

(…)

Features matrix size : 122

STATUS SIZE 122

Good Matches : 122

Frame: 2536/10000

Features matrix size : 118

2014-04-29 16:57:03.071 QTKitServer[30206:903] *** __NSAutoreleaseNoPool(): Object 0x11bf10 of class NSCFArray autoreleased with no pool in place – just leaking

2014-04-29 17:03:07.418 QTKitServer[30206:903] *** __NSAutoreleaseNoPool(): Object 0x20df50 of class NSCFArray autoreleased with no pool in place – just leaking

The frames are black from there on, and then the call

    calcOpticalFlowPyrLK(A, B, pA, pB, status, noArray(), Size(21, 21), 3,
                         TermCriteria(TermCriteria::MAX_ITER,40,0.1));

inside

    cv::Mat cv::estimateRigidTransform( InputArray src1, InputArray src2, bool fullAffine )

in lkpyramid.cpp crashes with an exception.

Still, thanks for the code snippets, I really appreciate what you do 😉

Well, that’s a shame. My untested code snippet is meant to protect against a black frame with no features. When that occurs, it just uses the last good transform.

Well, your code does this when it runs:

1. Count the total number of frames in the video and find the good optical flow between successive frames for the whole video.

2. Only after finding the optical flow for the whole video, pop up the two output videos: one is the shaky input video and the other is the stabilized video.

BUT I WORK WITH LIVE STREAMING VIDEO, where

1. I have to find the optical flow between frames and simultaneously pop up the stabilized output video. (I know your code is for stored video; when we run it with live video, it keeps counting frames and calculating the optical flow, never finishes, and never shows the output window.)

I’m trying; could you help me a little with this part? Thanks in advance.

I guess you can re-use most of the code and only average from [past frame, current frame], instead of [past frame, future frame].
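A minimal sketch of that suggestion, assuming the rest of the pipeline stays the same and only the smoothing step changes: each trajectory component (x, y, angle) gets its own running average over the current frame and the previous `radius` frames, so no future frames are needed. The class name CausalAverage is hypothetical. A causal average lags behind intentional camera motion, so its correction is not identical to that of the centred window.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical helper: causal moving average for ONE trajectory component.
// It averages the current value and up to `radius` previous values only,
// so it can run on a live stream without future frames.
class CausalAverage {
public:
    explicit CausalAverage(std::size_t radius) : radius_(radius) {}

    // Feed the current trajectory value; returns the smoothed value.
    double update(double value) {
        window_.push_back(value);
        sum_ += value;
        if (window_.size() > radius_ + 1) {  // drop the oldest sample
            sum_ -= window_.front();
            window_.pop_front();
        }
        assert(!window_.empty());
        return sum_ / static_cast<double>(window_.size());
    }

private:
    std::size_t radius_;
    std::deque<double> window_;
    double sum_ = 0.0;
};
```

One instance per component would be updated once per incoming frame, and its result used as smoothed_trajectory for that newest frame. The running sum can pick up rounding error over very long streams; recomputing it from the window now and then avoids that.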

I am really interested to see how your algorithm works, but I could not find a suitable video to test it with. Please send me the videos you posted above, or some other suitable video, so I can test it myself. Thank you.

http://nghiaho.com/uploads/hippo.mp4

Thank you very much. I used the Visual Studio environment with OpenCV. It is an amazing and very useful algorithm. I am studying it, as I am new to this field. Could you also send the other two videos, please?

http://nghiaho.com/uploads/Vietnam_Kim_Long2.avi

Can’t seem to find where the other video is uploaded. It’s trivial to find a video to use on Youtube. Just search up any animal footage taken by an amateur.

Thanks

Hi Sanjay,

Can you please share the Visual Studio OpenCV setup you used? I am also interested in understanding this algorithm, and I am new to this field too.

Hi… Good work.

But be careful: estimateRigidTransform also uses a uniform scaling. See:

http://docs.opencv.org/modules/video/doc/motion_analysis_and_object_tracking.html?highlight=estimaterigidtransform#Mat%20estimateRigidTransform%28InputArray%20src,%20InputArray%20dst,%20bool%20fullAffine%29

Good point, missed that one!

That means estimateRigidTransform is not actually a “Rigid Transform”, in fact it is a “Similarity Transform”.
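To see how much scale ends up in the estimate, the 2x3 matrix can be split into its parameters. With fullAffine = false the matrix has the form [s*cos(a), -s*sin(a), dx; s*sin(a), s*cos(a), dy], so the angle follows from atan2 of the first column and the scale from that column's length. The sketch below uses a plain array instead of cv::Mat, and the names Affine2x3, Similarity, decompose and rigid are hypothetical. rigid() rebuilds a scale-free matrix from dx, dy and the angle, which is one way to drop the scale term before warping.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types: a row-major 2x3 matrix (same layout as the 2x3 result
// of estimateRigidTransform) and its similarity parameters.
struct Affine2x3 { double m[2][3]; };

struct Similarity { double dx, dy, da, scale; };

// Split [ s*cos(a)  -s*sin(a)  dx ]
//       [ s*sin(a)   s*cos(a)  dy ]  into (dx, dy, a, s). Assumes no shear.
Similarity decompose(const Affine2x3& T) {
    Similarity p;
    p.dx = T.m[0][2];
    p.dy = T.m[1][2];
    p.da = std::atan2(T.m[1][0], T.m[0][0]);
    p.scale = std::hypot(T.m[0][0], T.m[1][0]);
    assert(p.scale > 0.0);  // a zero first column means a degenerate matrix
    return p;
}

// Rebuild a scale-free (rigid) matrix from dx, dy and the angle only.
Affine2x3 rigid(double dx, double dy, double da) {
    const double c = std::cos(da), s = std::sin(da);
    return Affine2x3{{{c, -s, dx}, {s, c, dy}}};
}
```

A matrix built by rigid() always decomposes back to a scale of 1, whatever the angle.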

Thank you very much for your code. I have modified the smoothing to use a Kalman filter, so it can be used for live streams. How can I send the code to you?

Feel free to email me and I’ll post it up. Don’t forget to put your name in the cpp file so you get credit!

That’s exactly what I’m looking for!

Any news on that code doing live stab?

And what do you think about the videostab module in OpenCV?

Code is up in the download link. I haven’t tried OpenCV’s videostab module, so I can’t comment.

Hi Chenjia,

How did you manage to do the smoothing with a Kalman filter for a live stream?

Can you please share the code, or email me at mohan.bankar@gmail.com?

Check the download link

Hi nghiaho,

Can you please share links to the videos above, or any other videos you used for video stabilization, so that I can test them myself?

Check the download link

Thanks a lot for sharing the shaky videos 🙂 The stabilized videos look very good. (Y)

Where is the download link?

On the page

Hi nghiaho,

After warping the frames with warpAffine, how can I get rid of the black borders? I am trying to fill in the missing areas of the frames in a video. By default, OpenCV provides a few border extrapolation methods such as REFLECT, REPLICATE and WRAP.

Is it possible to fill the missing areas from neighboring frames, so that the completed video looks pleasant? Any suggestions or references would be appreciated. Thank you in advance.

I believe you can try using several past (or future) images to fill in the gap, by aligning them to the current image and filling in whatever black pixels you can.
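A minimal sketch of that idea, assuming the neighbouring frames have already been warped into the current frame's coordinates (for example with the same accumulated transforms) and that each frame carries a mask of valid pixels. Frames are plain grayscale arrays here rather than cv::Mat, and Frame and fillFromNeighbours are hypothetical names. It copies the first valid pixel found, nearest neighbour first; it does not blend seams or handle moving objects.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical frame type: grayscale pixels plus a validity mask.
struct Frame {
    std::vector<int>  pixels;   // row-major grayscale values
    std::vector<bool> valid;    // false = no source pixel (black border)
};

// Fill invalid pixels of `current` from neighbours that are ALREADY aligned
// to it. `aligned` is ordered nearest frame first.
void fillFromNeighbours(Frame& current, const std::vector<Frame>& aligned) {
    assert(current.pixels.size() == current.valid.size());
    for (const Frame& f : aligned) {
        assert(f.pixels.size() == current.pixels.size() &&
               f.valid.size() == current.valid.size());
    }
    for (std::size_t i = 0; i < current.pixels.size(); ++i) {
        if (current.valid[i]) continue;         // keep real pixels untouched
        for (const Frame& f : aligned) {        // nearest neighbour first
            if (f.valid[i]) {
                current.pixels[i] = f.pixels[i];
                current.valid[i] = true;
                break;
            }
        }
    }
}
```

Pixels that no neighbour covers stay invalid, so a final border crop or extrapolation step may still be needed.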

Hi Nghia Ho,

Thank you for your nice work.

I have run into the following issues; could you please help me?

1. I could not test your code with a long video (an AVI file of about 2 minutes).

An error occurred. It seems to be out of memory?

How long can the video be?

2. I could not test your code with an MP4 video.

3. Could you please provide the original paper for the algorithm you used?

Thank you in advance!

Hi,

The algorithm loads all the video frames into memory; that is why. MP4 support depends on ffmpeg, so make sure your ffmpeg supports it.

I don’t have any original paper, it was kind of based on intuition.

Hello, sorry, this is a bit of a newbie question. I cannot get videostab.cpp to compile. I think I may be missing the links to the OpenCV libraries in the gcc compile command, but I’m really not sure.

Would appreciate if anyone can help.

S

Error?

I got it working thanks.

Hi, fantastic code. I am a newbie to OpenCV. I have tried it and it works, but when I display the final images of the stabilized bike video, the image drifts from the upper left to the lower right. Why? Weren’t the images supposed to be steady?

Video example?

here: http://tinypic.com/player.php?v=atvvis%3E&s=8#.VAQD-Ut-_8s

It smooths the motion using images before and after the current frame. Towards the end there are more images before than after, so the window is unbalanced, and that is why you see that effect. Same story with the starting frames.

Now it makes sense! thanks!

In this code, the trajectory smoothing does not handle the left and right ends of the series. One option is to pad the time series and then apply the average smoothing.
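One way to pad, sketched for a single trajectory component: repeat the first and last values (edge replication) so that every output averages exactly 2*radius+1 samples. The function name smoothPadded is hypothetical, and replication is only one choice; mirroring the series is another.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: centred moving average over +/- radius samples, with
// the series conceptually padded by repeating its first and last values.
std::vector<double> smoothPadded(const std::vector<double>& v, int radius) {
    assert(radius >= 0);
    const long n = static_cast<long>(v.size());
    std::vector<double> out;
    if (n == 0) return out;
    for (long i = 0; i < n; ++i) {
        double sum = 0.0;
        for (long k = i - radius; k <= i + radius; ++k) {
            // Clamping the index is the same as replicating the edge values.
            const long idx = k < 0 ? 0 : (k >= n ? n - 1 : k);
            sum += v[static_cast<std::size_t>(idx)];
        }
        out.push_back(sum / (2 * radius + 1));  // always a full window
    }
    return out;
}
```

Compared with the truncated window, the first and last outputs are pulled towards the end values instead of being averaged over fewer frames.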

Thank you for creating an excellent algorithm :) Is it possible to download a compiled version of the application?

I’m using Linux, so no. You’ll have to compile yourself.

I’m new to OpenCV and this code works out of the box. Good job and thank you! This gives me a good starting point for my own experiments.

Glad you found it useful 🙂

Hello, thanks for your code. I am running it in the Visual Studio environment. When I run it, it suddenly crashes and says “videostab.exe has triggered a breakpoint.”

        if (pBlock == NULL)
            return;

        RTCCALLBACK(_RTC_Free_hook, (pBlock, 0));

        retval = HeapFree(_crtheap, 0, pBlock);
        if (retval == 0)
        {
            errno = _get_errno_from_oserr(GetLastError());
        }
    }

The breakpoint is on this line: if (retval == 0)

What should I do now?

Thanks a lot for your help

Looks like it is out of memory. My original code is not meant for long video because it loads it all in memory. Try the Kalman filter version that was submitted by another user.

Thanks for your reply. My system has 8 GB of memory. I have another system running Linux, also with 8 GB, and the code runs there without problems, so I think the problem is related to Visual Studio.

But if anybody can tell me how to run it on Windows, I would be very happy to know!

Visual Studio might be generating a 32-bit executable instead of a 64-bit one.

Please help me compile your code videostab.cpp with OpenCV. I have downloaded it but cannot compile it, as I’m new to OpenCV.

What have you tried?

Initially I downloaded OpenCV and extracted the zip folders, but I have not installed anything. Then I tried to compile videostab.cpp with VC++ and TC++, but it generates an error: the program could not find the opencv2 folder, which I have included. So without compiling it I cannot run it at the cmd prompt.

Tell me the steps so that I can run it in a Win7 environment.

You’ll have to look at some tutorial to set it up in a win7 environment. I use Linux exclusively.

OK. Then please tell me the steps for running it in a Linux environment. I already have the OpenCV folder, which I extracted from the downloaded zip file. Do I need to install anything else before compiling the cpp file? Can I simply compile it with the cc command in Linux?

Assuming your OpenCV is installed correctly on Linux (steps on OpenCV website), then you can do

g++ videostab.cpp -o videostab -lopencv_core -lopencv_highgui -lopencv_imgproc -lopencv_video -O2

Hey, actually I’m not good at C. I would like a video stabilization program for any existing video on our PC. Please, can you provide that as a complete C program?

Have you looked at Deshaker? I don’t have time to make a Windows executable that works on a full video.

I tried some videos taken from YouTube with this algorithm, but they did not work. Other than the two videos you posted, I was unable to find good sample videos to test this algorithm. Please give some links to sample videos from YouTube or somewhere else, so I can download and test them. Please post at least 10 links.

I don’t have any links on hand. I’m occupied with other projects at the moment.

Dear nghiaho,

Please tell me why the last frame is not processed. What is the theory behind that?

Your code snippet is given below.

while(k < no_of_frames-1) { // don't process the very last frame, no valid transform

It’s something along the lines of this. If I have frames 1, 2, 3 then I have transforms between

1 and 2

2 and 3

So only 2 transforms for 3 frames.

Thanks

Dear nghiaho,

How did you plot the graphs posted on this page? What is the software you used to plot the graphs and how did you do that? Thanks

gnuplot

The code outputs data to text files that can be plotted.

Dear nghiaho,

There are two kinds of motion in a video sequence: desired motion and jitter. For example, a moving car is desired motion. It is important to stabilize only the jitter, not the desired motion. Please tell me which line or lines in your code differentiate desired motion from jitter. Is it done in the estimateRigidTransform() method?

The code doesn’t really distinguish either of these. It just tries to find the scene motion that’s best described by the optical flow. This is done via estimateRigidTransform.

Hi there, I’m working on a project and I have a couple of questions about your code:

1. The two identifiers CV_CAP_PROP_FRAME_COUNT (line 75) and CV_CAP_PROP_POS_FRAMES (line 205) are ‘undefined’ according to my IDE (Visual Studio). What can I do about this?

2. When I compile in Debug mode, I get this error:

warning: Error opening file (../../modules/highgui/src/cap_ffmpeg_impl.hpp:537)

OpenCV Error: Assertion failed (scn == 3 || scn == 4) in cv::cvtColor, file C:\builds\master_PackSlave-win64-vc12-shared\opencv\modules\imgproc\src\color.cpp, line 3480.

Can you tell me more about this? I’m totally new to OpenCV and I hope you can help.

Regards

This is probably a difference in OpenCV version. Try cv::CAP_PROP_FRAME_COUNT as an alternative. If that doesn’t work you’ll need to dig up the documentation for your version and see how it’s done.

The last error might be because the video is not being opened correctly; it’s hard to tell without a line number within my code.

Thank you for your help, it worked wonderfully! Another question, though: how can I save the output video? I mean only the stabilized one.

Again, keep up your amazing work !

Regards

Towards the end of videostab.cpp there are some commented-out lines that write out JPEG images.

Is it a Multi-Trajectory Mapping and Smoothing model?

What’s the transformation of the frame?

Please send me an explanation.

I have no idea what it’s called. I just wrote this over a weekend; the algorithm seemed obvious in my head at the time. The transformation of the frame is described in steps 2 and 3 of my blog post. The transform is the difference between the current trajectory and the smoothed trajectory.

Did you use NetBeans or Eclipse for this program?

Codeblocks

Hi, I have a problem in line 63, and I don’t know why

Expression: Cap.isOpened()

It either can’t find the file or can’t open the file (codec not supported).

Thank you for your video.

I am confused by two lines and need your help.

First, in this call: “goodFeaturesToTrack(prev_grey, prev_corner, 200, 0.01, 30);”, how did you choose the maximum number of corners, the quality level and the minimum distance, which are 200, 0.01 and 30 respectively in your code?

Second, these lines:

    for(size_t i=0; i < trajectory.size(); i++) {
        double sum_x = 0;
        double sum_y = 0;
        double sum_a = 0;
        int count = 0;

        for(int j=-SMOOTHING_RADIUS; j <= SMOOTHING_RADIUS; j++) {
            if(i+j >= 0 && i+j < trajectory.size()) {
                sum_x += trajectory[i+j].x;
                sum_y += trajectory[i+j].y;
                sum_a += trajectory[i+j].a;

                count++;
            }
        }

Would you mind explaining this more clearly? Why is it i+j?

Thank you very much

For goodFeaturesToTrack, it’s a maximum of 200 corners, 0.01 is a quality threshold (check the OpenCV manual), and 30 is the minimum pixel distance between features.

The second one smooths using both past and future data. It looks back N frames and forward N frames, where N = SMOOTHING_RADIUS.

Yes, that’s what I wanted to ask: how did you choose those values for the maximum corners, quality level and pixel distance?

Thanks.

I need the features to be spread over the downsized image, not too close to each other. Those values were picked to meet this goal.

Hi Ho,

When I try your code with my video, it shows me some errors:

OpenCV Error: Assertion failed (ssize.area() > 0) in resize, file c:/my/ceemple/opencv/modules/imgproc/src/imgwarp.cpp, line 2664

This application has requested the Runtime to terminate it in an unusual way.

Please contact the application’s support team for more information.

terminate called after throwing an instance of ‘cv::Exception’

what(): c:/my/ceemple/opencv/modules/imgproc/src/imgwarp.cpp:2664: error: (-215) ssize.area() > 0 in function resize

When I debug it, my code gets stuck at “if(i+j >= 0 && i+j < trajectory.size())” and it shows a warning that the comparison of an unsigned integer is always true.

Thanks for your help

Hi Ho,

When I test with your video it’s all right, but when I test it with my video (640×480, data rate: 526 kbps, frame rate: 5 frames/second), it shows that error.

Hi, I tried some more videos and I’m really confused. Below is a link to two of my videos: one runs normally and the other does not.

https://app.box.com/s/q0g7asppaeoix7f6q9u74vufqr4hquiy

Can you tell me what the problem is?

Either resize your video so it is smaller or play around with

goodFeaturesToTrack(prev_grey, prev_corner, 200, 0.01, 30);

Maybe increase 200 to 1000. These parameters were tested only on my video; maybe there aren’t enough features between frames.

I’ve already solved that problem; there were issues at the end of the recorded video. Now I have a new problem. In the second code, Chen Jia added some code to save the video using VideoWriter, but as far as I know, if we never pass any frames to its write function, the video cannot be saved, right? When I run the second code and open the saved video, it says “cannot render this file”.

Hi, I added this call: outputVideo.write(canvas);

after these lines:

    if(canvas.cols > 1920) {
        resize(canvas, canvas, Size(canvas.cols/2, canvas.rows/2));
    }

and now it saves the video for comparison.

Many thanks. But I assume that if the vibration frequency is quite high, this algorithm is not very effective, right? Can you give me some advice on stabilizing a camera at high frequency?

There shouldn’t be any problems with high frequency other than motion blur messing up the image.

My assumption is that at high frequency my webcam’s images are blurred, and since the algorithm is based on finding distinctive features, it cannot stabilize effectively. In this situation, can you recommend some algorithms to solve my problem?

Thanks for your support very much.

From my experience goodFeaturesToTrack + optical flow works with some motion blur, which I found surprising. But with more extreme blurs I don’t know of a good way to solve it.

Hi phivu, how did you solve your problem? I have translated this code into Python, but my video doesn’t change; it looks like the same video.

Hi Ho,

I understand your code now. But I would like to ask about the code and software you used to plot the acceleration for dx and dy.

Thank you very much for your support

I use gnuplot

And what is your algorithm or code to calculate the acceleration between two frames? Thank you very much for your help.

It uses the OpenCV function estimateRigidTransform. I don’t know how they implement it.

I understand it now and have implemented it in Matlab. Thanks very much for your help.

Hello,

Could I find out how much the camera has tilted between frames? I am trying to get the pixel change of detected targets, and for this I have to add the number of tilted pixels to the detected objects’ pixels. To do that I have to know how much it tilts. Any thoughts on how to achieve this?

Note: I am doing on live stream, not a recorded video.

You can take snippets from the code. The bit that calculates dx/dy from estimateRigidTransform is what you want.

Hey!

Actually, I wanted to use your code to pre-process my data, which is a set of shaky videos. The code runs completely fine, but I want to save the stabilized video so that I can do further work on it. Since all my work is in Python, I am not familiar with how to change your code to save the stabilized video. Can you please tell me how?

Thank you

Around line 250 in main.cpp there’s some code that does the saving. You can uncomment it.

Thanks for the reply! I uncommented those lines but I can’t see where the images are being written. Is it some kind of error, or am I doing something wrong? The code ran successfully, so it can’t be an error. Also, I’d prefer to get the saved file as a video rather than as frame images. If possible, can you help me with the code for that?

Thank you

You need to create a subdirectory called “images”. I haven’t had much success using OpenCV to generate video files. That’s why I output images and use some other software to convert them into a video file.

Thanks! It worked. I am planning to do the same thing now (convert the frames to a video). Really appreciate your help.

Great job !

Is there a way to write the processed video to an AVI file and restore the original soundtrack? I succeeded in writing images using “imwrite(str, cur2);” but I lost the synchronization with the sound.

Very good job anyway,

Joris

Not that I know of. OpenCV has very limited video support, let alone sound.

Hi Nghia,

Have any thoughts on how one might try to remove motion completely, rather than smoothing the motion? Obviously the virtual view may wander completely off the frame but this is acceptable in my case. Any thoughts would be greatly appreciated.

Cheers,

Chris

That is only applicable if your camera is static, e.g. on a tripod. All my test videos involve real camera motion that makes no sense to eliminate.
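For the static-camera case, one way to express “remove motion completely” with the post’s own Step 4 formula is to replace smoothed_trajectory by a constant zero, giving new_transformation = transformation - trajectory. The sketch below does that for dx, dy and da; Motion and lockToStart are hypothetical names, and it assumes the same per-parameter accumulation as Step 2. Each result equals minus the motion accumulated over all earlier transformations, so every frame is pulled back towards the position of the first one.

```cpp
#include <cassert>
#include <vector>

struct Motion { double dx, dy, da; };  // frame-to-frame motion (hypothetical type)

// Hypothetical helper: Step 4 with the smoothed trajectory held at zero,
// i.e. new_transformation = transformation + (0 - trajectory).
std::vector<Motion> lockToStart(const std::vector<Motion>& motions) {
    std::vector<Motion> out;
    double x = 0.0, y = 0.0, a = 0.0;  // running trajectory (Step 2)
    for (const Motion& m : motions) {
        x += m.dx;
        y += m.dy;
        a += m.da;
        out.push_back({m.dx - x, m.dy - y, m.da - a});
    }
    assert(out.size() == motions.size());
    return out;
}
```

Any drift in the estimated motion accumulates without bound here, which is part of why this only suits a camera that really is fixed.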

Hi! I’m just curious. The smoothed trajectory always accumulates pairwise transformations. How does the stabilized image recover to the origin when the camera moves in an arbitrary direction and then becomes fixed?

This will work as expected because it’s only smoothing in a small window. When the camera stops moving the stabilization won’t apply any correction.

I just don’t get the main steps of this approach… Each time, we calculate a pairwise affine transformation and then accumulate it with the previous transforms. If T_i is the affine transformation between frames i-1 and i, then the cumulative transform will be:

C_i = T_i * T_{i-1} * T_{i-2} * … * T_1

Then we smooth C_i with an exponential or Kalman filter (or any other filter). The resulting smoothed transformation C*_i is then used to compensate for the motion. Am I right?

That’s correct. The graph tries to explain this process.
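The formula above composes full matrices. If the trajectory is instead accumulated per parameter, as Step 2 describes (x, y and angle), the two agree exactly for pure translations and for the angle, but not for the translation part once rotation is present: each new rotation also rotates the earlier translations. A sketch with 3x3 homogeneous matrices built from std::array; rigid, multiply and accumulateMatrices are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Hypothetical helpers. Homogeneous matrix of a rotation by da followed by a shift (dx, dy).
Mat3 rigid(double dx, double dy, double da) {
    const double c = std::cos(da), s = std::sin(da);
    return Mat3{{{c, -s, dx}, {s, c, dy}, {0.0, 0.0, 1.0}}};
}

Mat3 multiply(const Mat3& A, const Mat3& B) {
    Mat3 C{};  // zero-initialised
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            for (int k = 0; k < 3; ++k)
                C[r][c] += A[r][k] * B[k][c];
    return C;
}

// C_i = T_i * T_{i-1} * ... * T_1, where Ts[0] is T_1 (the oldest transform).
Mat3 accumulateMatrices(const std::vector<Mat3>& Ts) {
    Mat3 C = rigid(0.0, 0.0, 0.0);  // identity
    for (const Mat3& T : Ts) {
        assert(T[2][2] == 1.0);     // expects homogeneous affine matrices
        C = multiply(T, C);         // newest transform multiplies from the left
    }
    return C;
}
```

For example, a shift of (1, 0) followed by a 90 degree rotation composes to a translation of (0, 1), while summing the parameters would give (1, 0). For the small rotations typical of hand-held shake the difference is usually small.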

This compiled and ran first try on my Mac. Sweet!

I did get one compiler warning that could indicate a bug…

videostab.cpp:159:20: warning: comparison of unsigned expression >= 0 is always true [-Wtautological-compare]

if(i+j >= 0 && i+j < trajectory.size()) {

~~~ ^ ~

Thanks for sharing your code 🙂

The warning is fine. It’s just that trajectory.size() returns unsigned, while i and j are signed. No big deal in this case.

The issue is that in the expression i+j, j gets converted to unsigned, so i+j is always >= 0. For example, when i=0 and j=-3, i+j is SIZE_MAX-2 (the largest size_t value minus 2) rather than -3. So the check for i+j >= 0 isn’t actually doing anything; it always succeeds. Because the wrapped-around value is still rejected by i+j < trajectory.size(), the first check could simply be removed.

Good point.
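The conversion can be checked directly. In the sketch below (hypothetical helper names), mixedSum reproduces the size_t + int addition from the smoothing loop, and inWindow does the same bounds check in signed arithmetic, which makes the lower-bound test meaningful again. A wrapped value such as SIZE_MAX - 2 already fails the upper-bound test, which is why the original loop still behaves correctly.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>

// Hypothetical helper: same expression as `i+j` in the smoothing loop.
// j is converted to size_t, so i = 0, j = -3 wraps to SIZE_MAX - 2.
std::size_t mixedSum(std::size_t i, int j) {
    return i + j;
}

// Hypothetical helper: bounds check in signed arithmetic, so a negative
// index really is negative.
bool inWindow(std::size_t i, int j, std::size_t size) {
    assert(size <= static_cast<std::size_t>(std::numeric_limits<long long>::max()));
    const long long k = static_cast<long long>(i) + j;
    return k >= 0 && k < static_cast<long long>(size);
}
```

Using a signed loop index for the window (as in inWindow) also silences the -Wtautological-compare warning quoted above.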

1. Why is the resulting video better with your method than with the Kalman filter method? I’m a little confused.

2. Why can’t either of your two methods deal with high-frequency jitter? The result is a disaster. Which part can I change to get a better result? I set a smaller SMOOTHING_RADIUS, but it doesn’t seem any better.

1. The Kalman filter version has tuning parameters that you need to play with. It’s meant for online applications.

2. You want a larger SMOOTHING_RADIUS.
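For reference, a generic scalar Kalman filter shows what such tuning parameters typically look like. This is not the code in videostabKalman.cpp: it is a sketch assuming a random-walk model for one trajectory component, with process noise variance q and measurement noise variance r, and the class name ScalarKalman is hypothetical. Its output could take the place of smoothed_trajectory in the Step 4 formula; a larger r relative to q gives smoother but laggier output.

```cpp
#include <cassert>

// Hypothetical scalar Kalman filter for ONE trajectory component, assuming a
// random-walk model: the true value drifts by noise of variance q per frame,
// and each measured trajectory value carries noise of variance r.
class ScalarKalman {
public:
    ScalarKalman(double q, double r) : q_(q), r_(r) {
        assert(q > 0.0 && r > 0.0);
    }

    // Feed the measured trajectory value of the newest frame; returns the estimate.
    double update(double z) {
        if (!initialised_) {              // start at the first measurement
            x_ = z;
            p_ = r_;
            initialised_ = true;
            return x_;
        }
        p_ += q_;                         // predict: estimate unchanged, uncertainty grows
        const double k = p_ / (p_ + r_);  // Kalman gain in (0, 1)
        x_ += k * (z - x_);               // correct towards the measurement
        p_ *= 1.0 - k;                    // uncertainty shrinks after the update
        return x_;
    }

private:
    double q_, r_;
    double x_ = 0.0;
    double p_ = 0.0;
    bool initialised_ = false;
};
```

Like the causal average, this only uses past and current frames, which is what makes it usable on a live stream.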

I tried to run your code but the video is not playing. The .exe window appears and disappears, and no error is shown. What is the mistake?

Run it in the command prompt. You’ll see the error message this way.

Hi,

i’m trying to understand your code http://nghiaho.com/uploads/videostabKalman.cpp

I can use a webcam for live stabilization and it works very well, but the output is a video with black borders. I want to crop the image so that I get a clean stabilized video without black borders. I read that I can use warpPerspective, but how can I get the corners from your code? warpPerspective needs a 3×3 matrix, but warpAffine uses a 2×3 one. Could you suggest a solution?

Thanks

I don’t know what method you are trying to apply, but I’m pretty sure warpAffine will suffice.

Hi, I think your code is fantastic. However, when I input a video recorded in portrait mode, the output video is in landscape mode. How could the code be changed to preserve the input’s orientation? Thank you!

You’ll have to manually insert code to rotate the image just before saving them out.

Cool, I got it to work. Could I use your code (slightly modified) for a non-profit open-source university project? How should I cite you?

Sure go for it. I guess you can cite me by name and website. What project is this for?

Hey!!!

It seems some people tried to use your code on live streams? We need something like that, can we try it?

Sure go for it.

I tried writing the same program in MATLAB but I am not able to find the appropriate functions for every function used in your code. Can you please provide the MATLAB implementation of the same code if possible?

Sorry no can do. I don’t use Matlab for computer vision so can’t help you there.

Hi,

I would like to know why part of the stabilized video on the right side is missing. Is it caused by numerical overflow or some other problem?

It’s missing pixel information. When you stabilize, you shift the image around, and that shift can expose areas for which there are no pixels in the original image.

I am not familiar with C++. From what I understand of the code, in the first step optical flow is computed for all the frames of the video, based on the strong corners detected in each image. Of all the corners detected, the bad matches are weeded out. From the remaining matches, the image trajectory is found for all the frames of the video, and it is then smoothed using a sliding average window in the third step. After this, the new transformations are applied to the video. Kindly correct me if I am wrong at any point, or if you want to add anything. Thank you.

Yep that’s correct.

Nice! It looks like you lose a bit of sharpness in the stabilized version, but I assume with higher res input you can reduce that a bit.

It works with your videos, but somehow I cannot find a video format/codec for other movies that works. The program crashes. What’s the secret?

For instance a youtube .mp4 vid downloaded with youtube downloader (dvdvideosoft.com) crashes.

What’s the error?

Running on Win7 i7, 16 GB, 64 bit, VS2015, openCV 2.4.13.0

warning: Error opening file(../../modules/highgui/src/cap_ffmpeg_imp.hpp:537)

Assertion failed: cap.isOpened(), file d:..(path)..\videostab.cpp, line 79

Line 79 contains the assert(cap.isOpened()); statement.

Until now I have not worked with video in OpenCV. But the fact that your example videos work suggests that it is configured properly for that type. I have been experimenting with OpenCV and Python, not C++.

Thanks for supporting a post that is now 2 years old.

Tried again, I get OpenCV Error: Bad flag (parameter or structure field) (Unrecognized or unsupported array type) in cvGetMat, file C:\builds\2_4_PackSlave-win64-vc12-shared\opencv\modules\core\src\array.cpp, line 2482

2.4 is pretty old now, I’d try OpenCV 3.x.

The functions calcOpticalFlowPyrLK() and the function estimateRigidTransform() can throw an exception that needs to be caught and handled correctly.

Furthermore, the matrix last_T is uninitialized if, when processing the second frame, the first frame does not contain any good features to track. This would happen if the video starts with a uniformly colored picture, such as a fade-in from black.

Hi Nghia Ho.

Thanks for a very good and educational example of image stabilization. The live (and the non-live) example has a few shortcomings when tracking good features, which can cause the program to exit.

Here is a new version of the code for the live version fixing this.

https://dl.dropboxusercontent.com/u/5573813/opencvtest.cpp

The changes are marked with ‘PMM’. Feel free to use it and post it.

Modifications:

1. T is declared twice (not a problem, but still).

2. last_T needs initialization in case the first frame has no good features to track.

3. calcOpticalFlowPyrLK() can throw an exception. It needs catching and handling.

4. estimateRigidTransform() can throw an exception. It needs catching and handling.

I am a bit uncertain whether the use of prev_corner is still correct in cases where exceptions are thrown.

You can try the old code and the new code with this clip:

https://dl.dropboxusercontent.com/u/5573813/LES_TDS_launch.mp4

This will make the old code exit/die. The new code can handle it.

You are welcome to download and use the video clip on your website as an example. If you try the clip, you will see that the stabilizer has a hard time stabilizing the horizon, which surprised me.

Best regards

Peter Maersk-Moller

This works perfectly, but I found a problem with this line:

cur2 = cur2(Range(vert_border, cur2.rows-vert_border), Range(HORIZONTAL_BORDER_CROP, cur2.cols-HORIZONTAL_BORDER_CROP));

The output cur2 is misaligned. When I remove that line, the video shows perfectly.

Hmm what OpenCV are you using? Wonder if they changed some underlying behaviour.

It's OpenCV 3.1.

Hello, the code link is dead:

https://dl.dropboxusercontent.com/u/5573813/opencvtest.cpp

Could you upload a new one?

Thank you,

zhangtian

Hi, thanks for your post and contribution.

It was a great help in my studying OpenCV.

I tried your code.

It works well for the hippo video.

But not so well for the Vietnam road video.

Furthermore, it seems unsuitable for videos that move around an object.

I think it is because of the feature tracking.

If we use SURF or SIFT, the result will be more robust, but much more time-consuming.

Looking forward to hearing back from you about the solution.

Kind Regards.

ZhangYun

The Vietnam road looked fine to me. The technique assumes most of your features are on the background and not on a foreground object.

Very nice code, thanks for this! I am new to the image stabilization field, and one thing I am trying to understand is how to quantify or qualify the degree of stabilization once it's done. Is there a standard metric? To clarify further, I would like to know whether I can take a sample video, destabilize it somehow, and see how much I can recover with respect to the original sample. Is that even doable? Any help will be greatly appreciated!

As a first try you can run some basic stats on the optical flow from frame to frame to get some metric for how shaky the video is.
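One way to turn "basic stats on the optical flow" into a number is sketched below. It takes the standard deviation of each frame-to-frame motion component (dx, dy, da), for example as written out by the step 1 code. Choosing the standard deviation is an assumption, made so that a smooth pan (large but steady dx) still scores as steady.

```python
import math


def shakiness(transforms):
    """Standard deviation of each frame-to-frame motion component.

    transforms: list of (dx, dy, da). A smooth pan has a steady dx, so its
    standard deviation stays low even though the mean motion is large.
    """
    if not transforms:
        raise ValueError("need at least one transform")
    n = len(transforms)
    stats = {}
    for name, idx in (("dx", 0), ("dy", 1), ("da", 2)):
        vals = [t[idx] for t in transforms]
        mean = sum(vals) / n
        stats[name] = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
    return stats
```

For the synthetic test described in the question, one option is to add known random offsets to a steady clip. You could then compare these numbers for the original, the shaken, and the stabilized versions.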

Hi, it works great, but we're getting black borders. How can we crop them or fill the area?

Cropping is easy, just change HORIZONTAL_BORDER_CROP. Filling in area is harder.
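The crop quoted earlier in this thread uses Range(vert_border, rows - vert_border) and Range(HORIZONTAL_BORDER_CROP, cols - HORIZONTAL_BORDER_CROP). Below is a sketch of that crop rectangle. It assumes the vertical border is scaled by height / width so the aspect ratio is preserved. `crop_box` and `crop` are hypothetical names. After cropping, you would typically resize back to the original size (for example with cv2.resize) so the output keeps its dimensions.

```python
def crop_box(width, height, horizontal_crop):
    """Return (top, bottom, left, right) bounds for a centered crop.

    Assumption: the vertical border is scaled by height / width so the cropped
    frame keeps the original aspect ratio.
    """
    vert_border = horizontal_crop * height // width
    return vert_border, height - vert_border, horizontal_crop, width - horizontal_crop


def crop(frame, horizontal_crop):
    """frame: list of pixel rows (a NumPy image would use frame[top:bottom, left:right])."""
    height, width = len(frame), len(frame[0])
    top, bottom, left, right = crop_box(width, height, horizontal_crop)
    return [row[left:right] for row in frame[top:bottom]]
```

A larger crop hides more of the black border but zooms in more after resizing, so the value is a trade-off per video.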

Hi, could you tell me about your environment?

I can't compile the code under OpenCV 3.0 + MSVS2013.

It just returns 0, even though I commented out almost everything and left only:

```cpp
VideoCapture cap(0);
if (!cap.isOpened())
    cout << "ERROR" << endl;
```

Thank you !

Oh, I tried again. It works.

But it throws an exception at

"calcOpticalFlowPyrLK(prev_grey, cur_grey, prev_corner, cur_corner, status, err);"

Still looking for the reason…

I found the reason.

I had to compile in Release mode.

But for some unknown reason, the stabilized result turned out less stable than the original…

At least one of the parameters passed is probably bad. Check to make sure the images passed in are valid. Check to make sure prev_corner is not empty.

Hi, thank you so much for your article.

I’ve tried it, I have no error in my code.

But when I run it, the command prompt disappears and there is no result.

Could you help me?

Thank you

Can you run the exe from the command prompt and not the IDE and see what it outputs.

Yes, I can, but it only stays for about 1 second, then it disappears.

Hi, I got it working. I just disabled this part:

```cpp
if(argc < 2) {
    cout << "./VideoStab [video.avi]" << endl;
    cin.get();
    return 0;
}
```

and put my video here:

```cpp
VideoCapture cap("video.avi");
```

Thank you so much for your great code 😀

Hi, Ayuchan! I encountered the same problem as you. But when I used your method, I got this error: 'error C2374: 'cap' : redefinition; multiple initialization'. Could you show me your code or give me some advice? This is my email: 2452309811@qq.com. Thank you!

Hi, I put this in the code:

```cpp
cout << "./VideoStab [video.avi]" << endl;
cin.get();
```

It keeps the command prompt open until I type the video name, but after that there is still no result.

Hi, thanks for all the work you've done and for the many answers you've posted. I recently installed OpenCV 3.1.0 on my Win7 Pro laptop (the version containing the \x64\vc14 folder). I ran the OpenCV tutorial code fine, so I know my PC and VSE setup is good.

Should I expect your videostab.cpp code (which I prefixed with “OpenCV_”) to run fine when compiled in x64 Debug mode from my Visual Studio Express 2015 environment? I am not using full-blown VS like most others, it appears.

When I execute the program from command line (i.e. “OpenCV_videostab hippo.mp4”) I immediately get a crash and a Windows message that the program has stopped working (and it wants to send information to MS).

The only thing in the OpenCV_videostab.log file is:

OpenCV_videostab.cpp

c:\users\jp\documents\visual studio 2015\projects\opencv_videostab\opencv_videostab.cpp(91): warning C4244: ‘initializing’: conversion from ‘double’ to ‘int’, possible loss of data

OpenCV_videostab.vcxproj -> C:\Users\JP\Documents\Visual Studio 2015\Projects\OpenCV_videostab\x64\Debug\OpenCV_videostab.exe

and this line 91 warning is mostly harmless, I presume. If this is expected to run using VSE, how can I get more information on the crash, and even better do you know what the cause could be?

P.S. The only thing I had to modify in your original code was to add

#include "stdafx.h"

as prompted by VSE.

It *should* work with any Visual Studio. Try stepping through the code in the debugger or add lots of print out to see where it crashes. It might have crashed as early as loading the video.

I just reviewed all the posts again and saw Emma’s about having to build the code in Release mode, so I tried that with my VSE and now it runs! If somebody wants to explain why Debug mode fails I would appreciate it, otherwise it appears my problem is solved.

Hi! I am working with thermal footage. Unfortunately, to keep the thermal information (each pixel holds a temperature value rather than a 0-255 grayscale or color value), I have to work with individual frames instead of a video file. Would it be possible to run your code on a set of frames directly?

Thanks a lot in advance!

If you can make your thermal video look like a normal image then you have a chance.
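One way to make a thermal frame "look like a normal image" is to rescale the temperatures to 8 bits for tracking only. You would estimate the transforms on the scaled copy and apply them to the raw frames. The sketch below assumes single-channel NumPy arrays, which can come from individual frame files rather than a video. Rescaling matters because a plain conversion to 8 bits without scaling saturates most 16-bit values and leaves little texture to track. The function name is hypothetical.

```python
import numpy as np


def to_uint8_for_tracking(frame, lo=None, hi=None):
    """Map a single-channel frame (e.g. 16-bit or float temperatures) to uint8.

    Pass a fixed (lo, hi) range for the whole clip so the mapping does not change
    from frame to frame; otherwise the frame's own min and max are used.
    """
    f = np.asarray(frame, dtype=np.float64)
    lo = f.min() if lo is None else lo
    hi = f.max() if hi is None else hi
    if hi <= lo:  # flat frame: nothing to track
        return np.zeros(f.shape, dtype=np.uint8)
    scaled = (f - lo) * (255.0 / (hi - lo))
    return np.clip(np.round(scaled), 0, 255).astype(np.uint8)
```

A fixed range is usually preferable for video. Per-frame min/max scaling makes the brightness of the same scene jump between frames, and Lucas-Kanade optical flow assumes brightness stays roughly constant.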

Thanks a lot for your code!!

I just have a small doubt. It might look trivial, but please help clarify it, as it is really bothering me.

When we transform the previous frame with the smoothed trajectory, the frame we obtain should be the corrected version of the current frame. But when we display the original and stabilized versions, it shows the previous frame and the transformed previous frame.

Shouldn't it be the current frame and the transformed previous frame? Please help!

Hmm it’s been a while since I looked at that code. There could be an off by 1 frame somewhere. Show me your code fix so I can see what you mean.

Do you have the links to the original shaky videos?

Look through the comments, search for nghiaho.com.

How can I run this code from a Linux terminal?

Typically g++ videostab.cpp -o videostab -lopencv_core -lopencv_highgui etc. The libraries to link depend on which OpenCV version you installed, because the library naming changed in newer versions.

Hello, my input video has a frame rate of 10 frames/second. Which parameters should I change so that the program stabilizes it normally? Thanks in advance.

There’s no explicit parameter for frame rate. The smoothing is controlled via const int SMOOTHING_RADIUS = 30.
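SMOOTHING_RADIUS is counted in frames. The same radius therefore spans 3 seconds at 10 fps but only 1 second at 30 fps. Suppose 30 was chosen for footage at roughly 30 fps; that is an assumption, so check the frame rate of the video you tuned on. Then a sketch for keeping the window the same length in seconds is:

```python
def scale_radius(reference_radius, reference_fps, target_fps):
    """Smoothing radius (in frames) spanning the same time at a new frame rate."""
    if reference_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    return max(1, round(reference_radius * target_fps / reference_fps))
```

For example, scale_radius(30, 30, 10) gives 10, so SMOOTHING_RADIUS = 10 would give 10 fps footage about the same 1-second half-window.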

Hi, I have been trying to debug your code in Visual studio but it just doesn’t show any output. It says the program has exited with code 0. I am using OpenCV 3.1 in Windows 10. I am using 64 bit debugger in Visual studio.

I tried to find where the code stops working by adding cout statements, and found that it runs fine until the end of step 1, i.e. the 'Frame: good optical flow:' output.

I’d appreciate if you could help me in this matter.

Thank you

Try using this video http://nghiaho.com/uploads/hippo.mp4 to see if it bombs out.

No, it doesn’t work.

1. Try compiling in Release mode

2. Try stepping using Visual Studio debugger

Thanks a lot

Your code works great.

Could you please suggest something for stabilizing thermal videos? I have applied the basic approaches for optical videos, but since thermal videos yield only about half as many features, I have had no success. I would be highly obliged if you could guide me in this regard.

Play around with this line

goodFeaturesToTrack(prev_grey, prev_corner, 200, 0.01, 30);

Increase 200 and shrink 30.

More generally, objects that pass through the scene and leave it, such as people, birds, or ants, pollute the good features to track.

Maybe raising the minDistance parameter, or something else, would improve the quality of the features?

```python
#!/usr/bin/env python3
import sys

import cv2
import numpy as np
import pandas as pd

SMOOTHING_RADIUS = 30  # frames on each side of the current frame

if len(sys.argv) < 2:
    print("Usage: vs.py [input file]")
    sys.exit(1)

fin = sys.argv[1]
cap = cv2.VideoCapture(fin)
N = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
fps = cap.get(cv2.CAP_PROP_FPS)

ok, prev = cap.read()
if not ok:
    sys.exit("Could not read " + fin)
prev_gray = cv2.cvtColor(prev, cv2.COLOR_BGR2GRAY)
(h, w) = prev.shape[:2]

# Step 1: previous -> current transform (dx, dy, da) for every frame pair
last_T = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # identity until a good estimate exists
prev_to_cur_transform = []

for k in range(N - 1):
    ok, cur = cap.read()
    if not ok:
        break
    cur_gray = cv2.cvtColor(cur, cv2.COLOR_BGR2GRAY)

    T = None
    prev_corner = cv2.goodFeaturesToTrack(prev_gray, maxCorners=200, qualityLevel=0.01,
                                          minDistance=30.0, blockSize=3)
    if prev_corner is not None:  # None when no corners are found
        cur_corner, status, err = cv2.calcOpticalFlowPyrLK(prev_gray, cur_gray, prev_corner, None)
        good = status.ravel() == 1  # keep only successfully tracked points
        if np.count_nonzero(good) >= 2:
            # estimateRigidTransform() was removed in OpenCV 4; estimateAffinePartial2D()
            # fits the same rotation + translation + uniform scale model
            T, _ = cv2.estimateAffinePartial2D(prev_corner[good], cur_corner[good])
    if T is None:
        T = last_T  # tracking failed: reuse the last good transform
    last_T = T.copy()

    dx = T[0, 2]
    dy = T[1, 2]
    da = np.arctan2(T[1, 0], T[0, 0])
    prev_to_cur_transform.append([dx, dy, da])

    prev_gray = cur_gray

# Steps 2 and 3: accumulate into a trajectory, then smooth with a centered window
prev_to_cur_transform = np.array(prev_to_cur_transform)
trajectory = pd.DataFrame(np.cumsum(prev_to_cur_transform, axis=0))
smoothed_trajectory = trajectory.rolling(window=2 * SMOOTHING_RADIUS + 1,
                                         center=True, min_periods=1).mean()
smoothed_trajectory.to_csv("smoothed.csv")

# Step 4: new_transformation = transformation + (smoothed_trajectory - trajectory)
new_prev_to_cur_transform = prev_to_cur_transform + (smoothed_trajectory - trajectory)
new_prev_to_cur_transform.to_csv("new_prev_to_cur_transformation_2.txt")
new_prev_to_cur_transform = np.array(new_prev_to_cur_transform)

# Step 5: apply the new transforms and write the output video
cap = cv2.VideoCapture(fin)
out = cv2.VideoWriter("out.avi", cv2.VideoWriter_fourcc(*"PIM1"), fps, (w, h), True)
T = np.zeros((2, 3))

for k in range(len(new_prev_to_cur_transform)):
    ok, cur = cap.read()
    if not ok:
        break
    dx, dy, da = new_prev_to_cur_transform[k]
    T[0, 0] = np.cos(da)
    T[0, 1] = -np.sin(da)
    T[1, 0] = np.sin(da)
    T[1, 1] = np.cos(da)
    T[0, 2] = dx
    T[1, 2] = dy
    out.write(cv2.warpAffine(cur, T, (w, h)))

cap.release()
out.release()
```

Hi!

In this line: cv::calcOpticalFlowPyrLK(prev_grey, cur_grey, prev_corner, cur_corner, status, err);

all elements of status are 0, meaning no match was found, even though the grey images look fine. I had to convert them to CV_8U:

cur_grey.convertTo(cur_grey, CV_8U);

Otherwise the program crashes with an assertion failure.

Any ideas?

Maybe you’re loading non 8 bit images?

Hello. Please help me with a problem: I need to determine whether a person in a video is breathing. I want to plot the motion of points by comparing consecutive frames to get a curve. Can I somehow use your code? If I plot your trajectory on a graph, could it be used to assess the person's breathing?

No. There was an academic project that did exactly this a few years ago; I can't remember the authors, though.

Thanks, it helped a lot in my project …! you are awesome 🙂

Dear Nghia Ho!

Thank you for sharing! It works! However, I found that if the input is a stable video, the output is an unstable video.

Is there a problem here? Thanks again!

The parameters might need tweaking; they were tuned for the video size I used.

thanks a lot!

Hi! Can you share the gnuplot scripts you used for the plots?

It’s been so long and I no longer have the original script on my HD. It’s pretty trivial to plot the files that get outputted from my code.

Hi… I am new to OpenCV. I installed OpenCV on Windows 10 with Code::Blocks and ran your programs, but compiling gives me many errors, e.g. line 174: 'thread' in namespace 'std' does not name a type. Do I need to run it on Linux by installing OpenCV there? Also, do I need to install VSE to run it in Code::Blocks?

hi, first of all thank you for sharing this code 🙂

I have one small design question:

Why define two different structures with the same layout?

I mean, both TransformParam and Trajectory have three doubles and exactly the same constructors.

I would have defined them as

typedef struct { … } Trajectory, TransformParam;

Most likely I did it that way for clarity, to make it obvious to other people reading the code.

I see, thank you for your prompt answer.

In my code I ended up with this namespace:
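The single-type design under discussion can also be sketched in Python. One value type serves both as a per-frame transform and as a trajectory point, and element-wise arithmetic lets step 4 read like its formula. The class name Motion and the helper new_transform are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Motion:
    """Used both as a per-frame transform and as an accumulated trajectory point."""
    x: float = 0.0  # horizontal translation
    y: float = 0.0  # vertical translation
    a: float = 0.0  # rotation angle in radians

    def __add__(self, other):
        return Motion(self.x + other.x, self.y + other.y, self.a + other.a)

    def __sub__(self, other):
        return Motion(self.x - other.x, self.y - other.y, self.a - other.a)

    def __truediv__(self, k):
        return Motion(self.x / k, self.y / k, self.a / k)


def new_transform(transform, trajectory, smoothed):
    """Step 4: new_transformation = transformation + (smoothed_trajectory - trajectory)."""
    return transform + (smoothed - trajectory)
```

With these operators, a window average is simply sum(window, Motion()) / len(window). Separate named types would only add clarity at the call sites, which is the trade-off mentioned in the reply.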

```cpp
namespace vst {

////////////////////////////////////////////////////////////////////////

const size_t SMOOTH_FRAMES(2);

////////////////////////////////////////////////////////////////////////

typedef struct TRANSFORM {
    TRANSFORM();
    TRANSFORM(const double x, const double y, const double a);
    TRANSFORM(const TRANSFORM& source);

    TRANSFORM& operator =(const TRANSFORM& source);
    TRANSFORM& operator +=(const TRANSFORM& source);
    TRANSFORM& operator -=(const TRANSFORM& source);
    bool operator ==(const TRANSFORM& source) const;

    bool isId() const;
    void dump(std::ostream& file) const;

    double x; // horizontal translation //
    double y; // vertical translation //
    double a; // anti-clockwise rotation //
} TRANSFORM, TRAJECTORY;

////////////////////////////////////////////////////////////////////////

TRANSFORM operator +(const TRANSFORM& left, const TRANSFORM& right);
TRANSFORM operator -(const TRANSFORM& left, const TRANSFORM& right);
TRANSFORM operator /(const TRANSFORM& left, const double right);
TRANSFORM operator *(const TRANSFORM& left, const double right);
TRANSFORM operator *(const double left, const TRANSFORM& right);

////////////////////////////////////////////////////////////////////////

typedef std::vector STABILIZATION;

////////////////////////////////////////////////////////////////////////

void dump(std::ostream& file, const STABILIZATION& list);
void save(const std::string path, const STABILIZATION& list);
void load(const std::string path, STABILIZATION& list);
void vst(const std::string path, STABILIZATION& list, const bool autoLoad = true);

////////////////////////////////////////////////////////////////////////

} // namespace vst //
```

hi Nghia,

I have tried out the optical flow source code to detect jitter in a video, but I don't think it managed to detect the jitter. The sample video file I'm using is from YouTube: https://www.youtube.com/watch?v=lOygQ3qUHXc.

Is Optical Flow the right way to detect jitter or there are other functions that should be used?

By the way, I have already gotten this to work by subtracting T and T_last in the code. Thanks!
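The "subtract T and T_last" idea can be sketched as flagging frames where the frame-to-frame translation changes abruptly. This works on the (dx, dy, da) values rather than the raw matrices. The threshold is in pixels and is an assumed per-video tuning value. The function name is hypothetical.

```python
import math


def jitter_frames(transforms, threshold):
    """Indices k where the translation changes abruptly between pairs k-1 and k.

    transforms: list of (dx, dy, da); only the translation change is measured.
    threshold: pixels, to be tuned per video.
    """
    flagged = []
    for k in range(1, len(transforms)):
        ddx = transforms[k][0] - transforms[k - 1][0]
        ddy = transforms[k][1] - transforms[k - 1][1]
        if math.hypot(ddx, ddy) > threshold:
            flagged.append(k)
    return flagged
```

A single spike shows up twice (once going in, once coming back), as in the test below. With strong vibration and rolling shutter, as in this clip, the per-frame estimates themselves may be unreliable.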

This one is hard because of significant mechanical vibration + rolling shutter.

Hi ! Thank you for your great work !

Thanks to you and the author of the live adapted program I was able to stabilize my live videos from a server. I had to translate the code in python though. 😉

Hi, would you share the Python code?

Can we use this program with mp4 as well as avi?

You can use whatever video format OpenCV supports.

Thanks for your great work. I was able to get a stable video as expected. Could you explain why my plots of the x and y trajectories head toward negative pixel values as the frame number increases?

Look at the way the camera moves in the video, you should see a correlation.

Hey!

First of all, thanks a lot for your code and explanation, which are very clear.

In the "live version", I just removed the parameters from main (argc, argv) and changed the video capture to VideoCapture cap(0);, which means my input video is the webcam.

Now I have a very important question:

Is there any way to control or change the FPS parameter?

I just want a smoother picture, because it's not very smooth.

Thanks a LOT! 🙂

Processing not fast enough to keep up with the video?

It's OK, but not enough.

Maybe it depends on the screen/graphics card?

My laptop uses the default one on the motherboard (I guess it's Intel HD Graphics).

But apart from that, is there no way to control the FPS? 🙂

The code is purely CPU-based and not optimized for speed in any way, so I would not expect real-time performance without some work.

Can you explain, please?

I'm not sure I fully understood it.

And another question: what is the purpose of the live version? It's not actually real time, so what does "live" mean?

Thanks 🙂

It’s not optimized code, so it will run slow. Just a proof of concept. I didn’t write the live version. It has the potential to run in real-time if you try hard enough.

Python version of code:

https://github.com/mrgloom/Video-Stabilization-Example

There is another version of python code with reference to this article:

https://github.com/AdamSpannbauer/python_video_stab

Thank you!

I think we need a second part explaining the Kalman filter.
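The live version is not explained here, so what follows is one possible direction rather than what that code does. When frames arrive one at a time, a causal filter that uses only past frames can take the place of the centered moving average. Below is a minimal scalar Kalman filter with a constant-position model, applied to one trajectory component (x, y, or angle) at a time. The noise values q and r are assumed tuning parameters.

```python
def kalman_smooth(measurements, q=4e-3, r=0.25):
    """Causal 1-D Kalman filter with a constant-position model.

    Each output uses only the current and earlier samples, so it can run live.
    q (process noise) and r (measurement noise) are assumed tuning values.
    """
    if not measurements:
        return []
    estimates = []
    x, p = measurements[0], 1.0  # initial state and its variance
    for z in measurements:
        p = p + q            # predict: position unchanged, uncertainty grows
        k = p / (p + r)      # Kalman gain, between 0 and 1
        x = x + k * (z - x)  # correct toward the new measurement
        p = (1 - k) * p
        estimates.append(x)
    return estimates
```

A smaller q (or a larger r) gives smoother but more lagging output. Unlike the centered window, this filter cannot look ahead, so it always trails real camera motion somewhat.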

Hey,

First, thank you for your code 🙂 Second, it works quite well for normal videos. Third, the problem: the algorithm doesn't work for this kind of video: a camera mounted directly behind the windshield while the car drives on a highway or elsewhere at high speed. Is that a common problem? Do you know a way to solve it? I've copied your algorithm and tried it on such a video. Result: it gets shakier than the original and is partly shifted by a huge amount in all directions. Please give me a hint to solve it. Thanks 😉

You may notice I've applied it to a video where I was on a scooter pointing the camera towards the road, and it works well. But to be fair, it wasn't high speed. If things are moving very fast in the video, the optical flow may have trouble tracking them. You can try increasing the number of optical flow pyramid levels (the maxLevel parameter of calcOpticalFlowPyrLK), but even that may not help.

thanks for it!

Dear Nghia Ho:

I have a puzzle about motion smoothing part:

After the jitter is calculated as the difference between the smoothed motion and the original accumulated motion, that difference is applied to each per-frame transform to get a new H. Why is the image warped by the new H and not by the accumulated new H?

Thanks

Best Regards

Chuck Ping

With respect to the graph, you want to apply the delta that will transform the red line (noisy) into the green line (smooth).
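In one dimension (translation only), the algebra behind this answer can be checked directly. Because C[k] = C[k-1] + T[k], one step of the new transform starting from C[k-1] lands exactly on the smoothed point S[k]. Each new transform therefore already carries the full correction for its own frame. Accumulating the new transforms would instead add up the corrections of all earlier frames. The function name `corrected` and the sample numbers are made up for illustration.

```python
from itertools import accumulate


def corrected(transforms, smoothed):
    """1-D translation-only version of step 4.

    Returns (new_transforms, trajectory), where trajectory[k] = T[0] + ... + T[k]
    and new_transforms[k] = T[k] + (S[k] - C[k]).
    """
    trajectory = list(accumulate(transforms))
    new = [t + (s - c) for t, s, c in zip(transforms, smoothed, trajectory)]
    return new, trajectory
```

With T = [1, 3, -2, 4, 0] and S = [1, 2, 2, 4, 5], the trajectory is [1, 4, 2, 6, 6] and the new transforms are [1, 1, -2, 2, -1]. Each C[k-1] + new[k] equals S[k], while the running sum of the new transforms, [1, 2, 0, 2, 1], does not follow S.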

Hello nghiaho12,

Nice article but I have a question

What is the purpose of k < max_frames-1) { // don't process the very last frame, no valid transform?

Why are we doing this? It would be really helpful if you could help me understand it.

Thank you

Hi,

Transforms are between 2 frames, e.g. prev -> cur. So for the last frame there is no transform for last -> last + 1. If you have only 3 frames, then you only have 2 transforms: 1 to 2 and 2 to 3.
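The frame/transform count can be seen in a tiny sketch. Pairing consecutive frames gives one (prev, cur) pair per transform, so n frames always yield n - 1 pairs. This is why the loop stops at max_frames - 1. The helper name is hypothetical.

```python
def frame_pairs(frames):
    """Consecutive (prev, cur) pairs: n frames yield n - 1 transforms."""
    return list(zip(frames, frames[1:]))
```

For example, frame_pairs(["f1", "f2", "f3"]) gives [("f1", "f2"), ("f2", "f3")], the two transforms described above.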