Last Updated on January 8, 2012 by nghiaho12

This post is about how to take advantage of the Harris corner detector to calculate dominant orientations for a feature patch, thus achieving rotation invariance. Popular examples of descriptors that do this are SIFT and SURF. I’ll present an alternative way that is simple to implement, especially if you’re already using Harris corners.

Background

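The Harris corner detector is an old school feature detector that is still used today. Given an NxN pixel patch, and the horizontal/vertical derivatives extracted from it (via Sobel for example), it accumulates the following matrix

$$A = \frac{1}{N^2}\begin{bmatrix} \sum I_x^2 & \sum I_x I_y \\ \sum I_x I_y & \sum I_y^2 \end{bmatrix}$$

where $\sum I_x^2$ is the summation of the squared derivatives in the x direction, $\sum I_y^2$ the same in the y direction, and $\sum I_x I_y$ the summation of their products, taken over every pixel. The $\frac{1}{N^2}$ averages the summation. Mathematically you don’t really need to do this, but in practice due to numerical overflow you want to keep the values in the matrix reasonably small. The 2×2 matrix above is the result of doing the following operation

Let

$$B = \begin{bmatrix} I_{x_1} & I_{y_1} \\ I_{x_2} & I_{y_2} \\ \vdots & \vdots \\ I_{x_{N^2}} & I_{y_{N^2}} \end{bmatrix}$$

where $I_{x_n}$ and $I_{y_n}$ are the derivatives at pixel n.

Using B we get

$$A = \frac{1}{N^2} B^T B$$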

You can think of matrix A as the covariance matrix, and the values in B are assumed to be centred around zero.
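Here’s a minimal sketch of accumulating A for a square patch in plain Python. It is not the script from this post: the function names `sobel_derivatives` and `harris_matrix` are made up for illustration, the patch is assumed to be a list of rows of numbers, and border pixels are simply left with zero derivatives.

```python
def sobel_derivatives(patch):
    """3x3 Sobel derivatives of a square patch given as a list of rows.

    Assumption of this sketch: border pixels keep a derivative of zero.
    """
    n = len(patch)
    ix = [[0.0] * n for _ in range(n)]
    iy = [[0.0] * n for _ in range(n)]
    for r in range(1, n - 1):
        for c in range(1, n - 1):
            # Horizontal derivative: right column minus left column.
            ix[r][c] = float(
                (patch[r - 1][c + 1] + 2 * patch[r][c + 1] + patch[r + 1][c + 1])
                - (patch[r - 1][c - 1] + 2 * patch[r][c - 1] + patch[r + 1][c - 1]))
            # Vertical derivative: bottom row minus top row.
            iy[r][c] = float(
                (patch[r + 1][c - 1] + 2 * patch[r + 1][c] + patch[r + 1][c + 1])
                - (patch[r - 1][c - 1] + 2 * patch[r - 1][c] + patch[r - 1][c + 1]))
    return ix, iy


def harris_matrix(ix, iy):
    """Accumulate the 2x2 matrix A, averaged over all N*N pixels."""
    n = len(ix)
    sxx = sxy = syy = 0.0
    for r in range(n):
        for c in range(n):
            sxx += ix[r][c] * ix[r][c]
            sxy += ix[r][c] * iy[r][c]
            syy += iy[r][c] * iy[r][c]
    scale = 1.0 / (n * n)
    return [[sxx * scale, sxy * scale],
            [sxy * scale, syy * scale]]
```

Calling `harris_matrix(*sobel_derivatives(patch))` gives A as a nested list, indexed from zero as `A[row][col]`.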

Once the matrix A is calculated there are a handful of ways to calculate the corner response of the patch, which I won’t be discussing here.

Rotation invariance

With the matrix A, the orientation of the patch can be calculated using the fact that the eigenvectors of A can be directly converted to a rotation angle as follows (note: matrices are indexed as A(row,col))

$$\text{angle} = \operatorname{atan2}\big(eig1 - A(1,1),\ A(1,2)\big)$$

eig1 is the larger of the two eigenvalues, which corresponds to the eigenvector

$$v_1 = \begin{bmatrix} A(1,2) \\ eig1 - A(1,1) \end{bmatrix}$$

The eigenvalue/eigenvector was calculated using an algebraic formula I found here.

I’ve found in practice that the above equation results in an uncertainty in the angle, giving two possibilities

angle1 = angle

angle2 = angle + 180 degrees

I believe this is because an eigenvector can point in two directions, both of which are correct. If v is an eigenvector then -v is legit as well. A negation means a 180 degree rotation. So there are in fact two dominant orientations for the patch. So how do we resolve this? We don’t, keep them both!
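As a rough sketch, the two candidate orientations can be computed from A like this. I’m assuming the standard closed-form eigenvalue of a symmetric 2×2 matrix here, which may be written differently from the formula linked in this post, and the function name `dominant_orientations` is just for illustration. Note that Python indexes from zero, so `A[0][1]` is A(1,2).

```python
import math


def dominant_orientations(A):
    """Return (angle1, angle2) in degrees, both in [0, 360).

    A is a symmetric 2x2 matrix given as nested lists, A[row][col].
    """
    a, b, d = A[0][0], A[0][1], A[1][1]
    # Larger eigenvalue of [[a, b], [b, d]] in closed form.
    eig1 = 0.5 * (a + d) + math.sqrt(0.25 * (a - d) ** 2 + b * b)
    # A matching eigenvector is (b, eig1 - a); its direction is the angle.
    angle = math.degrees(math.atan2(eig1 - a, b))
    # v and -v are both valid eigenvectors, so keep both directions.
    angle1 = angle % 360.0
    angle2 = (angle + 180.0) % 360.0
    return angle1, angle2
```

If b is zero and a is the larger diagonal entry, the eigenvector collapses to (0, 0). Python’s `math.atan2(0.0, 0.0)` returns 0.0, which happens to be the right direction for that case, but other languages may behave differently.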

Example

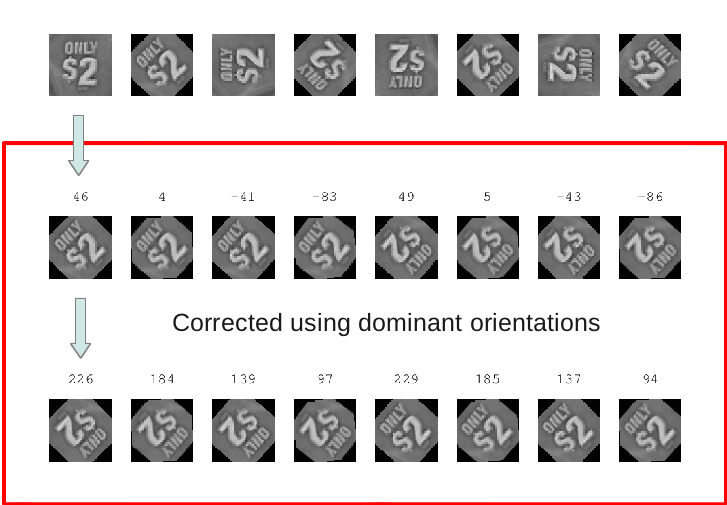

Here’s an example of a small 64×64 patch rotated from 0 to 360 degrees in 45 degree steps. The top row is the rotated patch, the second row is the rotation-corrected patch using angle1, and the third row is the same using angle2. The numbers at the top of the second/third rows are the estimated orientation angles, in degrees, of the rotated patches. You can see there are in fact only two possible appearances for the patch after it has been rotated using the dominant orientation.

Interestingly, the orientation angles seem to have a small error. For example, compare patch 1 and patch 5 (counting from the left). Patch 1 and patch 5 differ by 180 degrees, so they should give the same orientation, yet the orientations are 46 and 49 degrees respectively, a 3 degree difference. I think this might be due to the bilinear interpolation when I was using the imrotate function in Octave. I’ve tried using an odd size patch, e.g. 63×63, thinking it might be a centring issue when rotating, but I still get the same results. For now it’s not such a big deal.

Implementation notes

I used a standard 3×3 Sobel filter to get the pixel derivatives. When accumulating the A matrix, I only use pixels within the largest circle (radius 32) enclosed by the 64×64 patch, instead of all pixels. This makes the orientation more accurate, since the corners of the patch sometimes fall outside the image when it is rotated.
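The circular mask can be sketched roughly as follows. This is not the Octave script from this post: the name `harris_matrix_in_circle` is hypothetical, the circle centre is assumed to sit at (N-1)/2 with radius N/2, and the sums are averaged over the number of pixels actually used.

```python
def harris_matrix_in_circle(ix, iy):
    """Accumulate A using only pixels inside the patch's inscribed circle.

    ix, iy are N x N lists of derivatives. Returns (A, pixels_used).
    """
    n = len(ix)
    centre = (n - 1) / 2.0   # assumed centre, in pixel coordinates
    radius = n / 2.0         # 32 for a 64x64 patch
    sxx = sxy = syy = 0.0
    used = 0
    for r in range(n):
        for c in range(n):
            # Skip pixels outside the circle, e.g. the patch corners.
            if (r - centre) ** 2 + (c - centre) ** 2 > radius ** 2:
                continue
            sxx += ix[r][c] * ix[r][c]
            sxy += ix[r][c] * iy[r][c]
            syy += iy[r][c] * iy[r][c]
            used += 1
    A = [[sxx / used, sxy / used],
         [sxy / used, syy / used]]
    return A, used
```

Because the same set of pixels contributes no matter how the patch is rotated, the corner regions that get cut off or padded during rotation no longer affect A.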

Code

Here is the Octave script and image patch used to generate the image above (minus the annotation). Right click and save as to download.