Last Updated on December 26, 2011 by nghiaho12

So it’s Boxing Day and I haven’t got much on, so why not another blog post! yay!

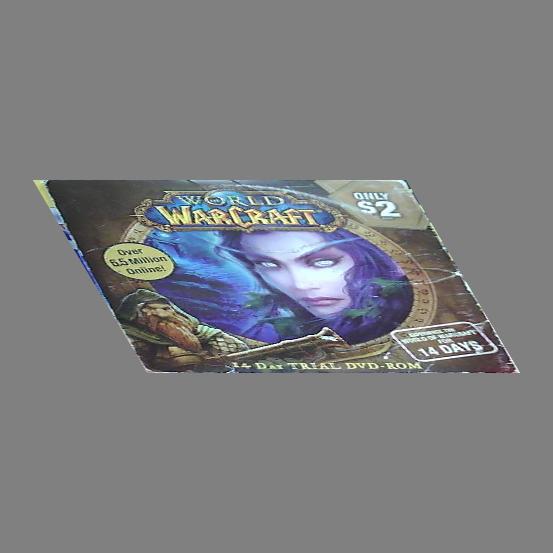

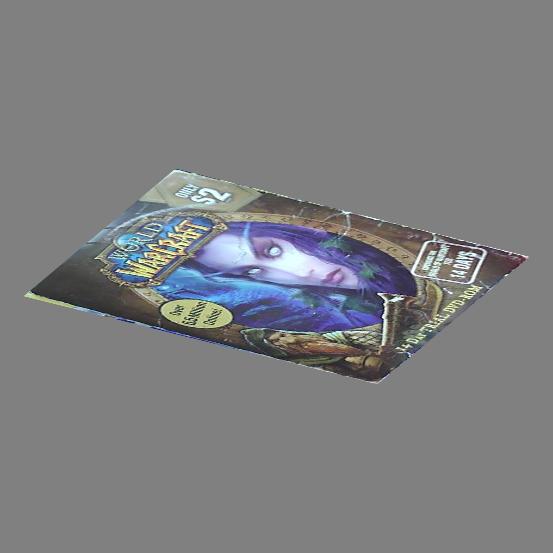

Today’s post is about generating synthetic views of planar objects, such as a book. I needed to do this whilst implementing my own version of the Fern algorithm. Here are some references:

- M. Ozuysal, M. Calonder, V. Lepetit and P. Fua, Fast Keypoint Recognition using Random Ferns, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 32, Nr. 3, pp. 448 – 461, March 2010.

- M. Ozuysal, P. Fua and V. Lepetit, Fast Keypoint Recognition in Ten Lines of Code, Conference on Computer Vision and Pattern Recognition, Minneapolis, MI, June 2007.

Also check out their website here.

In summary, it’s a cool technique for feature matching that is invariant to rotation, scale, lighting, and affine viewpoint changes, much like SIFT, but achieves this in a simpler way. Fern generates lots of random synthetic views of the planar object and learns the features extracted at each view using a semi-naive Bayes classifier. Because it has seen virtually every possible view, the feature descriptor can be very simple and does not need to be invariant to all the properties mentioned earlier. In fact, the feature descriptor is made up of random binary comparisons. This is in contrast to the more complex SIFT descriptor, which has to cater for all sorts of invariance.
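To make the "random binary comparisons" idea concrete, here is a minimal sketch in Python. It is only an illustration of the general idea, not the authors' implementation: the names `make_fern` and `fern_index` are made up for this example, the patch is a toy 4×4 grid, and the training step (counting how often each index occurs per keypoint class) is left out.

```python
import random

def make_fern(num_tests, patch_size, rng):
    """Pick num_tests random pixel pairs inside a patch_size x patch_size patch."""
    def rand_pixel():
        return rng.randrange(patch_size), rng.randrange(patch_size)
    return [(rand_pixel(), rand_pixel()) for _ in range(num_tests)]

def fern_index(patch, fern):
    """Pack the outcome of each intensity comparison into one integer."""
    index = 0
    for (r1, c1), (r2, c2) in fern:
        bit = 1 if patch[r1][c1] < patch[r2][c2] else 0
        index = (index << 1) | bit
    return index

rng = random.Random(0)  # fixed seed so the same tests are reused on every patch
fern = make_fern(num_tests=8, patch_size=4, rng=rng)

# Toy patch of grey levels, purely for demonstration
patch = [[(3 * r + 5 * c) % 17 for c in range(4)] for r in range(4)]
print(fern_index(patch, fern))
```

Eight comparisons give an index between 0 and 255. The descriptor itself knows nothing about rotation or scale, which is why the synthetic views have to supply that variation during training.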

I wrote a non-random planar view generator, which is much easier to interpret from a geometric point of view. I find the idea of random affine transformations tricky to interpret, since they’re a combination of translation, rotation, scaling and shearing. My version treats the planar object as a flat piece of paper in 3D space (with z=0), applies a 3×3 rotation matrix (parameterised by yaw/pitch/roll), then re-projects to 2D using an orthographic projection/affine camera (by keeping x,y and ignoring z). I repeat this process for every combination of scaling, yaw, pitch and roll I’m interested in.
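Here is a rough sketch of that geometry in plain Python. The axis convention is my assumption for illustration (pitch about x, yaw about y, roll about z, composed as Rz·Ry·Rx) and may not match the demo code, and the function names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_matrix(yaw, pitch, roll):
    """R = Rz(roll) * Ry(yaw) * Rx(pitch), angles in degrees (assumed convention)."""
    y, p, r = (math.radians(a) for a in (yaw, pitch, roll))
    rx = [[1, 0, 0],
          [0, math.cos(p), -math.sin(p)],
          [0, math.sin(p), math.cos(p)]]
    ry = [[math.cos(y), 0, math.sin(y)],
          [0, 1, 0],
          [-math.sin(y), 0, math.cos(y)]]
    rz = [[math.cos(r), -math.sin(r), 0],
          [math.sin(r), math.cos(r), 0],
          [0, 0, 1]]
    return matmul(rz, matmul(ry, rx))

def project_point(x, y, scale, rot):
    """Rotate the point (x, y, 0), then keep x, y and drop z (orthographic)."""
    u = scale * (rot[0][0] * x + rot[0][1] * y)
    v = scale * (rot[1][0] * x + rot[1][1] * y)
    return u, v

# Corners of a 200x100 image centred on the origin
corners = [(-100, -50), (100, -50), (100, 50), (-100, 50)]
rot = rotation_matrix(yaw=60, pitch=0, roll=0)
for x, y in corners:
    print(project_point(x, y, 1.0, rot))
```

Because z=0 on the plane and z is discarded after rotation, only the top-left 2×2 block of the rotation matrix matters, so the whole thing collapses to a 2D affine transform. Under the convention above, a yaw of 60 degrees squashes the width by cos(60°) = 0.5 and leaves the height alone. To warp an actual image, that 2×2 block plus a translation to re-centre the result could be handed to an affine warp such as OpenCV's `warpAffine`, which is not shown here.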

I use the following settings for Fern:

- scaling of 1.0, 0.5, 0.25

- yaw, 0 to 60 degrees, increments of 10 degrees

- pitch, 0 to 60 degrees, increments of 10 degrees

- roll, 0 to 360 degrees, increments of 10 degrees

There’s not much point going beyond 60 degrees for yaw/pitch, since you can hardly see the object.
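As a quick sanity check on how many views these settings produce, the combinations can be enumerated like this. One assumption on my part: I leave out roll = 360 because it gives the same view as roll = 0. The variable names are just for this example.

```python
from itertools import product

scales = [1.0, 0.5, 0.25]
yaws = range(0, 61, 10)      # 0, 10, ..., 60 degrees
pitches = range(0, 61, 10)   # 0, 10, ..., 60 degrees
rolls = range(0, 360, 10)    # assumption: 360 omitted, it duplicates 0

# Every (scale, yaw, pitch, roll) combination, one per synthetic view
views = list(product(scales, yaws, pitches, rolls))
print(len(views))
```

That is 3 × 7 × 7 × 36 = 5292 views under this assumption, so it is worth keeping the per-view work cheap.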

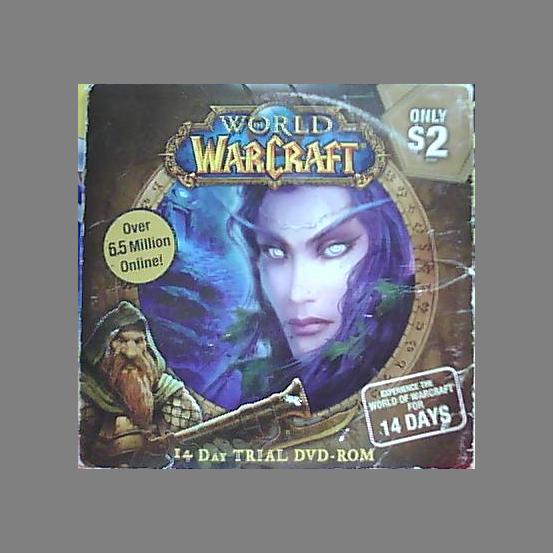

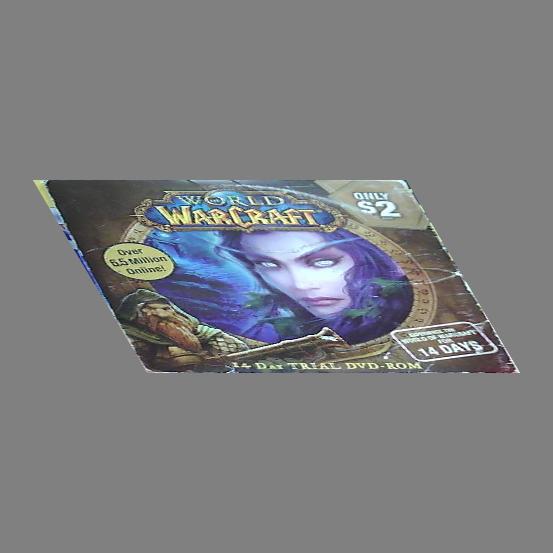

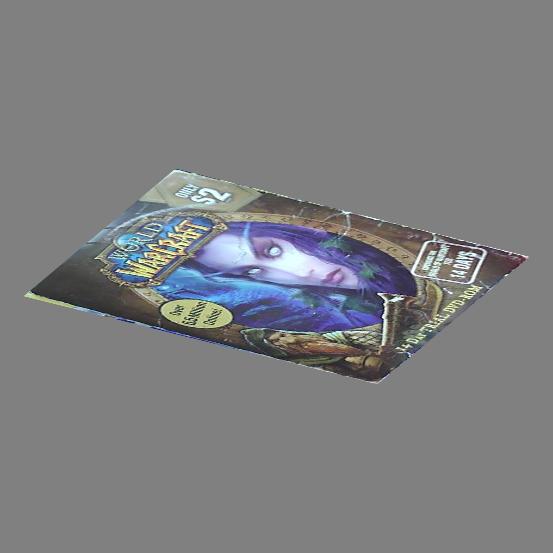

The images above are some example outputs.

Download

You can download my demo code here: SyntheticPlanarView.tar.gz

You will need OpenCV 2.x installed. Compile the code using the included CodeBlocks project or an IDE of your choice, then run it on the command line with an image as the argument. Hit any key to step through all the generated views.